See EDIT

I tried to implement the gaussian blur algorithm on my own in MATLAB instead of using the built-in algorithm for reasons of understanding it in detail.

I found an interesting implementation and someone has already asked how to code that kind of algorithm. So that is not the business.

Furthermore I use the following formula to calculate the standard deviation of given radius like GIMP does:

stdDeviation = sqrt(-(radius^2) / (2*log10(1 / 255)));

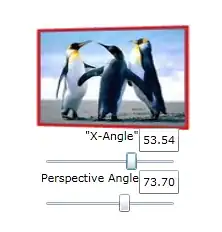

My algorithm works for small values of radius (e.g. 3,5,7) without any problems (you are not able to see the difference at least). If I try to blur an image with the radius of 21, the output is the following:

Compared to GIMP´s / MATLAB´s imgaussfilt(A,sigma) output:

Obviously the algorithms compute not the same (or at least similar) output image. What does GIMP / MATLAB´s imgaussfilt(A,sigma) do apart from that?

The image´s border can be neglected. I'm aware of that problem. But I do not understand the origin of the 'strange pixel stripes' in my output image.

For reasons of completeness, here is the source code:

function y = gaussianBlurSepTest(inputImage)

% radius in pixel; RADIUS MUST BE ODD!

radius = 21;

% approximate value for standard deviation

stdDeviation = sqrt(-(radius^2) / (2*log10(1 / 255)));

ind = -floor(radius/2):floor(radius/2);

[X, Y] = meshgrid(ind, ind);

h = exp(-(X.^2 + Y.^2) / (2*stdDeviation*stdDeviation));

h = h / sum(h(:));

redChannel = inputImage(:,:,1);

greenChannel = inputImage(:,:,2);

blueChannel = inputImage(:,:,3);

redBlurred = conv2(redChannel, h);

greenBlurred = conv2(greenChannel, h);

blueBlurred = conv2(blueChannel, h);

y = cat(3, uint8(redBlurred), uint8(greenBlurred), uint8(blueBlurred));

EDIT:

For the sake of completeness and to help others: I applied erfan´s modifications. The result is much better now, but one can still see an obvious difference to gimp´s calculations. GIMP´s result looks 'smoother'.

The implemented algorithm:

function y = gaussianBlurSepTest(inputImage)

radius = 21;

stdDeviation = sqrt(-(radius^2) / (2*log10(1 / 255)));

ind = -floor(radius/2):floor(radius/2);

[X, Y] = meshgrid(ind, ind);

[~, R] = cart2pol(X, Y); % Here R is defined

h = exp(-R.^2 / (2*stdDeviation*stdDeviation));

h = h / sum(h(:));

h(R > radius/2) = 0;

h = h / sum(h(:));

redChannel = inputImage(:,:,1);

greenChannel = inputImage(:,:,2);

blueChannel = inputImage(:,:,3);

redBlurred = conv2(redChannel, h);

greenBlurred = conv2(greenChannel, h);

blueBlurred = conv2(blueChannel, h);

y = cat(3, uint8(redBlurred), uint8(greenBlurred), uint8(blueBlurred));

In terms of answering the question completely and to help others with the same question I think it might be useful to ask for the origin of the differences.

Thank you!