Due to a seeming lack of a decent 2D histogram for CUDA (that I can find... pointers welcome), I'm trying to implement it myself with pyCUDA.

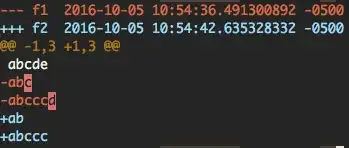

Here's what the histogram should look like (using Numpy):

Here's what I've got so far:

code = '''

__global__ void histogram2d(const float *in_x, const float *in_y, const float *in_w, float *out) {{

int start = blockIdx.x * blockDim.x + threadIdx.x;

float *block_out = &out[{xres} * {yres} * {num_chans} * blockIdx.x];

for(int i = 0; i < {length}; i++) {{

float x = in_x[start + i];

float y = in_y[start + i];

int w_idx = (start + i) * {num_chans};

int xbin = (int) (((x - {xmin}) / {xptp}) * {xres});

int ybin = (int) (((y - {ymin}) / {yptp}) * {yres});

if (0 <= xbin && xbin < {xres} && 0 <= ybin && ybin < {yres}) {{

for(int c = 0; c < {num_chans}; c++) {{

atomicAdd(&block_out[(ybin * {xres} + xbin) * {num_chans} + c], in_w[w_idx + c]);

}}

}}

}}

}}

'''.format(**args)

------

__global__ void histogram2d(const float *in_x, const float *in_y, const float *in_w, float *out) {

int start = blockIdx.x * blockDim.x + threadIdx.x;

float *block_out = &out[50 * 50 * 4 * blockIdx.x];

for(int i = 0; i < 100; i++) {

float x = in_x[start + i];

float y = in_y[start + i];

int w_idx = (start + i) * 4;

int xbin = (int) (((x - -10.0) / 20.0) * 50);

int ybin = (int) (((y - -10.0) / 20.0) * 50);

if (0 <= xbin && xbin < 50 && 0 <= ybin && ybin < 50) {

for(int c = 0; c < 4; c++) {

atomicAdd(&block_out[(ybin * 50 + xbin) * 4 + c], in_w[w_idx + c]);

}

}

}

}

There seems to be a problem with the indexing, but I haven't done much pure CUDA before, so I can't tell what it is. Here's what I think the equivalent python would be:

def slow_hist(in_x, in_y, in_w, out, blockx, blockdimx, threadx):

start = blockx * blockdimx + threadx

block_out_addr = args['xres'] * args['yres'], args['num_chans'] * blockx

for i in range(args['length']):

x = in_x[start + i]

y = in_y[start + i]

w_idx = (start + i) * args['num_chans']

xbin = int(((x - args['xmin']) / args['xptp']) * args['xres'])

ybin = int(((y - args['ymin']) / args['yptp']) * args['yres'])

if 0 <= xbin < args['xres'] and 0 <= ybin < args['yres']:

for c in range(args['num_chans']):

out[(ybin * args['xres'] + xbin) * args['num_chans'] + c] += in_w[w_idx + c]

All the code is viewable, including these images, at the Github page of this notebook (this cell is at the bottom).

What am I doing wrong in this CUDA code? I've tried lots of little tweaks (striding the atomicAdd address by 1, 4, 8, 16, transposing outputs, etc.), but it seems like I'm missing something subtle, probably in how the pointer arithmetic is working. Any help would be appreciated.