Check the update at the bottom of the question.

Summary: I have a dataset that does not behave linearly. I am trying to use Spark's MLlib (v1.5.2) to fit a model that behaves more like a polynomial function, but I always get a linear model as a result. I don't know whether it is possible to obtain a non-linear model using linear regression at all.
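For reference, the "linear" in linear regression means linear in the weights, not necessarily in the raw input. A polynomial of degree $d$ in a single input $x$ is still a linear model once $x$ is expanded into powers, so a linear regression can fit a curved function if it is given $\phi(x)$ instead of $x$ as its feature vector:

$$
\hat{y} = w_0 + w_1 x + w_2 x^2 + \dots + w_d x^d = \mathbf{w}^\top \phi(x),
\qquad
\phi(x) = \left(1, x, x^2, \dots, x^d\right)^\top
$$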

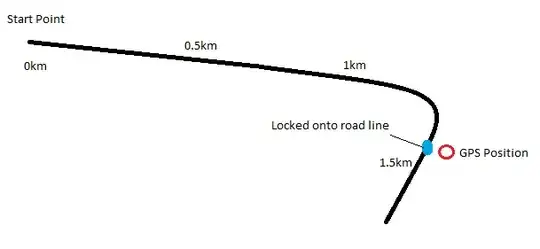

[TL;DR] I am trying to fit a model that represents sufficiently good the following data:

My code is very simple (pretty much the same as in every tutorial):

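```scala
import org.apache.spark.SparkContext
import org.apache.spark.mllib.linalg.Vectors
import org.apache.spark.mllib.regression.{LabeledPoint, LinearRegressionWithSGD}

object LinearRegressionTest {

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext("local[2]", "Linear Regression")

    // Each CSV line: the label is read from column 1, the single feature from column 2.
    val data = sc.textFile("data2.csv")
    val parsedData = data.map { line =>
      val parts = line.split(',')
      LabeledPoint(parts(1).toDouble, Vectors.dense(parts(2).toDouble))
    }.cache()

    // Train with stochastic gradient descent (RDD-based MLlib API).
    val numIterations = 1000
    val stepSize = 0.001
    val model = LinearRegressionWithSGD.train(parsedData, numIterations, stepSize)

    sc.stop()
  }
}
```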

The predictions are in the right range; however, they always lie on a monotonically increasing straight line. I am trying to wrap my head around it, but I cannot figure out why a better curve is not being fitted.

Any tips?

Thanks everyone

Update

The problem was caused by the version of the Spark and spark-ml libraries that we were using. For some reason, version 1.5.2 did not fit a better curve even though I provided more features (squared or cubic versions of the input data). After upgrading to version 2.0.0 and switching from the deprecated LinearRegressionWithSGD to LinearRegression from the main (DataFrame-based) API rather than the RDD API, the algorithm behaved as expected, and the new model fitted the right curve.
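Adding squared or cubic versions of the input works because the model stays linear in its weights while the feature vector becomes a polynomial expansion of x. The sketch below illustrates this idea in plain Scala, without Spark, so it can run on its own. It is not the author's code or Spark's implementation: `polyFeatures`, `fit` and `predict` are hypothetical helpers, the data is synthetic (not the question's dataset), and the weights are found with the least-squares normal equations instead of SGD or Spark's LinearRegression.

```scala
// Hypothetical, self-contained sketch: polynomial regression as linear regression
// on expanded features. Plain Scala, no Spark dependency.
object PolynomialFitSketch {

  // Expand a scalar x into [1, x, x^2, ..., x^degree]; the leading 1 acts as the intercept term.
  def polyFeatures(x: Double, degree: Int): Array[Double] =
    Array.tabulate(degree + 1)(p => math.pow(x, p))

  // Ordinary least squares via the normal equations (X^T X) w = X^T y,
  // solved with Gaussian elimination and partial pivoting.
  def fit(xs: Seq[Double], ys: Seq[Double], degree: Int): Array[Double] = {
    val n = degree + 1
    val a = Array.ofDim[Double](n, n + 1) // augmented matrix [X^T X | X^T y]
    for ((x, y) <- xs.zip(ys)) {
      val f = polyFeatures(x, degree)
      for (i <- 0 until n) {
        for (j <- 0 until n) a(i)(j) += f(i) * f(j)
        a(i)(n) += f(i) * y
      }
    }
    // Forward elimination.
    for (col <- 0 until n) {
      // Swap in the row with the largest pivot for numerical stability.
      val pivot = (col until n).maxBy(r => math.abs(a(r)(col)))
      val tmp = a(col); a(col) = a(pivot); a(pivot) = tmp
      for (r <- col + 1 until n) {
        val factor = a(r)(col) / a(col)(col)
        for (c <- col to n) a(r)(c) -= factor * a(col)(c)
      }
    }
    // Back substitution.
    val w = new Array[Double](n)
    for (i <- n - 1 to 0 by -1) {
      val s = (i + 1 until n).map(j => a(i)(j) * w(j)).sum
      w(i) = (a(i)(n) - s) / a(i)(i)
    }
    w
  }

  // Prediction is a dot product of the weights with the expanded features.
  def predict(w: Array[Double], x: Double): Double =
    w.zip(polyFeatures(x, w.length - 1)).map { case (wi, fi) => wi * fi }.sum

  def main(args: Array[String]): Unit = {
    // Synthetic, clearly non-linear data: y = 2 - 3x + 0.5x^2.
    val xs = (-10 to 10).map(_.toDouble)
    val ys = xs.map(x => 2 - 3 * x + 0.5 * x * x)
    val w = fit(xs, ys, degree = 2)
    println(w.map(v => f"$v%.3f").mkString("w = [", ", ", "]"))
    println(f"prediction at x = 4: ${predict(w, 4.0)}%.3f")
  }
}
```

Because the synthetic data is an exact quadratic, the fitted weights should come out very close to 2, -3 and 0.5, and the predictions follow a parabola rather than a straight line. In Spark the same expanded vector would simply become the feature vector of each LabeledPoint, or, with the DataFrame-based API, the output of a feature transformer such as PolynomialExpansion.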