(I'm asking this in the context of OpenCV, but answers using other libraries or general computer vision techniques are welcome.)

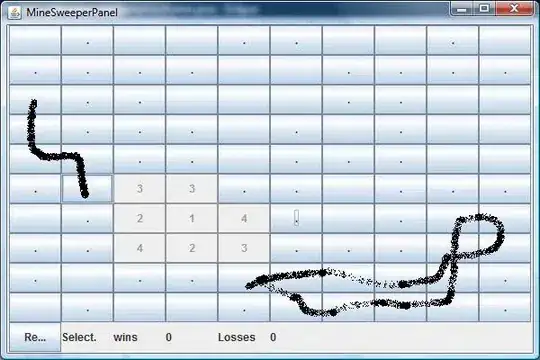

When doing background subtraction, for example with MOG2, the resulting foreground mask often has gaps inside an object, so applying the mask leaves data missing (see the sample mask below). What's a good way in OpenCV (preferably in Python) to "close" the object's edges and then fill in the mask inside the closed edges (see the example image below, which I created manually)? A key issue is that when a chunk is missing from the edge itself, OpenCV needs some way to continue the edge across the gap so that the chunk can be closed and filled.

For example, suppose background subtraction is used to detect walking people. After this "fill in" step, applying the mask to the image should give a person cut out of the background with no chunks missing from their body or clothing, which is not what you get if the raw mask is applied directly.

I understand there may be cases where too much of a person's skin or clothing matches the background, so the approach may not work perfectly every time, but I'm at least looking for something that works reasonably well.

The goal behind all this is to extract moving objects so that Haar/LBP matching can be run on them to identify them more efficiently (by restricting the search to regions of interest) and more accurately (by removing background that could cause confusion).

ORIGINAL

IDEALLY WOULD BECOME SOMETHING LIKE THIS