IF OBJECT_ID('TEMPDB.DBO.#language_check', 'U') IS NOT NULL
    DROP TABLE #language_check;

CREATE TABLE #language_check (
    id INT IDENTITY(1, 1) NOT NULL,
    language_Used VARCHAR(50),
    uni_code NVARCHAR(2000)
);

INSERT INTO #language_check (
    language_Used,
    uni_code
)
VALUES (
    'English',
    N'Hello world! this is a text input for the table'
),
(
    'German',
    N'Gemäß den geltenden Datenschutzgesetzen bitten wir Sie, sich kurz Zeit zu nehmen und die wichtigsten Punkte der Datenschutzerklärung von Google durchzulesen. Dabei geht es nicht um von uns vorgenommene Änderungen, sondern darum, dass Sie sich mit den wichtigsten Punkten vertraut machen sollten. Sie können Ihre Einwilligung jederzeit mit Wirkung für die Zukunft widerrufen.'
),
(
    'Spanish',
    N'Bajo Rs 30.000, tanto los teléfonos tienen sus propios méritos y deméritos. El OnePlus 3 puntuaciones claramente muy por delante del Xiaomi MI 5 y también mucho más que muchos teléfonos inteligentes insignia en el mercado.'
),
(
    'Czech',
    N'To je příklad.'
),
(
    'Arabic',
    N'هذا مثال على ذلك.'
);

SELECT *
FROM #language_check;

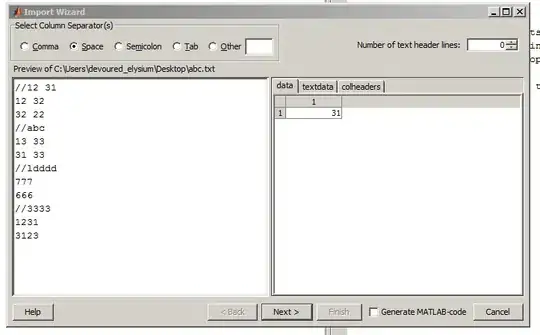

This returns the result shown above.

I then added a VARCHAR column, nonunicode, to the table above.

When I insert the rows and check the result, the uni_code and nonunicode columns hold the same values, except for the Arabic row, where the nonunicode column shows "?????".
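The exact statements for this step are not shown, so here is a minimal sketch of what it could look like. The column size of 2000 and the use of an UPDATE to copy the text are assumptions for illustration; only the column name nonunicode comes from the description above.

```sql
-- Assumed reconstruction of the step described above; not the original statements.
ALTER TABLE #language_check
    ADD nonunicode VARCHAR(2000); -- size is an assumption, chosen to match uni_code
GO -- batch separator (SSMS/sqlcmd), so the next batch can see the new column

-- Copy the Unicode text into the VARCHAR column.
-- The NVARCHAR value is implicitly converted to VARCHAR here.
UPDATE #language_check
SET nonunicode = uni_code;

-- Show both columns side by side for comparison.
SELECT id, language_Used, uni_code, nonunicode
FROM #language_check;
```

The temporary table stays available across batches in the same session, so this can be run right after the script at the top.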

- Can anyone explain why the uni_code and nonunicode columns hold the same values?

- Why do the two columns differ for the Arabic row?

Thanks in advance.