This question is about making any nnGraph network run on multiple GPUs and not specific to the following network instance

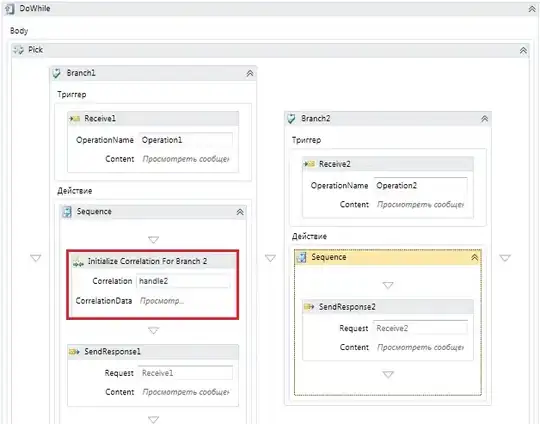

I am trying to train a network which is constructed with nnGraph. The backward diagram is attached. I am trying to run the parallelModel (see code or fig Node 9) in a multi-GPU setting. If I attach the parallel model to a nn.Sequential container and then create a DataParallelTable it works in a multi-GPU setting (without nnGraph). However, after attaching it to nnGraph I get an error. The backward pass works if I train on a single GPU (setting true to false in the if statements), but in a multi-GPU setting I get an error "gmodule.lua:418: attempt to index local 'gradInput' (a nil value)". I think Node 9 in backward pass should run on multiple-GPUs, however that's not happening. Creating DataParallelTable on nnGraph didn't work for me, however I thought atleast putting internal Sequential networks in a DataParallelTable would work. Is there some other way to split the initial data which is being passed to nnGraph so that it runs on multiple-GPUs?

require 'torch'

require 'nn'

require 'cudnn'

require 'cunn'

require 'cutorch'

require 'nngraph'

data1 = torch.ones(4,20):cuda()

data2 = torch.ones(4,10):cuda()

tmodel = nn.Sequential()

tmodel:add(nn.Linear(20,10))

tmodel:add(nn.Linear(10,10))

parallelModel = nn.ParallelTable()

parallelModel:add(tmodel)

parallelModel:add(nn.Identity())

parallelModel:add(nn.Identity())

model = parallelModel

if true then

local function sharingKey(m)

local key = torch.type(m)

if m.__shareGradInputKey then

key = key .. ':' .. m.__shareGradInputKey

end

return key

end

-- Share gradInput for memory efficient backprop

local cache = {}

model:apply(function(m)

local moduleType = torch.type(m)

if torch.isTensor(m.gradInput) and moduleType ~= 'nn.ConcatTable' then

local key = sharingKey(m)

if cache[key] == nil then

cache[key] = torch.CudaStorage(1)

end

m.gradInput = torch.CudaTensor(cache[key], 1, 0)

end

end)

end

if true then

cudnn.fastest = true

cudnn.benchmark = true

-- Wrap the model with DataParallelTable, if using more than one GPU

local gpus = torch.range(1, 2):totable()

local fastest, benchmark = cudnn.fastest, cudnn.benchmark

local dpt = nn.DataParallelTable(1, true, true)

:add(model, gpus)

:threads(function()

local cudnn = require 'cudnn'

cudnn.fastest, cudnn.benchmark = fastest, benchmark

end)

dpt.gradInput = nil

model = dpt:cuda()

end

newmodel = nn.Sequential()

newmodel:add(model)

input1 = nn.Identity()()

input2 = nn.Identity()()

input3 = nn.Identity()()

out = newmodel({input1,input2,input3})

r1 = nn.NarrowTable(1,2)(out)

r2 = nn.NarrowTable(2,2)(out)

f1 = nn.JoinTable(2)(r1)

f2 = nn.JoinTable(2)(r2)

n1 = nn.Sequential()

n1:add(nn.Linear(20,5))

n2 = nn.Sequential()

n2:add(nn.Linear(20,5))

f11 = n1(f1)

f12 = n2(f2)

foutput = nn.JoinTable(2)({f11,f12})

g = nn.gModule({input1,input2,input3},{foutput})

g = g:cuda()

g:forward({data1, data2, data2})

g:backward({data1, data2, data2}, torch.rand(4,10):cuda())

The code in the "if" statements is taken from Facebook's ResNet implementation