I have a dataset with two columns: `company` and `value`.

It has a datetime index that contains duplicates (on the same day, different companies have different values). Some values are missing, and I want to forward fill each missing value with the previous datapoint from the same company.
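To make the intended semantics concrete, here is a minimal sketch on a hypothetical frame (`df` and `value_filled` are illustrative names, not from my real data). It uses a plain unique index, so only the group-wise behaviour is shown:

```python
import pandas as pd

# Hypothetical frame with a unique RangeIndex; rows are already in time order.
df = pd.DataFrame({
    "company": ["a", "b", "a", "b", "a", "b"],
    "value":   [1.0, 12.0, 2.0, None, None, 14.0],
})

# Forward fill within each company: a NaN takes the last non-missing value
# of the same company, never a value belonging to a different company.
df["value_filled"] = df.groupby("company")["value"].ffill()
print(df)
```

Here company `a` ends up as 1, 2, 2 and company `b` as 12, 12, 14.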

However, I can't seem to find a good way to do this without running into odd groupby errors, suggesting that I'm doing something wrong.

Toy data:

```python
import pandas as pd

a = pd.DataFrame({'a': [1, 2, None], 'b': [12, None, 14]})
a.index = pd.DatetimeIndex(['2010', '2011', '2012'])
a = a.unstack()
a = a.reset_index().set_index('level_1')
a.columns = ['company', 'value']
a.sort_index(inplace=True)
```

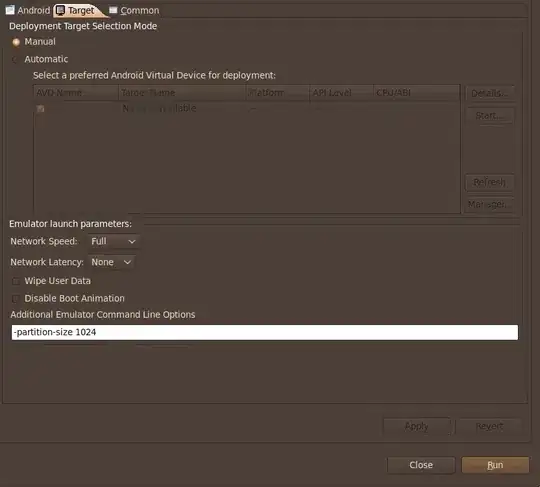

Attempted solutions (these failed with `ValueError: cannot reindex from a duplicate axis`):

```python
a.groupby('company').ffill()
a.groupby('company')['value'].ffill()
a.groupby('company').fillna(method='ffill')
```
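For reference, this message is what pandas raises whenever it has to reindex (align) an axis that contains duplicate labels. My guess, not confirmed, is that the duplicate dates rather than the groupby itself are the trigger. Here is a minimal sketch that reproduces the error without any groupby (the exact wording differs between pandas versions):

```python
import pandas as pd

# A Series whose index repeats a label, like the duplicate dates in the toy data.
s = pd.Series([1.0, 2.0], index=["2010", "2010"])

raised = False
try:
    # Reindexing needs a one-to-one label lookup, which a duplicate label breaks.
    s.reindex(["2010", "2011"])
except ValueError as exc:
    raised = True
    print("ValueError:", exc)
```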

Hacky solution (it produces the desired result, but it is clearly an ugly workaround):

```python
a['value'] = a.reset_index().groupby('company').fillna(method='ffill')['value'].values
```
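A somewhat tidier variant of the same idea is sketched below. It assumes the only obstacle is the duplicate datetime index. The sketch does the group-wise forward fill on a temporary unique `RangeIndex`, then restores the dates. `filled` is just an illustrative name, and `level_1` is the index name that the toy-data code produces.

```python
import pandas as pd

# Rebuild the toy data from the question.
a = pd.DataFrame({"a": [1, 2, None], "b": [12, None, 14]})
a.index = pd.DatetimeIndex(["2010", "2011", "2012"])
a = a.unstack().reset_index().set_index("level_1")
a.columns = ["company", "value"]
a = a.sort_index(kind="mergesort")  # stable sort keeps "a" before "b" on equal dates

# Move the dates into a column so the frame has a unique RangeIndex,
# forward fill within each company, then put the datetime index back.
filled = (
    a.reset_index()
     .assign(value=lambda d: d.groupby("company")["value"].ffill())
     .set_index("level_1")
)
print(filled)
```

Because the fill happens while the index is a plain `RangeIndex`, no step has to align on duplicate dates. The final `set_index` restores the original (duplicated) datetime index.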

There is probably a simple and elegant way to do this. How is it done in pandas?