Im looking for a solution for what appears to be a complex problem. Basically, I need to find the fastest and most reliable way to create one "Master" database (system of records) based on data from multiple other "Distributed" systems of records. Let me break down the problem: I have N system of records that each have a web service attached and respond to requests for data. Every one of the N web services has standardized the response object schema, so the XML/JSON response data schema is exactly the same for each web service. The mission is to create one single "Master" database that is a reflection of all the N system of records in the fastest way possible. (Basically, a merge of all N databases periodically through some procedure/application/program/etc.).

The only way to obtain the data from each of the "distributed" system of records is by interacting with their respective web services. This is the worldwide standard agreed upon by all of the N system of record owners.

What is the most efficient way to tackle this problem?

Here is one approach that was considered, but I think this approach is not optimal: Creating a C# program that will concurrently/asynchrounously request data from each of the web services, and push that data into a staging environment in SQL Server. SQL Server would then run procedures to merge that data into a master database (Currently this would take 17 hours to complete, obviously this is a long time)

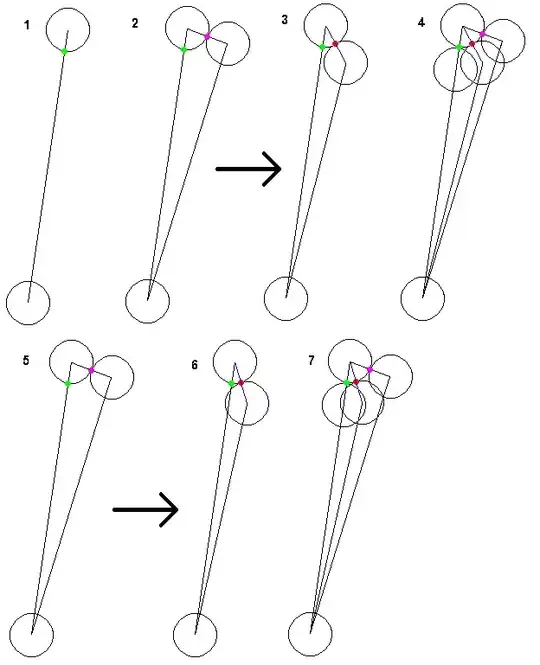

Here is a basic drawing of the moving parts, the "??" in the middle represents the solution