This is very weird behavior, but I think I have narrowed the problem down as far as I can.

I have an NSImage; let's call it inputImage. It is backed by an NSBitmapImageRep in a device gray color space (CGColorSpaceCreateDeviceGray), if that matters.

I want to create a CGImageRef from it, but with inverted colors.

```objc
NSData *data = [inputImage TIFFRepresentation];
CGDataProviderRef provider = CGDataProviderCreateWithCFData((__bridge CFDataRef)data);

NSBitmapImageRep *maskedRep = (NSBitmapImageRep *)[inputImage representations][0];

CGFloat decode[] = {0.0, 1.0};

CGImageRef maskRef = CGImageMaskCreate(maskedRep.pixelsWide,
                                       maskedRep.pixelsHigh,
                                       8,                      // bits per component
                                       maskedRep.bitsPerPixel,
                                       maskedRep.bytesPerRow,
                                       provider,
                                       decode,
                                       false);                 // shouldInterpolate
CGDataProviderRelease(provider); // the mask keeps its own reference to the provider

NSImage *testImage = [[NSImage alloc] initWithCGImage:maskRef size:NSMakeSize(1280, 1185)]; // the size of the image, hard-coded for testing

NSLog(@"testimage: %@", testImage);
```

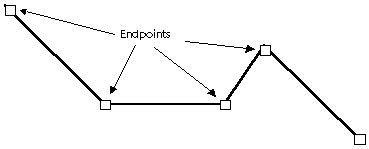

The problem is that when I look at testImage, all of the pixels are shifted slightly to the right relative to the original image.

It's much easier to see if you save both images to disk, but everything in testImage is offset to the right by about 5 pixels. There is a white gap to the left of the black content in testImage.

Somewhere in these few lines of code I am shifting my image over. Does anybody have any idea how this could be happening? I currently suspect TIFFRepresentation.