I've been playing around with the enhanced samples and have read the full SDK documentation, but I can't figure out this problem. I'm trying to convert a touch on self.cameraView to an ARVector3 so I can move the 3D model to that position. At the moment I'm trying to convert the CGPoint from the tap gesture to a position in the world ARNode, but with no luck so far.

```objc
// Location of the tap in self.cameraView's coordinate space (points, origin top-left)
CGPoint gesturePoint = [gesture locationInView:self.cameraView];

// Attempt to convert the 2D view-port point to a position in the ArbiTrack world
ARVector3 *newPosition = [arbiTrack.world nodeFromViewPort:gesturePoint];

// Node carrying an image marker that shows where the tap landed
ARNode *touchNode = [ARNode nodeWithName:@"touchNode"];
ARImageNode *targetImageNode = [[ARImageNode alloc] initWithImage:[UIImage imageNamed:@"drop.png"]];
[touchNode addChild:targetImageNode];
[targetImageNode scaleByUniform:1];

// Place the marker at the converted position and add it to the world
touchNode.position = newPosition;
[arbiTrack.world addChild:touchNode];
```

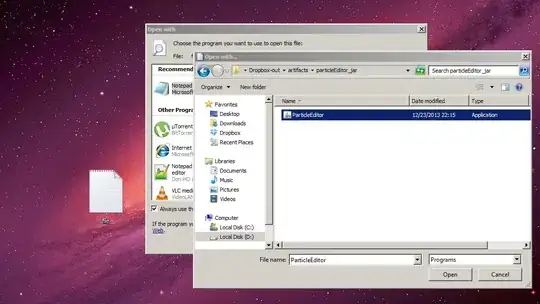

This results in the following situation:

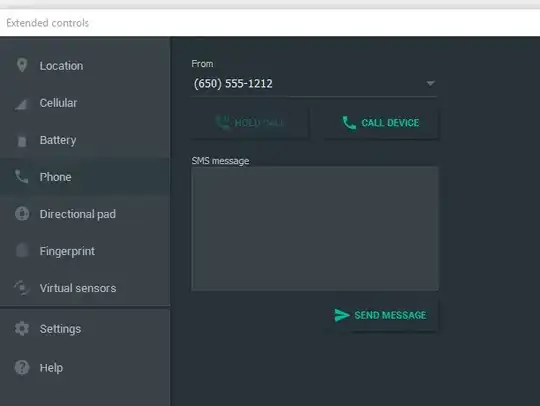

And seen on my iPhone:

Why does a tap in the upper-left corner of self.cameraView produce a point that is so close by? What I actually want is to tap on the screen and get an X, Y, Z coordinate (an ARVector3) back.