I have a 9.6GB CSV file from which I would like to create an on-disk splayed table.

When I run the code below, my 32-bit q process (on a Windows 10 machine with 16GB RAM) runs out of memory ('wsfull) and crashes after creating an incomplete 4.68GB splayed table (see the screenshot).

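    / join a list of symbols into a file handle, e.g. `:F:`db`ohlcv -> `:F:/db/ohlcv
    path:{` sv (hsym x 0), 1_x}
    / strip the leading colon from a file handle
    symh: {`$1_ string x}
    colnames: `ric`open`high`low`close`volume`date
    dir: `:F:
    db: `db
    tbl: `ohlcv
    tbldisk: path dir,db,tbl
    / the trailing empty symbol yields a trailing slash, i.e. a splayed-table directory
    tblsplayed: path dir,db,tbl,`
    dbsympath: symh path dir,db
    csvpath: `:F:/prices.csv
    / parse each chunk, enumerate symbols against the db sym file, append to the splayed table
    .Q.fs[{ .[ tblsplayed; (); ,; .Q.en[path dir,db] flip colnames!("SFFFFID";",")0:x]}] csvpath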

What exactly is going on in memory and on disk behind the scenes when the CSV file is read with .Q.fs and 0:? Is the CSV read row by row or column by column?

I thought that only chunks of roughly 131kB are held in memory at any given time, and I was hoping that .Q.fs would be 'wsfull resistant.
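One way to check the chunking assumption might be to print how many lines each callback invocation receives. The sketch below uses `.Q.fsn`, the variant of `.Q.fs` that takes an explicit chunk size in bytes. The file name `fs_demo.csv` and the 2000-byte chunk size are made up for illustration, so that the snippet runs on its own.

```q
/ write a small throwaway CSV (hypothetical file name) so the sketch is self-contained
demo: `:fs_demo.csv
demo 0: enlist["ric,open"], {"A,",string x} each til 1000

/ .Q.fsn[f;file;chunkSizeInBytes]: f is called once per chunk with a list of complete lines
/ here f only prints the number of lines it was given
.Q.fsn[{0N!count x}; demo; 2000]
```

For the real file, `demo` would be replaced by `csvpath` and the chunk size by whatever value is being tested.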

Is the q process actually reading each whole column (splay file) into memory, one at a time, as it appends the chunks?

According to this source, among others:

> on 32-bit systems the main memory OLTP portion of a database is limited to about 1GB of raw data, i.e. 1/4 of the address space

That would nearly explain running out of memory. As the screenshot below shows, taken right after the 'wsfull, a couple of columns are near the 1GB limit.

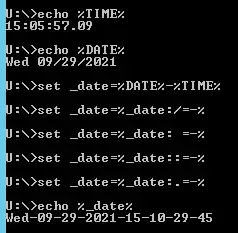
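The column file sizes in the screenshot could also be read from within q, which makes it easier to compare them against the 1GB figure after each run. This is only a sketch: `colsizes` is a hypothetical helper name, and the small `colsizes_demo` table exists just so that the snippet runs on its own.

```q
/ colsizes: hypothetical helper, not part of q
/ returns a dictionary of column name -> file size in bytes for a splayed table directory
colsizes:{[tdir;cnames] cnames!hcount each .Q.dd[tdir] each cnames}

/ tiny splayed table (made-up name) to try the helper on
`:colsizes_demo/ set ([] a:1 2 3; b:1 2 3f)
colsizes[`:colsizes_demo; `a`b]
```

With the names from the question, the call would be `colsizes[tbldisk; colnames]`.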

Here is a run with memory profiling:

    .Q.fs[{ 0N!.Q.w[]; .[ tblsplayed; (); ,; .Q.en[path dir,db] flip colnames!("SFFFFID";",")0:x]}] csvpath
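The full `.Q.w[]` dictionary is fairly noisy when printed for every chunk. A possible refinement, sketched below, prints only the used, heap and mmap fields, scaled to MB, so that growth in allocated memory can be told apart from growth in memory-mapped file data. `memlog` is a hypothetical helper name.

```q
/ memlog: hypothetical helper that prints selected .Q.w[] fields in MB
/ used = bytes allocated, heap = heap bytes available, mmap = bytes mapped from files
memlog:{0N!`used`heap`mmap#.Q.w[] div 1000000; }

memlog[]
```

In the run above, `0N!.Q.w[]` inside the callback would then become `memlog[]`.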