We ran into the same confusion. The Average, Minimum, and Maximum statistics are calculated across the individual nodes, whereas Sum combines the free/used space of all nodes into a figure for the whole cluster.

We had assumed that the Average of FreeStorageSpace meant the average free storage space of the whole cluster, and set an alarm based on the following calculation:

- Per day index = 1 TB

- Max days to keep indices = 10

Hence we had an average utilization of 10 TB at any point in time. Assuming we would grow to 2x, i.e. 20 TB, our actual storage need as per https://docs.aws.amazon.com/elasticsearch-service/latest/developerguide/sizing-domains.html#aes-bp-storage with a replication factor of 2 was:

(20 * 2 * 1.1 / 0.95 / 0.8) = 57.89 =~ 60 TB

So we provisioned 18 x 3.8 TB instances =~ 68 TB to accommodate the 2x requirement of 60 TB.
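The arithmetic above can be checked with a short Python sketch. The function name is made up for illustration, and the comments on what each factor stands for (10% indexing overhead, 5% OS reserved space, 20% service overhead) are my reading of the linked sizing guide, not something stated in the numbers above:

```python
def required_storage_tb(source_tb: float, copies: int) -> float:
    """Rough storage requirement following the sizing formula used above.

    copies = primary + replicas (2 in our case).
    """
    indexing_overhead = 1.1   # assumed: +10% indexing overhead
    os_reserved = 0.95        # assumed: 5% reserved by the OS
    service_overhead = 0.8    # assumed: 20% kept back by the service
    return source_tb * copies * indexing_overhead / os_reserved / service_overhead


required = required_storage_tb(source_tb=20, copies=2)
provisioned = 18 * 3.8  # 18 instances with 3.8 TB each

print(f"required    ~ {required:.2f} TB")     # 57.89
print(f"provisioned ~ {provisioned:.1f} TB")  # 68.4
```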

We reasoned that dropping below 8 TB of free storage (68 TB - 60 TB) would mean we had hit our 2x limit and should scale up. Hence we set the alarm:

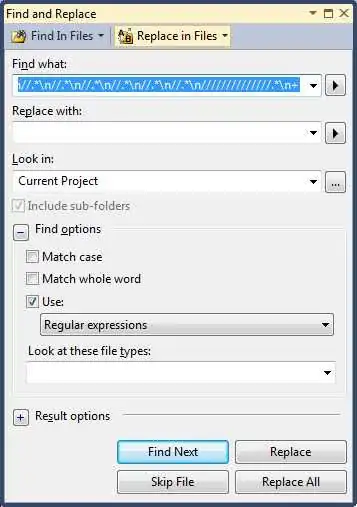

FreeStorageSpace <= 8388608.00 for 4 datapoints within 5 minutes + Statistic=Average + Duration=1minute

FreeStorageSpace is reported in MB, hence 8 TB = 8388608 MB.

But we were alerted immediately, because the average free space per node was below 8 TB (each node has only 3.8 TB in total).
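To see why the Average-based alarm could never have been quiet, here is a small illustrative sketch. It assumes the best case, where every one of the 18 nodes is completely empty, and uses the same 1 TB = 1024 * 1024 MB conversion as the threshold above; the variable names are only for illustration:

```python
MB_PER_TB = 1024 * 1024
NODES = 18
NODE_CAPACITY_MB = 3.8 * MB_PER_TB
THRESHOLD_MB = 8 * MB_PER_TB  # 8388608

# Best case: every node reports its full capacity as free space.
per_node_free_mb = [NODE_CAPACITY_MB] * NODES

average_free_mb = sum(per_node_free_mb) / len(per_node_free_mb)  # per-node view
total_free_mb = sum(per_node_free_mb)                            # cluster-wide view

# Average is capped at 3.8 TB, so "<= 8 TB" is always true and the alarm fires.
print(average_free_mb <= THRESHOLD_MB)  # True
# Sum reflects the whole cluster (about 68 TB when empty), so the alarm stays quiet.
print(total_free_mb <= THRESHOLD_MB)    # False
```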

After realizing that the accurate cluster-wide figure is the Sum of FreeStorageSpace over 1 minute, we set the alarm as:

FreeStorageSpace <= 8388608.00 for 4 datapoints within 5 minutes + Statistic=Sum + Duration=1minute

The above calculation checked out and we were able to set the right alarms.
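If you prefer to define the alarm in code rather than in the console, the settings above map roughly onto the parameters of CloudWatch's `put_metric_alarm`. This is only a sketch: the helper function, alarm name, domain name, and account ID are placeholders, and I am assuming "4 datapoints within 5 minutes" translates to `DatapointsToAlarm=4` with `EvaluationPeriods=5` at a 60-second period:

```python
def build_free_storage_alarm(domain_name: str, client_id: str) -> dict:
    """Build keyword arguments for CloudWatch put_metric_alarm.

    domain_name and client_id (the AWS account ID) are placeholders
    that you need to supply for your own domain.
    """
    return {
        "AlarmName": f"{domain_name}-free-storage-low",  # hypothetical name
        "Namespace": "AWS/ES",
        "MetricName": "FreeStorageSpace",
        "Dimensions": [
            {"Name": "DomainName", "Value": domain_name},
            {"Name": "ClientId", "Value": client_id},
        ],
        "Statistic": "Sum",        # cluster-wide total, not per-node average
        "Period": 60,              # 1 minute
        "EvaluationPeriods": 5,    # look at the last 5 minutes
        "DatapointsToAlarm": 4,    # alarm when 4 of them breach
        "Threshold": 8388608.0,    # 8 TB expressed in MB
        "ComparisonOperator": "LessThanOrEqualToThreshold",
    }


kwargs = build_free_storage_alarm("my-domain", "123456789012")

# To actually create the alarm (requires boto3 and AWS credentials):
# import boto3
# boto3.client("cloudwatch").put_metric_alarm(**kwargs)
```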

The same applies to the ClusterUsedSpace calculation.

You should also track the actual free space percentage using CloudWatch metric math: