I am implementing a pattern search algorithm with a critical if statement whose outcome seems to be unpredictable. The files being searched are arbitrary, so sometimes the branch predictions are okay and sometimes they are terrible, for example when the file is completely random. My goal is to eliminate the if statement. I have tried, but the alternatives, such as preallocating a vector, were slower. The number of possible patterns can be very large, so preallocating takes a lot of time. I therefore use a vector of pointers that are all initialized to NULL up front, and then check with the if statement whether a pattern is already present. The if, and specifically the cmp instruction it compiles to, seems to be killing me. Bad branch predictions are flushing the pipeline a lot and causing huge slowdowns. Any ideas on how to eliminate the if statement marked "Problem if statement" in the code below would be great. I'm stuck in a rut.

    for (PListType i = 0; i < prevLocalPListArray->size(); i++)
    {
        vector<vector<PListType>*> newPList(256, NULL);
        vector<PListType>* pList = (*prevLocalPListArray)[i];
        PListType pListLength = (*prevLocalPListArray)[i]->size();
        // Integer ceiling of pListLength / 256; ceil() applied to an
        // integer quotient has no effect because the division truncates first
        PListType earlyApproximation = (pListLength + 255) / 256;

        for (PListType k = 0; k < pListLength; k++)
        {
            //If pattern is past end of string stream then stop counting this pattern
            if ((*pList)[k] < file->fileStringSize)
            {
                uint8_t indexer = ((uint8_t)file->fileString[(*pList)[k]]);
                if (newPList[indexer] != NULL) //Problem if statement!!!!!!!!!!!!!!!!!!!!!
                {
                    newPList[indexer]->push_back(++(*pList)[k]);
                }
                else
                {
                    newPList[indexer] = new vector<PListType>(1, ++(*pList)[k]);
                    newPList[indexer]->reserve(earlyApproximation);
                }
            }
        }

        //Deallocate or stuff patterns in global list
        for (size_t z = 0; z < newPList.size(); z++)
        {
            if (newPList[z] != NULL)
            {
                if (newPList[z]->size() >= minOccurrence)
                {
                    globalLocalPListArray->push_back(newPList[z]);
                }
                else
                {
                    delete newPList[z];
                }
            }
        }

        delete (*prevLocalPListArray)[i];
    }

Here is the code without indirection, with the proposed changes applied:

    vector<vector<PListType>> newPList(256);

    for (PListType i = 0; i < prevLocalPListArray.size(); i++)
    {
        const vector<PListType>& pList = prevLocalPListArray[i];
        PListType pListLength = prevLocalPListArray[i].size();

        for (PListType k = 0; k < pListLength; k++)
        {
            //If pattern is past end of string stream then stop counting this pattern
            if (pList[k] < file->fileStringSize)
            {
                uint8_t indexer = ((uint8_t)file->fileString[pList[k]]);
                newPList[indexer].push_back((pList[k] + 1));
            }
            else
            {
                totalTallyRemovedPatterns++;
            }
        }

        for (int z = 0; z < 256; z++)
        {
            if (newPList[z].size() >= minOccurrence/* || (Forest::outlierScans && pList->size() == 1)*/)
            {
                globalLocalPListArray.push_back(newPList[z]);
            }
            else
            {
                totalTallyRemovedPatterns++;
            }
            newPList[z].clear();
        }

        //Swap with an empty vector to release this list's memory
        vector<PListType> temp;
        temp.swap(prevLocalPListArray[i]);
    }
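For anyone who wants to reproduce the inner loop outside of my program, here is a minimal self-contained sketch of just the bucketing step. The `PListType` alias, the `std::string` standing in for `file->fileString`, and the function name `bucketByNextByte` are assumptions made for illustration; they are not the real definitions from my code.

```cpp
#include <cstdint>
#include <string>
#include <vector>

using PListType = std::uint64_t;  // assumption: stand-in for the real typedef

// Extend every pattern position in pList by one byte and bucket the new
// positions by the byte value found at the old position. Positions at or
// past the end of the data are dropped and counted in removed.
std::vector<std::vector<PListType>> bucketByNextByte(
    const std::string& data,              // hypothetical stand-in for file->fileString
    const std::vector<PListType>& pList,
    PListType& removed)
{
    std::vector<std::vector<PListType>> buckets(256);
    for (PListType pos : pList) {
        if (pos < data.size()) {
            std::uint8_t indexer = static_cast<std::uint8_t>(data[pos]);
            buckets[indexer].push_back(pos + 1);  // no NULL check needed
        } else {
            ++removed;
        }
    }
    return buckets;
}
```

Because the 256 buckets are plain vectors rather than pointers, an empty bucket is simply a vector of size 0, so the check for a missing pattern disappears from the hot loop. The bounds check against the file size is still there.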

Here is the most up-to-date program. It manages to avoid using 3 times the memory and does not require the problem if statement. The only remaining bottleneck seems to be the newPList[indexIntoFile].push_back(++index); statement. This bottleneck could be a cache locality issue when indexing the array, because the patterns are random. When I search a binary file containing only 1s and 0s, the push_back statement shows no such latency. That is why I believe it is cache thrashing. Do you guys see any room for optimization in this code still? You guys have been a great help so far. @bogdan @harold

    vector<PListType> newPList[256];
    PListType prevPListSize = prevLocalPListArray->size();
    PListType indexes[256] = {0};
    PListType indexesToPush[256] = {0};

    for (PListType i = 0; i < prevPListSize; i++)
    {
        vector<PListType>* pList = (*prevLocalPListArray)[i];
        PListType pListLength = (*prevLocalPListArray)[i]->size();

        if (pListLength > 1)
        {
            for (PListType k = 0; k < pListLength; k++)
            {
                //If pattern is past end of string stream then stop counting this pattern
                PListType index = (*pList)[k];
                if (index < file->fileStringSize)
                {
                    uint_fast8_t indexIntoFile = (uint8_t)file->fileString[index];
                    newPList[indexIntoFile].push_back(++index);
                    indexes[indexIntoFile]++;
                }
                else
                {
                    totalTallyRemovedPatterns++;
                }
            }

            //Collect only the byte values that actually occurred
            int listLength = 0;
            for (PListType k = 0; k < 256; k++)
            {
                if (indexes[k])
                {
                    indexesToPush[listLength++] = k;
                }
            }

            for (PListType k = 0; k < listLength; k++)
            {
                PListType insert = indexes[indexesToPush[k]];
                if (insert >= minOccurrence)
                {
                    PListType index = globalLocalPListArray->size();
                    globalLocalPListArray->push_back(new vector<PListType>());
                    (*globalLocalPListArray)[index]->insert((*globalLocalPListArray)[index]->end(), newPList[indexesToPush[k]].begin(), newPList[indexesToPush[k]].end());
                    indexes[indexesToPush[k]] = 0;
                    newPList[indexesToPush[k]].clear();
                }
                else
                {
                    //Plain else rather than else if (insert == 1): with minOccurrence > 2,
                    //counts between 2 and minOccurrence - 1 would otherwise never be reset
                    totalTallyRemovedPatterns++;
                    indexes[indexesToPush[k]] = 0;
                    newPList[indexesToPush[k]].clear();
                }
            }
        }
        else
        {
            totalTallyRemovedPatterns++;
        }

        delete (*prevLocalPListArray)[i];
    }

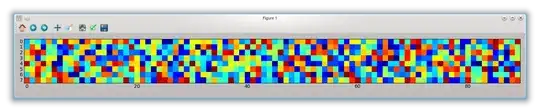

Here are the benchmarks. I didn't think they would be readable in the comments, so I am placing them in the answer category. The percentages on the left show how much of the total time is spent on each line of code.
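One variation that might be worth trying against the push_back hot spot is a two-pass counting version: count how many positions land in each bucket first, size every bucket exactly once, then fill the slots. This is only a sketch and I have not measured it. `PListType`, the `std::string` input, and the name `bucketTwoPass` are hypothetical stand-ins, and it trades the push_back growth checks for a second read of the file bytes, so it may not help if the random file reads themselves are what miss the cache.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

using PListType = std::uint64_t;  // assumption: stand-in for the real typedef

// Two-pass bucketing: count first, then size each bucket exactly and fill it.
std::array<std::vector<PListType>, 256> bucketTwoPass(
    const std::string& data,              // hypothetical stand-in for file->fileString
    const std::vector<PListType>& pList)
{
    // Pass 1: histogram of the byte found at each in-range position.
    std::array<std::size_t, 256> counts{};
    for (PListType pos : pList) {
        if (pos < data.size()) {
            ++counts[static_cast<std::uint8_t>(data[pos])];
        }
    }

    // Allocate every bucket once, at its final size.
    std::array<std::vector<PListType>, 256> buckets;
    for (std::size_t b = 0; b < 256; ++b) {
        buckets[b].resize(counts[b]);
    }

    // Pass 2: write each extended position into its preallocated slot.
    std::array<std::size_t, 256> next{};
    for (PListType pos : pList) {
        if (pos < data.size()) {
            std::uint8_t b = static_cast<std::uint8_t>(data[pos]);
            buckets[b][next[b]++] = pos + 1;
        }
    }
    return buckets;
}
```

The bucket sizes from the first pass also give the occurrence counts directly, so the comparison against minOccurrence could be made before any bucket is allocated at all.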