I came across the problem below, which involves matrix manipulation.

Problem statement

There is an N x N matrix divided into N * N cells. Each cell has a predefined value, which is given as input. The process below is repeated K times, where K is also given in the test input. At each iteration we have to pick the row or column with the minimum sum. The final output is the cumulative sum of the minimum values saved at each iteration.

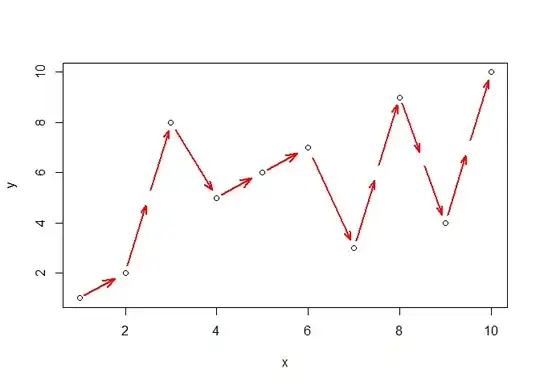

Step 1. Sum each row and each column, and find the minimum among all the row sums and column sums (it can be either a row or a column; only the minimum matters).

Step 2. Store the sum found above separately

Step 3. Increment each element of the minimum-sum row or column by 1.

Repeat steps 1, 2 and 3 K times.

Add up the sums stored at each iteration (step 2).

The output is the total accumulated after the Kth iteration.
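For reference, the steps above can be sketched in Java roughly as follows. This is a minimal sketch, not a verified solution to the original problem. The class name `MinSumIteration` and the method `cumulativeMinSum` are hypothetical names chosen for illustration. The tie-breaking rule (lowest index first, and a row preferred over a column when the sums are equal) is an assumption, since the steps do not say how ties are resolved. Instead of re-summing the matrix every time, it keeps running row and column sums in `long[]` arrays: incrementing a row adds N to that row's sum and 1 to every column's sum, and vice versa.

```java
class MinSumIteration {

    // Returns the cumulative sum of the minimum row/column sum over k iterations.
    // Assumption: ties go to the lowest index, and to a row over a column.
    static long cumulativeMinSum(int[][] matrix, int k) {
        int n = matrix.length;
        long[] rowSum = new long[n];
        long[] colSum = new long[n];
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                rowSum[i] += matrix[i][j];
                colSum[j] += matrix[i][j];
            }
        }

        long total = 0;
        for (int iter = 0; iter < k; iter++) {
            // Step 1: locate the minimum row sum and the minimum column sum.
            int bestRow = 0;
            int bestCol = 0;
            for (int i = 1; i < n; i++) {
                if (rowSum[i] < rowSum[bestRow]) bestRow = i;
                if (colSum[i] < colSum[bestCol]) bestCol = i;
            }

            if (rowSum[bestRow] <= colSum[bestCol]) {
                total += rowSum[bestRow];          // Step 2: store the minimum
                rowSum[bestRow] += n;              // Step 3: n cells in the row grow by 1
                for (int j = 0; j < n; j++) colSum[j]++;   // each column gains 1
            } else {
                total += colSum[bestCol];          // Step 2: store the minimum
                colSum[bestCol] += n;              // Step 3: n cells in the column grow by 1
                for (int i = 0; i < n; i++) rowSum[i]++;   // each row gains 1
            }
        }
        return total;
    }

    public static void main(String[] args) {
        int[][] sample = {{1, 3}, {2, 4}};
        System.out.println(cumulativeMinSum(sample, 4)); // prints 22
    }
}
```

With the sample matrix and 4 iterations, the stored minimums are 3, 5, 6 and 8, which add up to 22. Each iteration costs O(N) here rather than O(N²), and the sums are held in `long` so that large N and K do not overflow an `int`.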

Sample data

2 4

1 3

2 4

Output data

22

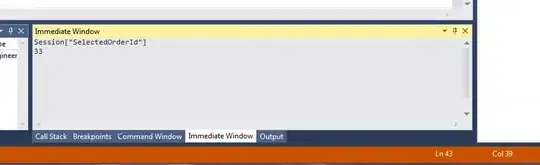

I was able to write code for this in Java and tested it against some sample test cases, where the output was correct. The code works for sample matrices of lower order, such as 2x2, 4x4, and even up to 44x40 (which has fewer iterations). However, when the matrix size is increased to 100x100 (with many more iterations), the actual output differs from the expected output in the tens and hundreds digits, and the difference looks random: I cannot find any pattern relating the input to the incorrect output. Stepping through something like the 500th iteration by hand to identify the issue is taking a toll on me. Is there a better way or approach to solve this kind of problem on a large matrix? Has anyone come across a similar issue and solved it?

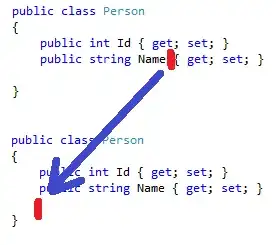

I am mainly interested in knowing the correct approach to this matrix problem, and which data structure to use in Java. At present I am using primitive types and arrays (int[] or long[]). I would appreciate any help in this regard.