As part of self-learning, I am exploring Oozie and practicing on the Hortonworks Sandbox VM. The problem is that my Oozie workflow hits an error and gets killed, even though the underlying job (reached through the link in the Oozie UI) shows success.

I have looked at this question and, following it, have included

`<job-xml>hive-site.xml</job-xml>`

in the workflow definition. I have also copied hive-site.xml to the correct folder on HDFS, but to no avail. Additionally, I have double-checked all URLs, and they are correct.
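For reference, here is a minimal sketch of where `<job-xml>` sits inside an Oozie Hive action. It is not copied from my workflow: the schema version `uri:oozie:hive-action:0.2`, the `${jobTracker}`/`${nameNode}` properties, the script name `create_table.q` and the `end`/`fail` node names are placeholders for illustration; only the action name `define_congress_table` comes from the log path below. As far as I understand, a relative `job-xml` path is resolved against the workflow application directory on HDFS, so `hive-site.xml` would need to sit next to `workflow.xml`.

```xml
<!-- Hypothetical Hive action: everything except the action name is a placeholder -->
<action name="define_congress_table">
    <hive xmlns="uri:oozie:hive-action:0.2">
        <job-tracker>${jobTracker}</job-tracker>
        <name-node>${nameNode}</name-node>
        <!-- Relative path, assumed to resolve against the workflow app directory on HDFS -->
        <job-xml>hive-site.xml</job-xml>
        <script>create_table.q</script>
    </hive>
    <ok to="end"/>
    <error to="fail"/>
</action>
```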

I am running the Oozie job from the command line. I have no idea where to start debugging or how to get a more detailed error message. Here are the screenshots:

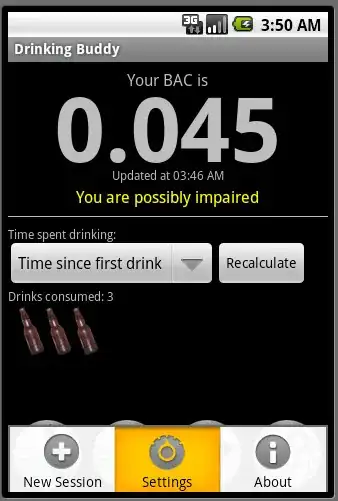

Oozie Error

Underlying job: the Hive job indicates successful completion.

However, I do not see the resulting Hive table that the workflow is supposed to create.
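To get a more specific error than the generic "killed" status, a common approach is to have the workflow's kill node print the failing action's error message through the Oozie EL functions `wf:errorMessage()` and `wf:lastErrorNode()`. A minimal sketch, assuming the kill node is named `fail` (hypothetical); when the workflow is killed, this message should show up as the workflow's error message in the Oozie UI.

```xml
<!-- Hypothetical kill node: reports the error message of the last failed action -->
<kill name="fail">
    <message>Hive action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
</kill>
```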

Below is the log output of the launcher's map task:

<<< Invocation of Hive command completed <<<

Hadoop Job IDs executed by Hive:

Intercepting System.exit(12)

<<< Invocation of Main class completed <<<

Failing Oozie Launcher, Main class [org.apache.oozie.action.hadoop.HiveMain], exit code [12]

Oozie Launcher failed, finishing Hadoop job gracefully

Oozie Launcher, uploading action data to HDFS sequence file: hdfs://sandbox.hortonworks.com:8020/user/root/oozie-oozi/0000005-160711211729704-oozie-oozi-W/define_congress_table--hive/action-data.seq

2016-07-12 05:30:57,817 INFO [main] zlib.ZlibFactory (ZlibFactory.java:<clinit>(49)) - Successfully loaded & initialized native-zlib library

2016-07-12 05:30:57,818 INFO [main] compress.CodecPool (CodecPool.java:getCompressor(153)) - Got brand-new compressor [.deflate]

Oozie Launcher ends

2016-07-12 05:30:57,836 INFO [main] mapred.Task (Task.java:done(1038)) - Task:attempt_1468271868299_0037_m_000000_0 is done. And is in the process of committing

2016-07-12 05:30:57,878 INFO [main] mapred.Task (Task.java:commit(1199)) - Task attempt_1468271868299_0037_m_000000_0 is allowed to commit now

2016-07-12 05:30:57,887 INFO [main] output.FileOutputCommitter (FileOutputCommitter.java:commitTask(582)) - Saved output of task 'attempt_1468271868299_0037_m_000000_0' to hdfs://sandbox.hortonworks.com:8020/user/root/oozie-oozi/0000005-160711211729704-oozie-oozi-W/define_congress_table--hive/output/_temporary/1/task_1468271868299_0037_m_000000

2016-07-12 05:30:57,936 INFO [main] mapred.Task (Task.java:sendDone(1158)) - Task 'attempt_1468271868299_0037_m_000000_0' done.

Log Type: syslog

Log Upload Time: Tue Jul 12 05:31:05 +0000 2016

Log Length: 2781

2016-07-12 05:30:48,083 WARN [main] org.apache.hadoop.metrics2.impl.MetricsConfig: Cannot locate configuration: tried hadoop-metrics2-maptask.properties,hadoop-metrics2.properties

2016-07-12 05:30:48,151 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).

2016-07-12 05:30:48,152 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: MapTask metrics system started

2016-07-12 05:30:48,163 INFO [main] org.apache.hadoop.mapred.YarnChild: Executing with tokens:

2016-07-12 05:30:48,163 INFO [main] org.apache.hadoop.mapred.YarnChild: Kind: mapreduce.job, Service: job_1468271868299_0037, Ident: (org.apache.hadoop.mapreduce.security.token.JobTokenIdentifier@1fbe7534)

2016-07-12 05:30:48,212 INFO [main] org.apache.hadoop.mapred.YarnChild: Kind: RM_DELEGATION_TOKEN, Service: 10.0.2.15:8050, Ident: (owner=root, renewer=oozie mr token, realUser=oozie, issueDate=1468301434802, maxDate=1468906234802, sequenceNumber=22, masterKeyId=90)

2016-07-12 05:30:48,257 INFO [main] org.apache.hadoop.mapred.YarnChild: Sleeping for 0ms before retrying again. Got null now.

2016-07-12 05:30:48,496 INFO [main] org.apache.hadoop.mapred.YarnChild: mapreduce.cluster.local.dir for child: /hadoop/yarn/local/usercache/root/appcache/application_1468271868299_0037

2016-07-12 05:30:48,955 INFO [main] org.apache.hadoop.conf.Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

2016-07-12 05:30:49,414 INFO [main] org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter: File Output Committer Algorithm version is 1

2016-07-12 05:30:49,414 INFO [main] org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2016-07-12 05:30:49,423 INFO [main] org.apache.hadoop.mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2016-07-12 05:30:49,475 WARN [main] org.apache.hadoop.yarn.util.ProcfsBasedProcessTree: Unexpected: procfs stat file is not in the expected format for process with pid 4558

2016-07-12 05:30:49,647 INFO [main] org.apache.hadoop.mapred.MapTask: Processing split: org.apache.oozie.action.hadoop.OozieLauncherInputFormat$EmptySplit@1f16b6e6

2016-07-12 05:30:49,654 INFO [main] org.apache.hadoop.mapred.MapTask: numReduceTasks: 0

2016-07-12 05:30:49,700 INFO [main] org.apache.hadoop.conf.Configuration.deprecation: mapred.job.id is deprecated. Instead, use mapreduce.job.id

2016-07-12 05:30:50,069 INFO [main] org.apache.hadoop.conf.Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name

2016-07-12 05:30:50,253 INFO [main] org.apache.hadoop.yarn.client.RMProxy: Connecting to ResourceManager at sandbox.hortonworks.com/10.0.2.15:8050