Consider the case where Spark is not lazy.

For example, suppose we have a 1 GB file in HDFS that is to be loaded into memory.

We have operations like:

rdd1 = load file from HDFS

rdd1.println(line1)

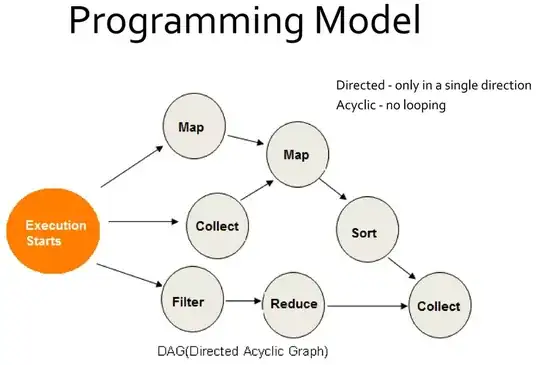

In this case, as soon as the first line is executed, an entry is made in the DAG and the whole 1 GB file is loaded into memory. The disaster shows up at the second line: just to print the first line of the file, the entire 1 GB file has been loaded into memory.

Now consider the case where Spark is lazy.

rdd1 = load file from HDFS

rdd1.println(line1)

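In this case, executing the first line only makes an entry in the DAG; no data is read yet. When the second line asks for a result, the entire execution plan is already known, so Spark can optimize internally. Instead of loading the entire 1 GB file, it reads only as much of the file as it needs to return the first line, and prints it.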

This avoids unnecessary computation and makes room for optimization.
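The difference between the two cases can be imitated in plain Python, without Spark. This is only an analogy, a minimal sketch under stated assumptions: `make_source` is a hypothetical stand-in for the HDFS file, and a counter records how many lines were actually read. In PySpark the corresponding calls would be roughly `sc.textFile(path)` followed by `rdd.first()`.

```python
def make_source(num_lines, counter):
    """Hypothetical stand-in for the file: yields lines one at a time
    and counts how many lines were actually read."""
    for i in range(num_lines):
        counter["read"] += 1
        yield f"line{i + 1}"

NUM_LINES = 100_000  # assumed size, stands in for the 1 GB file

# Not lazy: the whole "file" is materialized as soon as it is defined.
eager_counter = {"read": 0}
eager_data = list(make_source(NUM_LINES, eager_counter))
eager_first = eager_data[0]

# Lazy: defining the source reads nothing; work starts only on demand.
lazy_counter = {"read": 0}
lazy_data = make_source(NUM_LINES, lazy_counter)
reads_before_action = lazy_counter["read"]
lazy_first = next(lazy_data)  # ask for the first line only

print("eager:", eager_first, "lines read =", eager_counter["read"])
print("lazy: ", lazy_first, "lines read =", lazy_counter["read"])
```

Both versions return the same first line, but the eager one reads every line to get it, while the lazy one reads nothing at definition time and a single line when the result is requested.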