I am trying to write an example of a Sobel filter that uses CUDA streams. Here is the program:

void SobelStream(void)

{

cv::Mat imageGrayL2 = cv::imread("/home/xavier/Bureau/Image1.png",0);

u_int8_t *u8_PtImageHost;

u_int8_t *u8_PtImageDevice;

u_int8_t *u8_ptDataOutHost;

u_int8_t *u8_ptDataOutDevice;

u_int8_t u8_Used[NB_STREAM];

u8_ptDataOutHost = (u_int8_t *)malloc(WIDTH*HEIGHT*sizeof(u_int8_t));

checkCudaErrors(cudaMalloc((void**)&u8_ptDataOutDevice,WIDTH*HEIGHT*sizeof(u_int8_t)));

u8_PtImageHost = (u_int8_t *)malloc(WIDTH*HEIGHT*sizeof(u_int8_t));

checkCudaErrors(cudaMalloc((void**)&u8_PtImageDevice,WIDTH*HEIGHT*sizeof(u_int8_t)));

cudaChannelFormatDesc channelDesc = cudaCreateChannelDesc<unsigned char>();

checkCudaErrors(cudaMallocArray(&Array_PatchsMaxDevice, &channelDesc,WIDTH,HEIGHT ));

checkCudaErrors(cudaBindTextureToArray(Image,Array_PatchsMaxDevice));

dim3 threads(BLOC_X,BLOC_Y);

dim3 blocks(ceil((float)WIDTH/BLOC_X),ceil((float)HEIGHT/BLOC_Y));

ClearKernel<<<blocks,threads>>>(u8_ptDataOutDevice,WIDTH,HEIGHT);

int blockh = HEIGHT/NB_STREAM;

Stream = (cudaStream_t *) malloc(NB_STREAM * sizeof(cudaStream_t));

for (int i = 0; i < NB_STREAM; i++)

{

checkCudaErrors(cudaStreamCreate(&(Stream[i])));

}

// for(int i=0;i<NB_STREAM;i++)

// {

// cudaSetDevice(0);

// cudaStreamCreate(&Stream[i]);

// }

cudaEvent_t Start;

cudaEvent_t Stop;

cudaEventCreate(&Start);

cudaEventCreate(&Stop);

cudaEventRecord(Start, 0);

//////////////////////////////////////////////////////////

for(int i=0;i<NB_STREAM;i++)

{

if(i == 0)

{

int localHeight = blockh;

checkCudaErrors(cudaMemcpy2DToArrayAsync( Array_PatchsMaxDevice,

0,

0,

imageGrayL2.data,//u8_PtImageDevice,

WIDTH,

WIDTH,

blockh,

cudaMemcpyHostToDevice ,

Stream[i]));

dim3 threads(BLOC_X,BLOC_Y);

dim3 blocks(ceil((float)WIDTH/BLOC_X),ceil((float)localHeight/BLOC_Y));

SobelKernel<<<blocks,threads,0,Stream[i]>>>(u8_ptDataOutDevice,0,WIDTH,localHeight-1);

checkCudaErrors(cudaGetLastError());

u8_Used[i] = 1;

}else{

int ioffsetImage = WIDTH*(HEIGHT/NB_STREAM );

int hoffset = HEIGHT/NB_STREAM *i;

int hoffsetkernel = HEIGHT/NB_STREAM -1 + HEIGHT/NB_STREAM* (i-1);

int localHeight = min(HEIGHT - (blockh*i),blockh);

//printf("hoffset: %d hoffsetkernel %d localHeight %d rest %d ioffsetImage %d \n",hoffset,hoffsetkernel,localHeight,HEIGHT - (blockh +1 +blockh*(i-1)),ioffsetImage*i/WIDTH);

checkCudaErrors(cudaMemcpy2DToArrayAsync( Array_PatchsMaxDevice,

0,

hoffset,

&imageGrayL2.data[ioffsetImage*i],//&u8_PtImageDevice[ioffset*i],

WIDTH,

WIDTH,

localHeight,

cudaMemcpyHostToDevice ,

Stream[i]));

u8_Used[i] = 1;

if(HEIGHT - (blockh +1 +blockh*(i-1))<=0)

{

break;

}

}

}

///////////////////////////////////////////

for(int i=0;i<NB_STREAM;i++)

{

if(i == 0)

{

int localHeight = blockh;

dim3 threads(BLOC_X,BLOC_Y);

dim3 blocks(1,1);

SobelKernel<<<blocks,threads,0,Stream[i]>>>(u8_ptDataOutDevice,0,WIDTH,localHeight-1);

checkCudaErrors(cudaGetLastError());

u8_Used[i] = 1;

}else{

int ioffsetImage = WIDTH*(HEIGHT/NB_STREAM );

int hoffset = HEIGHT/NB_STREAM *i;

int hoffsetkernel = HEIGHT/NB_STREAM -1 + HEIGHT/NB_STREAM* (i-1);

int localHeight = min(HEIGHT - (blockh*i),blockh);

dim3 threads(BLOC_X,BLOC_Y);

dim3 blocks(1,1);

SobelKernel<<<blocks,threads,0,Stream[i]>>>(u8_ptDataOutDevice,hoffsetkernel,WIDTH,localHeight);

checkCudaErrors(cudaGetLastError());

u8_Used[i] = 1;

if(HEIGHT - (blockh +1 +blockh*(i-1))<=0)

{

break;

}

}

}

///////////////////////////////////////////////////////

for(int i=0;i<NB_STREAM;i++)

{

if(i == 0)

{

int localHeight = blockh;

checkCudaErrors(cudaMemcpyAsync(u8_ptDataOutHost,u8_ptDataOutDevice,WIDTH*(localHeight-1)*sizeof(u_int8_t),cudaMemcpyDeviceToHost,Stream[i]));

u8_Used[i] = 1;

}else{

int ioffsetImage = WIDTH*(HEIGHT/NB_STREAM );

int hoffset = HEIGHT/NB_STREAM *i;

int hoffsetkernel = HEIGHT/NB_STREAM -1 + HEIGHT/NB_STREAM* (i-1);

int localHeight = min(HEIGHT - (blockh*i),blockh);

checkCudaErrors(cudaMemcpyAsync(&u8_ptDataOutHost[hoffsetkernel*WIDTH],&u8_ptDataOutDevice[hoffsetkernel*WIDTH],WIDTH*localHeight*sizeof(u_int8_t),cudaMemcpyDeviceToHost,Stream[i]));

u8_Used[i] = 1;

if(HEIGHT - (blockh +1 +blockh*(i-1))<=0)

{

break;

}

}

}

for(int i=0;i<NB_STREAM;i++)

{

cudaStreamSynchronize(Stream[i]);

}

cudaEventRecord(Stop, 0);

cudaEventSynchronize(Start);

cudaEventSynchronize(Stop);

float dt_ms;

cudaEventElapsedTime(&dt_ms, Start, Stop);

printf("dt_ms %f \n",dt_ms);

}
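To make the row partitioning easier to follow, here is a minimal host-only sketch of the band arithmetic used in the upload loop (`blockh`, `hoffset`, `localHeight`). The names `Band`, `splitRows` and `uncoveredRows` are hypothetical helpers introduced only for illustration; they are not part of the program above, and no CUDA call is involved.

```cpp
#include <algorithm>
#include <vector>

// One horizontal band of the image, as handed to one stream.
// (Hypothetical helper type, not part of the original program.)
struct Band {
    int firstRow;  // corresponds to hoffset in the program above
    int rows;      // corresponds to localHeight in the program above
};

// Same arithmetic as the upload loop:
//   blockh      = height / nbStream
//   hoffset     = blockh * i
//   localHeight = min(height - blockh * i, blockh)
std::vector<Band> splitRows(int height, int nbStream) {
    std::vector<Band> bands;
    const int blockh = height / nbStream;  // integer division, remainder dropped
    for (int i = 0; i < nbStream; ++i) {
        const int hoffset = blockh * i;
        const int localHeight = std::min(height - hoffset, blockh);
        bands.push_back({hoffset, localHeight});
    }
    return bands;
}

// Number of image rows that no band covers.
// This is non-zero whenever height is not a multiple of nbStream.
int uncoveredRows(int height, int nbStream) {
    int covered = 0;
    for (const Band& b : splitRows(height, nbStream)) {
        covered += b.rows;
    }
    return height - covered;
}
```

For example, with the assumed values `height = 10` and `nbStream = 4`, `splitRows` returns four bands of 2 rows starting at rows 0, 2, 4 and 6, and `uncoveredRows` returns 2. With this formula the `min` never shortens a band, so any remainder rows are simply not assigned to a stream.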

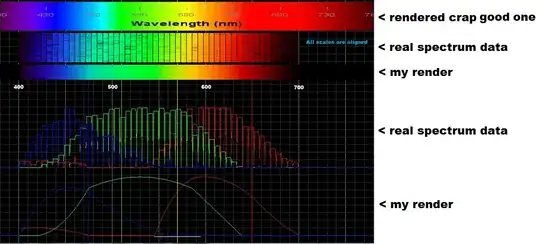

I had a really strange performance on th execution of my program. I decided to profile my example and I get that:

I don't understand it seems that each stream are waiting each other. Can someone help me about that?