I am a computer science student, and as part of my research I am working in a Hadoop environment. The person who worked on this research before me configured 9 DataNodes with a NameNode and a standby node. Our network traffic data is stored in Hive, and I am developing Hive queries to identify network attacks. That person has since left for a job elsewhere and is too busy to help, so I have a few questions:

1) How can I understand the HDFS architecture of my environment, i.e. how the machines are connected to build it? Also, how can I find out which services are installed on which machines?

2) We currently have 9 DataNodes in the environment, and my professor wants to reduce that number. Her goal is to do the research with a minimal setup of 2-3 machines. How can I reduce the cluster to that size?

3) What are good, easy sources for understanding Cloudera and Hadoop? Also, which commands can be used to explicitly start and stop a service?

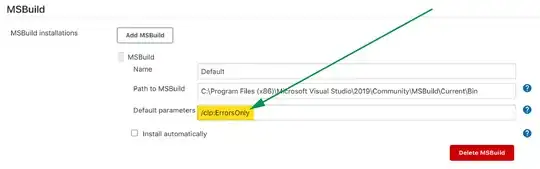

4) Right now, in Cloudera Manager, I am not able to start the Namenode server, the Secondary datanode, and one more role. I stopped all the services in order from Cloudera Manager and am now starting them again in order. HDFS comes first in that order, and when I start it, it gives a failure message for namenode, datanode, and datanode8.

I have tried several approaches with no luck. Please suggest ways to solve these issues, along with good beginner-level resources I can use to dig into this further.

Thanks.