I would have written this as a comment, but I am new to contributing after using the site for many years. Anyway, your option 1, having redundant data flows gated on whether the Checksum line exists, is probably the most straightforward approach, and since your data set's columns are limited, it may be the fastest to create and maintain. For that approach, add a Foreach Loop container and set it up to enumerate the files. Add a variable to hold whether the checksum is there. Add a C# Script Task to test for the checksum and populate the variable. Then add 2 Data Flow Tasks, one to handle the first format and the second to handle the alternative format. It would only be a duplication of 4 components (file connection, data flow, source, destination). Then in the Precedence Constraint Editor (double-click the green arrow), change the evaluation operation to Expression and Constraint, and in the Expression simply use your variable or !variable (the opposite), depending on which data flow is being fed. This assumes you are going to staging without first combining all 2000+ files in the same Data Flow Task with unions etc. There is definitely a lot of crossover with @Shiva's answer, but I don't see the need for a Conditional Split or Union All if you are going to a staging table.
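
As a rough sketch of the checksum test the Script Task would run, something like the helper below could work. The class name `ChecksumProbe` and the `"Checksum"` marker are my assumptions, since the question doesn't show exactly what the checksum line looks like or where it sits in the file, so adjust the test to match your files.

```csharp
using System;
using System.IO;
using System.Linq;

public static class ChecksumProbe
{
    // Returns true if any line of the file starts with the given marker.
    // ASSUMPTION: the checksum line begins with the text "Checksum";
    // change the marker (or the whole test) to match the real files.
    public static bool HasChecksumLine(string path, string marker = "Checksum")
    {
        // File.ReadLines streams the file lazily, so Any() stops at the first match.
        return File.ReadLines(path)
                   .Any(line => line.StartsWith(marker, StringComparison.OrdinalIgnoreCase));
    }
}
```

Inside the Script Task you would then assign the result to the package variable, e.g. `Dts.Variables["User::HasChecksum"].Value = ChecksumProbe.HasChecksumLine(filePath);`, where `User::HasChecksum` is a hypothetical Boolean variable listed under ReadWriteVariables. The two precedence constraint expressions would then be `@[User::HasChecksum]` and `!@[User::HasChecksum]`.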

On a different note, if you really want no redundancy of data flow tasks etc., there is no need to run the PowerShell script before running the SSIS package. You can create a C# script to do it right in the package. For example, I have a problem where line endings are not consistent in a data source that I must import, so I have a C# script that normalizes the line endings and saves the file before my Data Flow Task runs. You could normalize your file to one structure on the fly in the SSIS package. It will take additional system resources, as you point out, but it will happen at a time when the file is already being loaded into memory and processed.
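
To illustrate normalizing to one structure, here is a minimal sketch that copies a file to a workspace location and drops the extra line on the way. `FileNormalizer`, `StripMarkerLines`, and the `"Checksum"` marker are hypothetical names of mine, not anything from the question, and the two paths must be different files.

```csharp
using System;
using System.IO;

public static class FileNormalizer
{
    // Copies sourcePath to targetPath, skipping every line that starts with marker.
    // Returns the number of lines that were skipped.
    // ASSUMPTION: the unwanted line can be recognized by a "Checksum" prefix.
    public static int StripMarkerLines(string sourcePath, string targetPath, string marker = "Checksum")
    {
        int removed = 0;
        using (var reader = new StreamReader(sourcePath))
        using (var writer = new StreamWriter(targetPath))
        {
            string line;
            while ((line = reader.ReadLine()) != null)
            {
                if (line.StartsWith(marker, StringComparison.OrdinalIgnoreCase))
                {
                    removed++;
                    continue;
                }
                // WriteLine emits the platform line ending, so mixed endings
                // in the source come out consistent in the target as well.
                writer.WriteLine(line);
            }
        }
        return removed;
    }
}
```

Because it reads and writes line by line, the whole file never has to sit in memory, and a single Data Flow Task can then point at the normalized copy.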

@xpil, 15-ish different types wasn't in the original question. I would probably do 1 of 2 things then. First, implement your idea for #2, but do it inside an SSIS script: strip out the unwanted lines via a System.IO.File operation, then build out all of the different types and set a variable in the script as well to tell you which type it is, or fall through the different DFTs. Or I would just script the entire operation. I might or might not use SSIS, but I would use System.IO.File to load the file, detect the checksum's existence and the file type, and then simply use SqlBulkCopy to put it into the table right then; compared with creating DFTs, it would be less time-consuming to set up. Though if the files are large (a few hundred MB), you might still want to go the DFT route. Here are a few snippets from an SSIS Script Task I wrote that could help; of course, you will need to alter them for your purposes.

If you go the fix-the-file-and-DFT route:

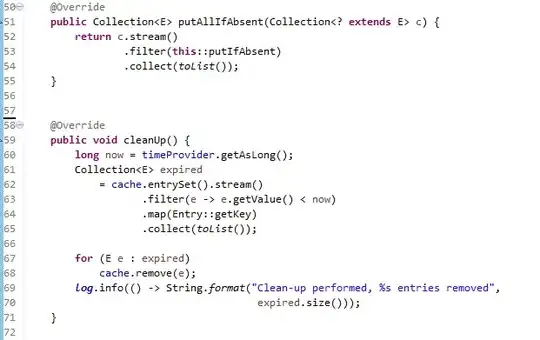

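    // Requires: using System.IO; using System.Text.RegularExpressions;
    // crlf is assumed to hold "\r\n"; it is defined elsewhere in the script.
    string contents = File.ReadAllText(_WFRFullPath);
    // Convert every \r\n, lone \r, or lone \n to the same line ending.
    contents = Regex.Replace(contents, @"\r\n?|\n", crlf);
    File.WriteAllText(_SSISWorkspaceFullPath, contents);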

This reads the file contents, fixes the line endings via regex, and writes the file to a new location.

If you go the load-via-script route, you just need to read the file into a DataTable, test the format (probably by column names or data types), and then load it.

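    // Requires: using System.Data; using System.Data.SqlClient;
    // _jobRecDT is a DataTable that has already been filled from the file.
    using (SqlConnection sqlConnection = new SqlConnection(sqlConnectionString))
    using (SqlBulkCopy bulkCopy = new SqlBulkCopy(sqlConnection))
    {
        sqlConnection.Open();
        bulkCopy.DestinationTableName = _stagingTableName;
        // Map by name so the DataTable's column order need not match the table's.
        foreach (DataColumn col in _jobRecDT.Columns)
        {
            bulkCopy.ColumnMappings.Add(col.ColumnName, col.ColumnName);
        }
        bulkCopy.WriteToServer(_jobRecDT);
    } // Disposing closes the connection, even if WriteToServer throws.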
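
The bulk copy snippet assumes `_jobRecDT` is already filled. As a hedged sketch of the "read it into a DataTable, then test the format by column names" step, something like the following could do. `FormatDetector`, `LoadDelimited`, `DetectFormat`, and the format names are all made up for illustration, and the naive `Split` does not handle quoted fields that contain the delimiter.

```csharp
using System;
using System.Collections.Generic;
using System.Data;
using System.IO;
using System.Linq;

public static class FormatDetector
{
    // Loads a simple delimited file (header row first) into a DataTable
    // whose columns are all strings. Rows with more values than the header
    // has columns will make Rows.Add throw; shorter rows are padded with nulls.
    public static DataTable LoadDelimited(string path, char delimiter = ',')
    {
        var table = new DataTable();
        using (var reader = new StreamReader(path))
        {
            string header = reader.ReadLine();
            if (header == null) return table; // empty file: no columns, no rows
            foreach (string name in header.Split(delimiter))
                table.Columns.Add(name.Trim(), typeof(string));

            string line;
            while ((line = reader.ReadLine()) != null)
            {
                if (line.Length == 0) continue; // skip blank lines
                table.Rows.Add(line.Split(delimiter));
            }
        }
        return table;
    }

    // Returns the name of a known format whose column set equals the table's
    // column set (case-insensitive, order ignored), or null if none matches.
    public static string DetectFormat(DataTable table, IDictionary<string, string[]> knownFormats)
    {
        var actual = new HashSet<string>(
            table.Columns.Cast<DataColumn>().Select(c => c.ColumnName),
            StringComparer.OrdinalIgnoreCase);
        foreach (var format in knownFormats)
        {
            if (actual.SetEquals(format.Value)) return format.Key;
        }
        return null;
    }
}
```

The detected name can then pick the staging table (or set the SSIS variable) before the table is handed to `SqlBulkCopy`.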

Note: I don't have the code for it on hand, but if you have large files, you can implement a StreamReader, read the file in chunks, and bulk copy each chunk up.
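
This is not that original code, just a minimal sketch of how the chunking could look under the same simple-delimited-file assumption. `ChunkedLoader` and `ReadChunks` are hypothetical names; the reader yields one small DataTable at a time, so only `chunkSize` rows are held in memory at once.

```csharp
using System;
using System.Collections.Generic;
using System.Data;
using System.IO;

public static class ChunkedLoader
{
    // Reads delimited text (header row first) and yields DataTables that
    // each hold at most chunkSize rows. Naive Split: no quoted-field support.
    // Note: as an iterator, this validates chunkSize on first enumeration.
    public static IEnumerable<DataTable> ReadChunks(TextReader reader, int chunkSize, char delimiter = ',')
    {
        if (chunkSize <= 0) throw new ArgumentOutOfRangeException("chunkSize");

        string header = reader.ReadLine();
        if (header == null) yield break; // empty input
        string[] columns = header.Split(delimiter);

        DataTable chunk = NewTable(columns);
        string line;
        while ((line = reader.ReadLine()) != null)
        {
            if (line.Length == 0) continue; // skip blank lines
            chunk.Rows.Add(line.Split(delimiter));
            if (chunk.Rows.Count == chunkSize)
            {
                yield return chunk;
                chunk = NewTable(columns); // start a fresh table for the next chunk
            }
        }
        if (chunk.Rows.Count > 0) yield return chunk; // final partial chunk
    }

    // Builds an empty table with one string column per header name.
    private static DataTable NewTable(string[] columns)
    {
        var table = new DataTable();
        foreach (string name in columns) table.Columns.Add(name.Trim(), typeof(string));
        return table;
    }
}
```

To use it, open a `StreamReader` on the file, loop `foreach (DataTable chunk in ChunkedLoader.ReadChunks(reader, 50000))`, and call `bulkCopy.WriteToServer(chunk)` inside the loop with the same connection and column mappings as in the bulk copy snippet. The 50000 is an arbitrary starting point, not a tuned value.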