I have implemented a scan algorithm in CUDA from scratch and am trying to use it on small inputs of less than 80,000 bytes.

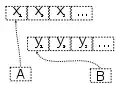

I created two versions: one launches the kernels in separate streams wherever possible, and the other runs everything in the default stream.
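To make the comparison concrete, here is a minimal sketch of the two launch variants, timed on the host. It is not my actual scan code: `dummyKernel`, the chunk count, and the chunk size are placeholders chosen only so that the total is about 80,000 bytes, and the timing includes launch overhead because the clock stops only after `cudaDeviceSynchronize()`.

```cuda
#include <chrono>
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Placeholder kernel (hypothetical, NOT the scan itself): adds 1 to each element.
__global__ void dummyKernel(int *data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1;
}

#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            fprintf(stderr, "CUDA error: %s (line %d)\n",               \
                    cudaGetErrorString(err_), __LINE__);                \
            return 1;                                                   \
        }                                                               \
    } while (0)

int main() {
    const int kNumChunks = 4;       // assumed number of independent kernels
    const int kChunkElems = 5000;   // 4 chunks * 5000 ints * 4 bytes = 80,000 bytes
    const int kTotal = kNumChunks * kChunkElems;
    const int threads = 256;
    const int blocks = (kChunkElems + threads - 1) / threads;

    int *d_data = nullptr;
    CHECK(cudaMalloc(&d_data, kTotal * sizeof(int)));
    CHECK(cudaMemset(d_data, 0, kTotal * sizeof(int)));

    cudaStream_t streams[kNumChunks];
    for (int c = 0; c < kNumChunks; ++c) CHECK(cudaStreamCreate(&streams[c]));

    // Warm-up launch so one-time initialization cost is not measured.
    dummyKernel<<<blocks, threads>>>(d_data, kChunkElems);
    CHECK(cudaDeviceSynchronize());

    using Clock = std::chrono::steady_clock;

    // Variant A: every kernel goes to the default stream (serialized).
    auto t0 = Clock::now();
    for (int c = 0; c < kNumChunks; ++c)
        dummyKernel<<<blocks, threads>>>(d_data + c * kChunkElems, kChunkElems);
    CHECK(cudaGetLastError());
    CHECK(cudaDeviceSynchronize());
    auto t1 = Clock::now();

    // Variant B: each kernel goes to its own stream (may overlap).
    for (int c = 0; c < kNumChunks; ++c)
        dummyKernel<<<blocks, threads, 0, streams[c]>>>(d_data + c * kChunkElems,
                                                        kChunkElems);
    CHECK(cudaGetLastError());
    CHECK(cudaDeviceSynchronize());
    auto t2 = Clock::now();

    // Sanity check: the last chunk was incremented once per variant.
    std::vector<int> h_data(kTotal);
    CHECK(cudaMemcpy(h_data.data(), d_data, kTotal * sizeof(int),
                     cudaMemcpyDeviceToHost));
    if (h_data[kTotal - 1] != 2) {
        fprintf(stderr, "unexpected result: %d\n", h_data[kTotal - 1]);
        return 1;
    }

    double usDefault = std::chrono::duration<double, std::micro>(t1 - t0).count();
    double usStreams = std::chrono::duration<double, std::micro>(t2 - t1).count();
    printf("default stream: %.1f us, separate streams: %.1f us\n",
           usDefault, usStreams);

    for (int c = 0; c < kNumChunks; ++c) CHECK(cudaStreamDestroy(streams[c]));
    CHECK(cudaFree(d_data));
    return 0;
}
```

A single run like this is noisy; repeating each variant many times and averaging gives a fairer comparison.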

What I've observed is that, for this range of data sizes, the stream version takes longer to complete than the default-stream version.

Profiling with the NVIDIA profiler shows that, for small data sizes, launching in streams does not make the separate kernels run in parallel.

But when the data size is increased, some degree of parallelism is obtained.

My question is: are there additional parameters that reduce these kernel launch delays, or is it normal for streams to be a disadvantage at small data sizes?

UPDATE:

I've included the Runtime API calls timeline as well to clarify the answer