I have a set of training images, each consisting of binary rectangles. I need to write a program that takes another binary image (with noise, scaling, rotation and slight shifts in the rectangle positions) and finds the closest matching image in the training set.

e.g. Input image:

Trained image: Should be matched with this

Trained image: Should not be matched with this

To my knowledge, there are 3 approaches.

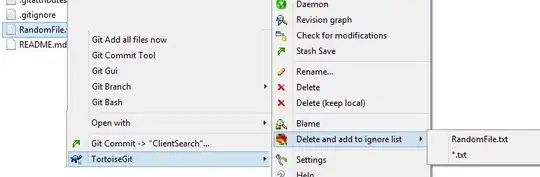

Template matching: I generate a set of scaled and rotated variants of each training image and use them as templates. The problem I ran into is that the training image with the highest score is always the scaled-down variant of the image with the most white rectangles, since a near-perfect match is found whenever that mostly white template fits inside one of the white rectangles in the input image.
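The bias can be reproduced with a toy example. This is only a minimal sketch in plain Python, not my real matching code: the helper names (`blank`, `fill_rect`, `best_match_score`), the 8x8 image and the "fraction of agreeing pixels" score are all made up for illustration.

```python
def blank(h, w):
    """Return an h x w black (all-zero) binary image as a list of rows."""
    return [[0] * w for _ in range(h)]


def fill_rect(img, top, left, h, w):
    """Paint a white (1) rectangle into img and return img."""
    for y in range(top, top + h):
        for x in range(left, left + w):
            img[y][x] = 1
    return img


def best_match_score(image, template):
    """Slide template over image; return the best fraction of agreeing pixels."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    best = 0.0
    for y in range(ih - th + 1):
        for x in range(iw - tw + 1):
            agree = sum(
                image[y + dy][x + dx] == template[dy][dx]
                for dy in range(th)
                for dx in range(tw)
            )
            best = max(best, agree / (th * tw))
    return best


# Input image: one 3x4 rectangle and one 2x2 rectangle.
image = fill_rect(fill_rect(blank(8, 8), 1, 1, 3, 4), 5, 5, 2, 2)

# "Correct" training image: same layout, first rectangle shifted by one pixel.
correct = fill_rect(fill_rect(blank(8, 8), 1, 2, 3, 4), 5, 5, 2, 2)

# Scaled-down variant of a mostly white training image: just a white 3x3 patch.
small_white = fill_rect(blank(3, 3), 0, 0, 3, 3)

correct_score = best_match_score(image, correct)
wrong_score = best_match_score(image, small_white)
print(correct_score, wrong_score)
```

The small white patch fits entirely inside the larger rectangle, so it scores a perfect match, while the correct layout loses points for the one-pixel shift.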

Feature matching: As I understand it, feature matching relies on certain pixels (or small regions of pixels) in an image being distinctive. However, since every edge or corner here looks exactly like every other edge or corner, I expect feature matching to fail in this case. (Please correct me if I am wrong.)

Manually encoding the rectangle information (e.g. orientation, position, etc.): basically creating my own descriptor for the templates and matching on that. (Is there a way to generate a descriptor for large images / templates?)
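To make the third idea more concrete, here is a rough sketch of the kind of descriptor I have in mind. It assumes the rectangles have already been extracted as axis-aligned `(x, y, w, h)` boxes, which is itself a hypothetical preprocessing step. The names `describe` and `distance` and the sample coordinates are invented for illustration. It normalises for translation and scale only; rotation is not handled, and pairing rectangles by sort order is crude.

```python
def describe(rects):
    """Normalise (x, y, w, h) rectangles by the bounding box of the whole layout.

    Returns a sorted list of (centre_x, centre_y, width, height) tuples,
    each expressed as a fraction of the layout's longer side.
    """
    x0 = min(x for x, y, w, h in rects)
    y0 = min(y for x, y, w, h in rects)
    x1 = max(x + w for x, y, w, h in rects)
    y1 = max(y + h for x, y, w, h in rects)
    span = max(x1 - x0, y1 - y0)
    return sorted(
        ((x + w / 2 - x0) / span, (y + h / 2 - y0) / span, w / span, h / span)
        for x, y, w, h in rects
    )


def distance(desc_a, desc_b):
    """Sum of absolute differences; infinite if the rectangle counts differ."""
    if len(desc_a) != len(desc_b):
        return float("inf")
    return sum(
        abs(p - q)
        for rect_a, rect_b in zip(desc_a, desc_b)
        for p, q in zip(rect_a, rect_b)
    )


# Two made-up training layouts.
training = {
    "a": [(10, 10, 40, 30), (60, 50, 20, 20)],
    "b": [(10, 10, 20, 60), (50, 20, 30, 10)],
}

# Layout "a" scaled by 2, shifted, with one rectangle nudged by 2 pixels.
query = [(25, 30, 80, 60), (127, 110, 40, 40)]

query_desc = describe(query)
best = min(training, key=lambda name: distance(query_desc, describe(training[name])))
print(best)
```

With this kind of descriptor the cost no longer depends on how much white area a template contains, but it does depend on extracting the rectangles reliably from a noisy image.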

Can anyone advise me on how to handle this?