For one of my projects, I need to repeatedly evaluate an expression involving the general hypergeometric function. While SciPy does not support the general HypGeo function, MPMath does. However, using mp.hyper(..) is very time consuming. So instead I decided to use their fast precision library function fp.hyper(..). Unfortunately the behaviour seems to be completely different. My example below:

from mpmath import mp, fp

from math import sin, cos, pi

H = 0.2

k = 2

A = 4 * sqrt(H) / (1 + 2 * H)

B = 4 * pi / (3 + 2 * H)

C = H/2 + 3/4

f_high = lambda t: (B * k * t * sin(pi * k) *

mp.hyper([1], [C+1/2, C+1], -(k*pi*t)**2) +

cos(pi * k) * mp.hyper([1], [C, C + 1/2],

-(k*pi*t)**2)) * A * t**(H + 1/2)

f_low = lambda t: (B * k * t * sin(pi * k) *

fp.hyper([1], [C+1/2, C+1], -(k*pi*t)**2) +

cos(pi * k) * fp.hyper([1], [C, C + 1/2],

-(k*pi*t)**2)) * A * t**(H + 1/2)

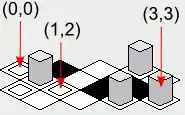

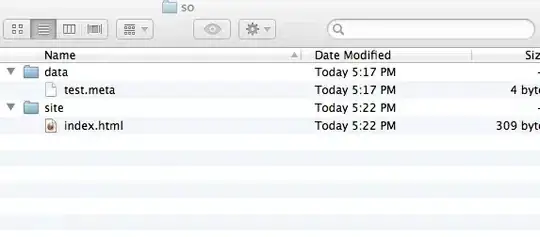

The first plot shows fp.plot(f_high,[0,1]), the second one fp.plot(f_low,[0,1]). In case anyone is wondering: The functions look ugly but one is a copy of the other, just mp replaced by fp, so there is no chance they differ in any other way.

I also plotted it in Mathematica and the picture is more like the upper one (high precision).

Looks like there is a mistake with the implementation of the fp.hyper function, right?