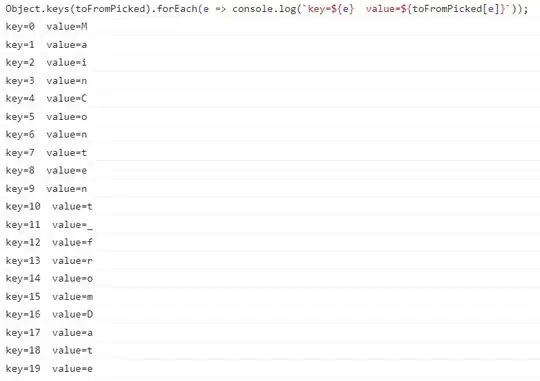

I am working on a project with audio files. I read a WAV file and parse it myself, and when I compare the parsed values with other sources everything seems fine. (FYI: the WAV file has 16 bits per sample, a 44,100 Hz sample rate and 7211 sample points.)

Since each sample is a signed 16-bit integer, I expect values in the range [-32768, +32767]. The minimum and maximum values I read are -4765 and 5190.
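For reference, here is a minimal sketch of how 16-bit PCM samples decode. It assumes signed little-endian samples, as in standard PCM WAV files, and uses a hypothetical helper `decode_pcm16` that receives only the bytes of the `data` chunk (header parsing is not shown). It is written in Python with the standard `struct` module for brevity rather than C#; in C#, `BitConverter.ToInt16` plays a similar role on little-endian machines.

```python
import struct
from typing import List


def decode_pcm16(raw: bytes) -> List[int]:
    """Decode mono 16-bit little-endian signed PCM bytes into integers.

    Hypothetical helper: `raw` is assumed to be the payload of the WAV
    'data' chunk only; header parsing is not shown.
    """
    count = len(raw) // 2  # two bytes per sample; a trailing odd byte is ignored
    return list(struct.unpack("<%dh" % count, raw[: count * 2]))


# The most negative and most positive values a signed 16-bit sample can hold.
extremes = struct.pack("<2h", -32768, 32767)
print(decode_pcm16(extremes))  # [-32768, 32767]
```

The two extremes show that a 16-bit sample spans -32768 to +32767, not ±65536.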

But when I perform the same operation in MATLAB, I get -0.07270813 and 0.079193115 respectively. This is not a big problem, because MATLAB seems to normalize the values into the range [-1, +1]; when I plot both, I get the same figure.
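A quick arithmetic check on the two pairs of numbers above: dividing each raw value by the matching MATLAB value gives the same ratio, about 65536 (2^16), so the two sets of samples appear to differ only by a constant factor. This is a sketch using only the four numbers from this post. If the MATLAB side uses `audioread`, its documentation describes 16-bit data as returned in -1.0 ≤ y < 1.0, which would correspond to a factor of 32768 rather than 65536, so the exact factor may be worth double-checking.

```python
# Values reported above: raw integers from the C# parser vs. MATLAB's output.
pairs = [(-4765, -0.07270813), (5190, 0.079193115)]

# Ratio raw / MATLAB for each pair; a constant ratio means a pure rescaling.
ratios = [raw / scaled for raw, scaled in pairs]
for (raw, scaled), r in zip(pairs, ratios):
    print(f"{raw:6d} / {scaled:.9f} = {r:.2f}")

# Dividing the raw integers by 2**16 reproduces MATLAB's numbers
# (up to the 9 significant digits printed in the post).
for raw, scaled in pairs:
    print(raw / 2**16, scaled)
```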

However, when I take the FFT in both applications (in C# I am using Lomont FFT), the results differ greatly, and I am not sure what is wrong with my code. Lomont FFT itself seems fine, but the results are inconsistent.
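One way to sanity-check such a comparison, offered as an assumption about possible causes rather than a confirmed diagnosis: the DFT is linear, so a constant input scale factor (such as the 65536 between the two sample sets) scales every output bin by the same factor. MATLAB's `fft` applies no normalization on the forward transform, while some FFT libraries divide by N or √N. The sketch below uses a naive O(N²) DFT in Python, not Lomont FFT or MATLAB, on a short made-up signal (`dft`, `raw` and `scale` are hypothetical names for illustration).

```python
import cmath
import math
from typing import List


def dft(x: List[float]) -> List[complex]:
    """Naive O(N^2) DFT using the same convention as MATLAB's fft:
    X[k] = sum_n x[n] * exp(-2j*pi*k*n/N), with no 1/N factor.
    Works for any length N, including non-powers of two such as 7211."""
    n_total = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * math.pi * k * n / n_total) for n in range(n_total))
        for k in range(n_total)
    ]


# Short synthetic integer signal (made up, apart from the two extremes in the post).
raw = [0, 1200, -3400, 5190, -4765, 800, 0, -150]
scale = 2 ** 16
normalized = [v / scale for v in raw]

X_raw = dft(raw)
X_norm = dft(normalized)

# Linearity: every bin of the normalized spectrum, times 65536, matches the raw one.
for a, b in zip(X_raw, X_norm):
    print(abs(a), abs(b) * scale)
```

Transform length matters too: MATLAB's `fft(x)` computes a DFT of length `numel(x)`, here 7211. If the C# routine only accepts power-of-two lengths, as radix-2 FFTs do, the input has to be padded or truncated, and bin k then corresponds to k·44100/N Hz for a different N, so the two spectra will not line up bin for bin.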

Is this difference normal, or should I use another algorithm for this specific operation? Can anyone suggest a better FFT implementation in C# (I have already tried NAudio, Exocortex, etc.) that gives results consistent with MATLAB's, which I assume are correct? Any other advice on this difference is also welcome.