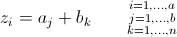

Let's say I want to estimate the camera pose for a given image $I$, and I have a set of measurements (e.g. 2D points $u_i$ and their associated 3D coordinates $P_i$) for which I want to minimize an error (e.g. the sum of squared reprojection errors).

My question is: how do I compute the uncertainty of my final pose estimate?

To make my question more concrete, consider an image $I$ from which I extracted 2D points $u_i$ and matched them with 3D points $P_i$. Denote by $T_w$ the camera pose for this image, which I want to estimate, and by $\pi_T$ the transformation mapping the 3D points to their projected 2D points. Here is a little drawing to clarify things:

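My objective is to find the pose that minimizes the sum of squared reprojection errors:

$$\hat{T}_w = \arg\min_{T_w} \sum_i \left\| u_i - \pi_T(T_w, P_i) \right\|^2$$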
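To make the objective concrete, here is a minimal Python/NumPy sketch of the residuals and the cost. It assumes a pinhole camera with a made-up intrinsic matrix `K`, and parameterizes the pose as a rotation vector plus a translation mapping world to camera coordinates. These modelling choices and all function names (`rotvec_to_matrix`, `project`, `reprojection_residuals`, `cost`) are illustrative assumptions, not part of the original setup.

```python
import numpy as np

def rotvec_to_matrix(w):
    """Rodrigues' formula: rotation vector w (3,) -> rotation matrix (3, 3)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    S = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])   # skew-symmetric matrix of the unit axis
    return np.eye(3) + np.sin(theta) * S + (1.0 - np.cos(theta)) * (S @ S)

def project(pose, P, K):
    """pi_T: project world points P (N, 3) to pixels (N, 2).

    pose = [rotation vector (3), translation (3)], mapping world to camera
    coordinates as X_c = R @ X_w + t; K is the 3x3 intrinsic matrix.
    """
    Xc = P @ rotvec_to_matrix(pose[:3]).T + pose[3:]
    x = Xc @ K.T                       # homogeneous pixel coordinates
    return x[:, :2] / x[:, 2:3]

def reprojection_residuals(pose, P, u, K):
    """Stacked residuals u_i - pi_T(P_i), shape (2N,)."""
    return (u - project(pose, P, K)).ravel()

def cost(pose, P, u, K):
    """Sum of squared reprojection errors."""
    r = reprojection_residuals(pose, P, u, K)
    return float(r @ r)

# Usage with made-up values: at the pose that generated u, the cost is zero.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
P = np.array([[0.0, 0.0, 5.0], [1.0, 0.0, 5.0], [0.0, 1.0, 6.0]])
pose = np.zeros(6)
u = project(pose, P, K)
print(cost(pose, P, u, K))  # 0.0
```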

There are several techniques to solve the corresponding non-linear least-squares problem; suppose I use the Gauss-Newton algorithm, as in the following (approximate) pseudo-code:
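For illustration, a generic Gauss-Newton loop for this problem might look like the sketch below; it is an assumption-laden example rather than a reproduction of the pseudo-code in the question. Assumptions: the same hypothetical pinhole model and rotation-vector parameterization as a plain 6-vector, a Jacobian $J_r$ computed by central finite differences instead of analytically, an additive update of that 6-vector (real implementations usually update on SE(3)), and synthetic noise-free data. The loop also returns $J_r$ evaluated at the final estimate.

```python
import numpy as np

def rotvec_to_matrix(w):
    """Rodrigues' formula: rotation vector w (3,) -> rotation matrix (3, 3)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    S = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * S + (1.0 - np.cos(theta)) * (S @ S)

def project(pose, P, K):
    """Pinhole projection of world points P (N, 3); pose = [rotvec, t], world -> camera."""
    Xc = P @ rotvec_to_matrix(pose[:3]).T + pose[3:]
    x = Xc @ K.T
    return x[:, :2] / x[:, 2:3]

def numerical_jacobian(f, x, eps=1e-6):
    """Central-difference Jacobian of f at x, shape (len(f(x)), len(x))."""
    cols = []
    for j in range(x.size):
        dx = np.zeros_like(x)
        dx[j] = eps
        cols.append((f(x + dx) - f(x - dx)) / (2.0 * eps))
    return np.stack(cols, axis=1)

def gauss_newton(f, x0, max_iters=20, tol=1e-10):
    """Minimise ||f(x)||^2 with Gauss-Newton; return the estimate and J_r at it."""
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(max_iters):
        r = f(x)
        J = numerical_jacobian(f, x)
        # Solve the normal equations (J^T J) dx = -J^T r for the update step.
        dx = np.linalg.solve(J.T @ J, -J.T @ r)
        x = x + dx
        if np.linalg.norm(dx) < tol:
            break
    return x, numerical_jacobian(f, x)

# Synthetic, noise-free example (all values are made up for illustration).
rng = np.random.default_rng(0)
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
P = rng.uniform([-1.0, -1.0, 4.0], [1.0, 1.0, 8.0], size=(20, 3))
true_pose = np.array([0.05, -0.02, 0.03, 0.1, -0.05, 0.2])
u = project(true_pose, P, K)

def residuals(pose):
    # r(T) = u_i - pi_T(P_i), stacked into shape (2N,)
    return (u - project(pose, P, K)).ravel()

pose_hat, J_r = gauss_newton(residuals, np.zeros(6))
print(np.allclose(pose_hat, true_pose, atol=1e-6))  # True
```

Finite differences keep the sketch short and model-agnostic; an analytic Jacobian of $\pi_T$ would be faster and more accurate in practice.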

I have read in several places that $J_r^\top J_r$ (or rather its inverse, $(J_r^\top J_r)^{-1}$) can be used as an estimate of the covariance matrix of the pose estimate. More specifically, my questions are:

- Can anyone explain why this is the case, or point me to a scientific document that explains it in detail?

- Should I use the value of $J_r$ from the last iteration, or should the successive $J_r^\top J_r$ matrices be combined somehow?

- Some people say that this is actually an optimistic estimate of the uncertainty. What would be a better way to estimate it?
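A common first-order recipe is sketched below under explicit assumptions: the residuals are independent, zero-mean Gaussian with a common standard deviation $\sigma$, and the model is linearized at the final estimate. Under those assumptions the covariance of the estimate is approximately $\sigma^2 (J_r^\top J_r)^{-1}$, where $J_r^\top J_r / \sigma^2$ is the information matrix. The function name `covariance_from_jacobian` is hypothetical, and the toy check uses a linear model (residual $r = y - X\beta$, so $J_r = -X$) only because its answer can be verified by hand. Note that this estimate ignores the nonlinearity of $\pi_T$ and treats the 3D points $P_i$ and the intrinsics as exact, which is one reason it can be optimistic.

```python
import numpy as np

def covariance_from_jacobian(J, r, sigma=None):
    """First-order covariance of a least-squares estimate.

    J     : Jacobian of the stacked residuals at the final estimate, shape (m, n).
    r     : residuals at the final estimate, shape (m,).
    sigma : known noise std of each residual (e.g. in pixels). If None, the
            variance is estimated from the residuals as ||r||^2 / (m - n).
    """
    m, n = J.shape
    sigma2 = float(r @ r) / (m - n) if sigma is None else float(sigma) ** 2
    # J^T J is the Gauss-Newton approximation of half the Hessian of ||r||^2.
    information = J.T @ J
    return sigma2 * np.linalg.inv(information)

# Toy check with a linear model y = a + b*t, where r = y - X @ beta and J = -X.
t = np.array([0.0, 1.0, 2.0])
y = np.array([0.0, 1.0, 1.0])
X = np.column_stack([np.ones_like(t), t])
beta = np.linalg.lstsq(X, y, rcond=None)[0]
r = y - X @ beta
cov = covariance_from_jacobian(-X, r)
print(cov * 36)               # approximately [[5, -3], [-3, 3]]
print(np.sqrt(np.diag(cov)))  # standard deviations of a and b
```

For the pose problem, `J` would be the $2N \times 6$ Jacobian at the final Gauss-Newton estimate and `sigma` the assumed pixel noise; with noise-free synthetic data the residuals are zero, so `sigma` must then be given explicitly.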

Thanks a lot; any insight on this will be appreciated.