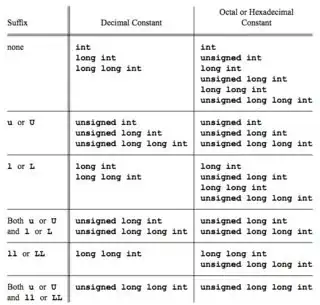

Reading Stanley Lippman's "C++ Primer", I learned that, by default, decimal integer literals are signed (the smallest of int, long, or long long in which the literal's value fits), whereas octal and hexadecimal literals can be either signed or unsigned (the smallest of int, unsigned int, long, unsigned long, long long, or unsigned long long in which the literal's value fits).

What's the reason for treating those literals differently?

Edit: To provide some context:

    int main()
    {
        auto dec = 4294967295;
        auto hex = 0xFFFFFFFF;
        return 0;
    }

Debugging the code above in Visual Studio shows that the type of dec is unsigned long and that the type of hex is unsigned int.

This contradicts what I've read. Even so, both variables hold the same value yet have different types, and that is what confuses me.
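To check the deduced types without relying on the debugger, here is a minimal sketch, assuming a C++11 compiler. The helpers `type_name` and `describe_literals` are hypothetical names of my own, not anything from the book or the standard library.

```cpp
#include <string>
#include <type_traits>

// Hypothetical helper: maps each integer type a literal can take
// to a readable name, using std::is_same from <type_traits>.
template <typename T>
std::string type_name() {
    if (std::is_same<T, int>::value) return "int";
    if (std::is_same<T, unsigned int>::value) return "unsigned int";
    if (std::is_same<T, long>::value) return "long";
    if (std::is_same<T, unsigned long>::value) return "unsigned long";
    if (std::is_same<T, long long>::value) return "long long";
    if (std::is_same<T, unsigned long long>::value) return "unsigned long long";
    return "other";
}

// Hypothetical helper: reports the types deduced for the two literals
// from the question. The result depends on the platform's type sizes.
std::string describe_literals() {
    auto dec = 4294967295;  // decimal literal, no suffix
    auto hex = 0xFFFFFFFF;  // hexadecimal literal, same value
    return "dec: " + type_name<decltype(dec)>() + "\n" +
           "hex: " + type_name<decltype(hex)>() + "\n";
}
```

Printing the result of `describe_literals()` from `main` shows what the compiler actually picked. Going by the rule quoted above, I would expect `long` and `unsigned int` where int is 32 bits and long is 64 bits, and `long long` for the decimal literal where long is only 32 bits.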