Similar question: Here

I am trying out TensorFlow. I generated simple data which is linearly separable and tried to fit a linear equation to it. Here is the code.

import numpy as np

import tensorflow as tf

np.random.seed(2010)

n = 300

x_data = np.random.random([n, 2]).tolist()

y_data = [[1., 0.] if v[0]> 0.5 else [0., 1.] for v in x_data]

x = tf.placeholder(tf.float32, [None, 2])

W = tf.Variable(tf.zeros([2, 2]))

b = tf.Variable(tf.zeros([2]))

y = tf.sigmoid(tf.matmul(x , W) + b)

y_ = tf.placeholder(tf.float32, [None, 2])

cross_entropy = -tf.reduce_sum(y_ * tf.log(tf.clip_by_value(y, 1e-9, 1)))

train_step = tf.train.AdamOptimizer(0.01).minimize(cross_entropy)

correct_predict = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))

accuracy = tf.reduce_mean(tf.cast(correct_predict, tf.float32))

s = tf.Session()

s.run(tf.initialize_all_variables())

for i in range(10):

    s.run(train_step, feed_dict = {x: x_data, y_: y_data})

    print(s.run(accuracy, feed_dict = {x: x_data, y_: y_data}))

print(s.run(accuracy, feed_dict = {x: x_data, y_: y_data}), end=",")

I get the following output:

0.536667, 0.46, 0.46, 0.46, 0.46, 0.46, 0.46, 0.46, 0.46, 0.46, 0.46

Right after the first iteration it gets stuck at 0.46.

And the following is the plot:

Then I changed the code to use gradient descent:

train_step = tf.train.GradientDescentOptimizer(0.01).minimize(cross_entropy)

Now I get the following: 0.54, 0.54, 0.63, 0.70, 0.75, 0.8, 0.84, 0.89, 0.92, 0.94, 0.94

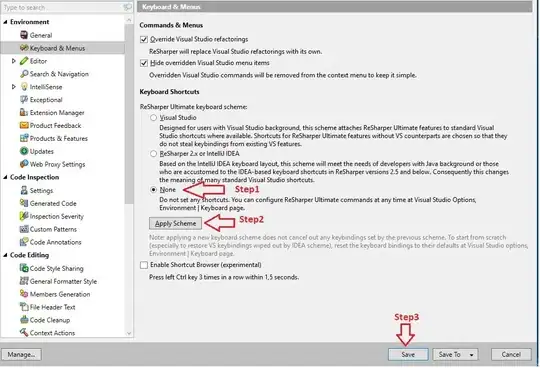

And following is the plot:

My questions:

1) Why is the AdamOptimizer failing?

2) If the issue is with the learning rate or other parameters that I need to tune, how do I debug them in general?

3) I ran gradient descent for 50 iterations (I ran for 10 above) and printed the accuracy every 5 iterations and this is the output:

0.54, 0.8, 0.95, 0.96, 0.92, 0.89, 0.87, 0.84, 0.81, 0.79, 0.77.

The accuracy clearly starts to drop after a point, so it looks like the issue is the fixed learning rate (it overshoots). Am I right?

4) In this toy example, what can be done to get a better fit? Ideally it should reach 1.0 accuracy, as the data is linearly separable.
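To make questions 3 and 4 easier to check, here is a minimal NumPy sketch of the same model. It is not the TensorFlow code above: the gradient is written out by hand, it runs in float64, and the names `run_gradient_descent`, `W_sep` and `b_sep` are my own, so the numbers need not match the outputs I posted. It logs the loss next to the accuracy every 5 steps, which should show whether the loss itself is going up when the accuracy drops, and it evaluates one hand-picked set of weights to confirm that a linear boundary can classify this data perfectly.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def accuracy(scores, targets):
    # Fraction of rows where the highest score is on the true class.
    return float(np.mean(np.argmax(scores, axis=1) == np.argmax(targets, axis=1)))


def run_gradient_descent(x, t, lr=0.01, steps=50, log_every=5):
    """Plain gradient descent on the summed, clipped cross-entropy from the question."""
    W = np.zeros((2, 2))
    b = np.zeros(2)
    history = []  # (step, loss, accuracy) tuples
    for step in range(steps + 1):
        y = sigmoid(x @ W + b)
        if step % log_every == 0:
            loss = float(-np.sum(t * np.log(np.clip(y, 1e-9, 1.0))))
            history.append((step, loss, accuracy(y, t)))
        # d(loss)/d(logits) for loss = -sum(t * log(y)) with y = sigmoid(logits).
        # Assumption: no gradient flows where the clip is active (y < 1e-9).
        dz = np.where(y < 1e-9, 0.0, -t * (1.0 - y))
        W -= lr * (x.T @ dz)
        b -= lr * dz.sum(axis=0)
    return history


# Same data recipe as in the question.
np.random.seed(2010)
n = 300
x = np.random.random([n, 2])
t = np.where(x[:, :1] > 0.5, [1.0, 0.0], [0.0, 1.0])

history = run_gradient_descent(x, t)
for step, loss, acc in history:
    print(step, round(loss, 3), round(acc, 3))

# Hand-picked weights (hypothetical, for illustration only): the two logits are
# x0 - 0.5 and 0.5 - x0, so class 0 wins exactly when x0 > 0.5.
W_sep = np.array([[1.0, -1.0], [0.0, 0.0]])
b_sep = np.array([-0.5, 0.5])
sep_acc = accuracy(x @ W_sep + b_sep, t)
print("accuracy with hand-picked weights:", sep_acc)
```

If the logged loss keeps going down while the accuracy gets worse, then overshooting from the fixed learning rate would not be the whole explanation, and the loss function itself would be the next thing to look at.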

[EDIT]

As requested by @Yaroslav, here is the code used for plots

import matplotlib.pyplot as plt

xx = [v[0] for v in x_data]

yy = [v[1] for v in x_data]

x_min, x_max = min(xx) - 0.5, max(xx) + 0.5

y_min, y_max = min(yy) - 0.5, max(yy) + 0.5

xxx, yyy = np.meshgrid(np.arange(x_min, x_max, 0.02), np.arange(y_min, y_max, 0.02))

pts = np.c_[xxx.ravel(), yyy.ravel()].tolist()

# ---> Important

z = s.run(tf.argmax(y, 1), feed_dict = {x: pts})

z = np.array(z).reshape(xxx.shape)

plt.pcolormesh(xxx, yyy, z)

plt.scatter(xx, yy, c=['r' if v[0] == 1 else 'b' for v in y_data], edgecolor='k', s=50)

plt.show()