I am trying to train my model on data that is 50 MB in size. Is there a rule or algorithm for determining the number of embedding dimensions to use?

Possible duplicate of [Word2Vec: Number of Dimensions](https://stackoverflow.com/questions/26569299/word2vec-number-of-dimensions) – Abu Shoeb Sep 01 '18 at 04:31

1 Answer

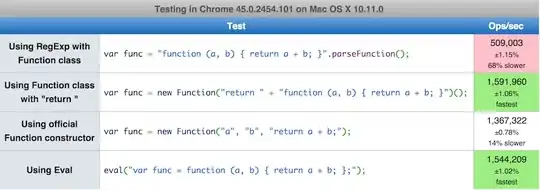

I would estimate that a 50 MB text file holds roughly 500,000 sentences, or about 5 million tokens. That is far too small to train a meaningful embedding. However, here is some empirical data (from embeddings trained on 6 billion tokens) that you could refer to.
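To make the back-of-the-envelope estimate above reproducible, here is a minimal sketch of the arithmetic. The averages of 10 bytes per token and 10 tokens per sentence are assumptions chosen only because they reproduce the figures quoted above; they are not measured values, and `estimate_corpus_size` is a hypothetical helper name. Real corpora will differ, so counting tokens in your own file is more reliable.

```python
# Rough corpus-size estimate from file size alone.
# ASSUMPTION: ~10 bytes per token (word plus whitespace/punctuation) and
# ~10 tokens per sentence. These averages are illustrative, not measured.

def estimate_corpus_size(size_bytes, bytes_per_token=10.0, tokens_per_sentence=10.0):
    """Return (estimated_tokens, estimated_sentences) for a text file."""
    tokens = size_bytes / bytes_per_token
    sentences = tokens / tokens_per_sentence
    return int(tokens), int(sentences)


FILE_SIZE_BYTES = 50 * 1000 * 1000   # 50 MB, using decimal megabytes
REFERENCE_TOKENS = 6_000_000_000     # corpus size behind the empirical data

tokens, sentences = estimate_corpus_size(FILE_SIZE_BYTES)
ratio = REFERENCE_TOKENS / tokens    # how much larger the reference corpus is

print(f"estimated tokens:    {tokens:,}")
print(f"estimated sentences: {sentences:,}")
print(f"reference corpus is about {ratio:,.0f}x larger")
```

Under these assumptions the reference corpus comes out about three orders of magnitude larger than a 50 MB file, which is why dimension choices tuned on it may not carry over to a corpus this small.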

aerin
