Situation: lots of heavy Docker containers that get hit periodically for a while, then stay unused for a longer period.

Wish: start the containers on demand (like systemd starts things through socket activation) and stop them after they have been idle for a given period. No downtime visible to the end user.

Options:

- Kubernetes has replication controllers, which can scale replicas. I suppose it would be possible to keep the number of replicas at 0 and set it to 1 when needed, but how can one achieve that? The user guide mentions something called an auto-scaling control agent, but I can't find any further information on it. Is there a pluggable, programmable agent one can use to track requests and scale based on user-defined logic?

- I don't see any solution in Docker Swarm, correct me if I'm wrong though.

- Use a custom HTTP server, written in the language of choice, that has access to the Docker daemon. Before routing a request to the right place, it would check that the target container exists and make sure it is running. Downside: not a general solution, and the server either cannot itself run in a container or must be given access to the daemon.

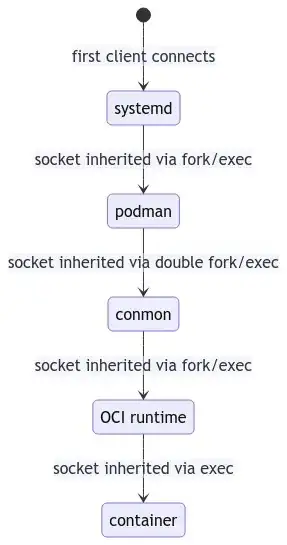

- Use systemd as described here. Same downsides as above, i.e. not general, and one has to handle the networking tasks oneself (like finding the IP of the spawned container and feeding it into the server/proxy's configuration).
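
To make the custom-server option more concrete, here is a minimal sketch of the start-on-demand / stop-when-idle bookkeeping such a server would need. It is only an illustration under assumptions: the class name `OnDemandContainer`, its methods `touch` and `reap`, and the `idle_seconds` parameter are made up for this example, and the default start/stop actions simply shell out to `docker start` and `docker stop`, which assumes the container already exists and the process can reach the daemon.

```python
import subprocess
import threading
import time


class OnDemandContainer:
    """Hypothetical helper: starts a container on first use, stops it after idling."""

    def __init__(self, name, idle_seconds, start=None, stop=None, clock=time.monotonic):
        self.name = name
        self.idle_seconds = idle_seconds
        # Assumption: by default we shell out to the docker CLI.
        # Both actions are injectable so the logic can be tested without Docker.
        self._start = start or (lambda n: subprocess.run(["docker", "start", n], check=True))
        self._stop = stop or (lambda n: subprocess.run(["docker", "stop", n], check=True))
        self._clock = clock
        self._lock = threading.Lock()
        self.running = False
        self.last_used = None

    def touch(self):
        """Call before proxying a request: start the container if it is not running."""
        with self._lock:
            if not self.running:
                self._start(self.name)
                self.running = True
            self.last_used = self._clock()

    def reap(self):
        """Call periodically: stop the container once it has been idle long enough."""
        with self._lock:
            if self.running and self._clock() - self.last_used >= self.idle_seconds:
                self._stop(self.name)
                self.running = False
                return True
            return False
```

The proxy would call `touch()` before forwarding each request and run `reap()` from a timer or background thread. This sketch does not cover the hard parts mentioned above: `docker start` returning does not mean the application inside is ready to accept connections, so the proxy would still have to hold the request until the backend responds, and it would still have to discover the container's address.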

Any ideas appreciated!