I have a beam propagation algorithm that I just implemented in ArrayFire. I was using the OpenCL backend with my GTX Titan card, where it ran at about 25,000 steps per minute. I then switched to the CUDA backend and performance went up to around 50,000 steps per minute. This was somewhat surprising, but I figured CUDA might be using some more advanced features not available in OpenCL. I then ran the same test on my GTX 960 card. It ran at about 22,000 steps per minute with OpenCL and 23,000 with CUDA. This is perplexing, since I expected it to follow the same pattern as the Titan. I also think I saw the 2x performance change on my laptop with a GT 730M. I have heard that nvidia slows down OpenCL on some cards. Do they do this for the 700 series?

-

NVIDIA doesn't slow down OpenCL on some cards. – Robert Crovella Mar 31 '16 at 01:20

-

There are too many variables in this equation. Some code needs to be shown here, and the versions of ArrayFire and CUDA being used need to be given. – Pavan Yalamanchili Mar 31 '16 at 13:45

-

Also remove the speculative question from the end of your question. In our experience, all else being equal, the performance of OpenCL and CUDA on NVIDIA GPUs (for kernels implemented by us) is pretty similar. The differences come from core libraries like clBLAS and clFFT, which are not optimized for NVIDIA GPUs, unlike cuBLAS and cuFFT. – Pavan Yalamanchili Mar 31 '16 at 13:47

-

Maybe that is it, since each step requires an FFT and an inverse FFT. – chasep255 Mar 31 '16 at 13:48

-

That makes sense. clFFT was written by AMD developers but is open and works on all OpenCL hardware. It is, however, more optimized for AMD GPUs. cuFFT (used for the CUDA backend) is closed and written by NVIDIA. They could have done optimizations that take full advantage of their hardware. – Pavan Yalamanchili Mar 31 '16 at 13:54

-

@chasep255 I'd have to vote to close the question if you don't change it to be less opinion-based and more technical. Please remove any opinion-based questions from your question. – Pavan Yalamanchili Mar 31 '16 at 13:57

-

@RobertCrovella I just realized your comment can be misunderstood. Perhaps change "some" to "any"? – Pavan Yalamanchili Apr 01 '16 at 02:44

-

I am negating a statement made in the question "I have heard that nvidia slows down OpenCL on some cards", but yes it would be clearer probably to say "any". – Robert Crovella Apr 01 '16 at 02:49

1 Answer

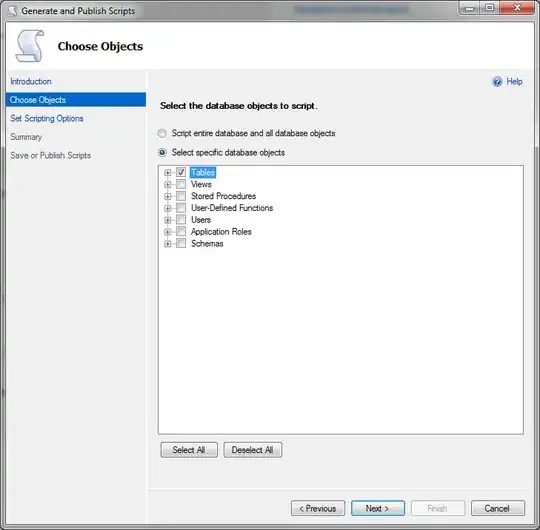

Since you did not show any code, I can only suggest a profiler. For example, I am using AMD's CodeXL, and all pictures are taken from an excessively synchronized convolution algorithm that uses a 50x50 brush area computed over a 768x768 matrix. Having lots of integers, local integer arrays, and integer operations, plus equally many floating-point operations at the end, leads to some issues (the work is balanced across 3 devices).

I'm sure NVIDIA has similar software to detect holes in your application.

- CPU time profiling per function shows the hot spots on the host side. The 5 most time-consuming functions are listed here, so you can optimize host functions. (Clicking one of the functions brings up detailed performance counters of CPU instructions per core.)

- Kernel analysis shows whether there are bottlenecks in the vector units, scalar units, memory operations, and many other places. Kernel occupancy and wavefront counts can be seen here, but there are tens of other metrics on the right-hand side.

When you click on the kernel occupancy cell, you can see the source of the bottleneck in detail, as in the picture below:

- Application timeline trace profiling exposes gaps between ndRangeKernel, read-write operations, clFinish, and other API calls, so you can see whether there are redundant synchronizations, bottlenecks in multithreaded operations, and memory leaks. (The picture below shows holes in both the vertical and horizontal directions because this example has no asynchronous operations per device and has redundant synchronizations.)

- Also, a GTX 960 cannot beat a Titan unless the workload is big enough for the 960 and small enough that the Titan is underused (or maybe it's just API overhead caused by different device handling). It could be that the Titan has 192 cores per unit (better for 384 or 768 threads per group) while the GTX 960 has 128 cores per unit (better parallelism for 256, 512, or 1024 threads per group).
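One way to read the group sizes in the last point is as exact multiples of the cores per unit. The following is a minimal sketch of that reading only, not a hardware rule: the helper `fits_evenly` and the list of candidate sizes are illustrative assumptions, and real occupancy also depends on registers, local memory, and the scheduler.

```python
# Cores per unit as quoted in the answer (192 for the Titan, 128 for the GTX 960).
CORES_PER_UNIT = {"GTX Titan": 192, "GTX 960": 128}


def fits_evenly(group_size, cores_per_unit):
    """Assumption: a group 'fits' when its size is an exact multiple of the unit width."""
    return group_size % cores_per_unit == 0


# Hypothetical candidate group sizes, taken from the ranges mentioned above.
candidate_sizes = [256, 384, 512, 768, 1024]

even_fits = {
    name: [size for size in candidate_sizes if fits_evenly(size, cores)]
    for name, cores in CORES_PER_UNIT.items()
}

for name, sizes in even_fits.items():
    print(f"{name}: group sizes that are whole multiples of the unit width: {sizes}")
```

Under this simplified reading, power-of-two group sizes never divide evenly into 192-wide units, which is one possible reason the same launch configuration could suit the two cards differently.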

25,000 iterations per minute is about 416 per second, which is roughly 2.4 milliseconds per step. In 2.4 milliseconds you can transfer only about 10-40 MB. What is the size of the buffers that are sent? Excessive clFinish calls add at least hundreds of microseconds, which is a noticeable delay when the kernel time is comparable (around 1-2 milliseconds).
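The arithmetic behind these figures can be checked with a short sketch. The bandwidth values of 4 and 16 GB/s are an assumption, chosen only because they roughly reproduce the 10-40 MB range quoted above. The function names are illustrative.

```python
def ms_per_step(steps_per_minute):
    """Convert a throughput in steps per minute into milliseconds per step."""
    return 60_000.0 / steps_per_minute


def transfer_budget_mb(time_ms, bandwidth_gb_per_s):
    """Data movable in time_ms at the given bandwidth (decimal units).

    1 GB/s equals 1 MB per millisecond, so the product is already in MB.
    """
    return time_ms * bandwidth_gb_per_s


step_ms = ms_per_step(25_000)
print(f"{25_000 / 60:.1f} steps per second, {step_ms:.2f} ms per step")

# Assumed effective host-device bandwidths in GB/s (not measured values).
for bandwidth in (4.0, 16.0):
    budget = transfer_budget_mb(step_ms, bandwidth)
    print(f"at {bandwidth:.0f} GB/s: about {budget:.1f} MB per step")
```

If the buffers moved per step are far smaller than this budget, transfer time is unlikely to be the limit, and per-call overhead such as synchronization becomes the more likely suspect.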

Increase the scale of each execution so that the Titan is loaded enough to reach its optimum (not peak) performance.

When the workload is too small, my R7 240 (320 cores) outperforms my HD 7870 (1280 cores) because the kernel occupancy ratio is higher on the R7: there are more wavefronts per compute unit and the ALUs are fed 100% (occupancy). There are also fewer compute units to prepare for computation (overhead) and fewer synchronizations in hardware (overhead). That's why there are different benchmark categories such as "performance" and "extreme". Also, newer architectures work closer to their peak performance.
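The "more wavefronts per compute unit" point can be illustrated with a rough calculation. This is a sketch under stated assumptions: both cards are treated as GCN parts with 64 ALUs per compute unit and 64 work-items per wavefront, and the workload of 4096 work-items is a hypothetical small job, not a figure from the profiled example.

```python
WAVEFRONT_SIZE = 64  # work-items per wavefront (assumed GCN value)
ALUS_PER_CU = 64     # ALUs per compute unit (assumed GCN value)


def compute_units(total_cores):
    """Number of compute units implied by the total core count."""
    return total_cores // ALUS_PER_CU


def wavefronts_per_cu(work_items, total_cores):
    """Average number of wavefronts each compute unit receives for one launch."""
    wavefronts = -(-work_items // WAVEFRONT_SIZE)  # ceiling division
    return wavefronts / compute_units(total_cores)


small_workload = 4096  # hypothetical number of work-items in a small launch

r7_240 = wavefronts_per_cu(small_workload, 320)    # 320 cores, as in the answer
hd_7870 = wavefronts_per_cu(small_workload, 1280)  # 1280 cores, as in the answer

print(f"R7 240:  {compute_units(320)} CUs, {r7_240:.1f} wavefronts per CU")
print(f"HD 7870: {compute_units(1280)} CUs, {hd_7870:.1f} wavefronts per CU")
```

With the same small launch, the smaller card gets several times more wavefronts per compute unit, which gives its scheduler more work to hide latency with.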

A GTX 960 probably cannot manage 3 instances of your ArrayFire application running at the same time (at 15,000 steps per minute each), while the Titan may manage 8-10 instances concurrently (also at 15,000 steps per minute each, for example), if you choose application-level parallelism instead of simply increasing the work size per step.

Edit: The example code actually computes a diffusion-like interaction. Equilibrium state of the area around a circle-shaped source material:

Color banding comes from rounding floating-point values to the 0-255 integer range for all color channels (RGBA, 1 byte each; I should have used floats, but there was not enough PCIe budget).
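The banding effect can be reproduced without a GPU. The sketch below is illustrative only: `quantize_to_byte` and the gradient values are assumptions, not the code behind the picture. It shows a smooth ramp of 2000 distinct float values collapsing into a handful of 8-bit levels.

```python
def quantize_to_byte(value):
    """Map a float in [0.0, 1.0] to an integer channel value in 0..255."""
    clamped = min(max(value, 0.0), 1.0)
    return int(clamped * 255 + 0.5)  # round to nearest integer level


# Hypothetical smooth gradient: 2000 samples rising slowly from 0.20 to 0.22.
samples = [0.20 + 0.02 * i / 1999 for i in range(2000)]

levels = sorted({quantize_to_byte(s) for s in samples})

print(f"{len(samples)} float samples map to {len(levels)} byte levels: {levels}")
```

Each of those few levels covers hundreds of neighbouring samples, so a slowly varying field is drawn as visible bands rather than a continuous gradient.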

-

I leave the data on the GPU between steps. I only render every 20 to 50 steps to minimize the memory transfer overhead. Also, my grid is of size 1024x1024. According to the NVIDIA X Server settings, I am using 99% of the Titan. – chasep255 Mar 31 '16 at 12:14

-

From AMD's site, on the developer page. The compute-to-transfer ratio is also important. – huseyin tugrul buyukisik Mar 31 '16 at 13:53

-

@huseyintugrulbuyukisik I meant OP's application. After re-reading your answer I realize you profiled *something*, but I am not entirely sure what. Your answer is detailed, but it probably doesn't address the issue OP is asking about. – Pavan Yalamanchili Mar 31 '16 at 19:11

-

@PavanYalamanchili Yes, I profiled something like a 50x50 convolution on a 768x768 matrix using lots of integers and local integer arrays. It's similar to taking the average of an area and adding it to the center on the next iteration. It's also a diffusion algorithm, but not a beam. – huseyin tugrul buyukisik Mar 31 '16 at 20:01

-

@huseyintugrulbuyukisik Maybe add that information to your answer for relevance? – Pavan Yalamanchili Mar 31 '16 at 20:35

-

@PavanYalamanchili Okay, included example and output. – huseyin tugrul buyukisik Mar 31 '16 at 21:32