I'm working on a project in which I have to detect traffic lights (circles, obviously). I am working with a sample image I picked up from a spot, but after all my efforts I can't get the code to detect the correct circle (the light).

Here is the code:

    # import the necessary packages
    import numpy as np
    import cv2

    image = cv2.imread('circleTestsmall.png')
    output = image.copy()

    # apply Gaussian blur to smooth the image
    blur = cv2.GaussianBlur(image, (9, 9), 0)
    gray = cv2.cvtColor(blur, cv2.COLOR_BGR2GRAY)

    # detect circles in the image
    circles = cv2.HoughCircles(gray, cv2.HOUGH_GRADIENT, 1.2, 200)

    # ensure at least some circles were found
    if circles is not None:
        # convert the (x, y) coordinates and radius of the circles to integers
        circles = np.round(circles[0, :]).astype("int")

        # loop over the (x, y) coordinates and radius of the circles
        for (x, y, r) in circles:
            # draw the circle in the output image, then draw a rectangle
            # corresponding to the center of the circle
            cv2.circle(output, (x, y), r, (0, 255, 0), 4)
            cv2.rectangle(output, (x - 5, y - 5), (x + 5, y + 5), (0, 128, 255), -1)

    # show the output image
    cv2.imshow("output", output)
    cv2.imshow('Blur', blur)
    cv2.waitKey(0)

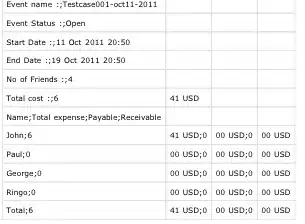

The image in which I want to detect the circle:

This is what the output image is:

I tried varying the Gaussian blur kernel size and the minDist parameter of the Hough transform, but without much success.

Can anybody point me in the right direction?

P.S. Some off-topic questions that are nonetheless crucial to my project:

1. My computer takes about 6-7 seconds to show the final image. Is my code bad, or is my computer? My specs are: Intel i3 M350 2.6 GHz (first gen), 6 GB RAM, Intel HD Graphics 1000 1625 MB.

2. Will the hough transform work on a binary thresholded image directly?

3. Will this code run fast enough on a Raspberry Pi 3 to be real-time? (I have to mount it on a moving autonomous robot.)

Thank you!