I've got a (CMSampleBufferRef)imageBuffer whose pixel format is YUV NV12 (4:2:0).

Now I run the following code and find the result confusing.

UInt8 *baseSRC = (UInt8 *)CVPixelBufferGetBaseAddress(imageBuffer);

UInt8 *yBaseSRC = (UInt8 *)CVPixelBufferGetBaseAddressOfPlane(imageBuffer, 0);

UInt8 *uvBaseSRC = (UInt8 *)CVPixelBufferGetBaseAddressOfPlane(imageBuffer, 1);

int width = (int)CVPixelBufferGetWidthOfPlane(imageBuffer, 0); //width = 480;

int height = (int)CVPixelBufferGetHeightOfPlane(imageBuffer, 0); //height = 360;

int y_base = yBaseSRC - baseSRC; //y_base = 64;

int uv_y = uvBaseSRC-yBaseSRC; //uv_y = 176640;

int delta = uv_y - width*height; //delta = 3840;

I have a few questions about this result.

1: Why isn't baseSRC equal to yBaseSRC?

2: Why isn't yBaseSRC + width*height equal to uvBaseSRC? In theory, the Y plane data is followed immediately by the UV plane data with no gap, right? But here something 3840 bytes in size sits between them, and I don't understand what it is.

3: I try to convert this pixel buffer to a cv::Mat with the following code. On most iOS devices this works properly, but not on the iPhone 4s: after conversion, the image has green lines along the side.

Mat nv12Mat(height*1.5,width,CV_8UC1,(unsigned char *)yBaseSRC);

Mat rgbMat;

cvtColor(nv12Mat, rgbMat, CV_YUV2RGB_NV12);

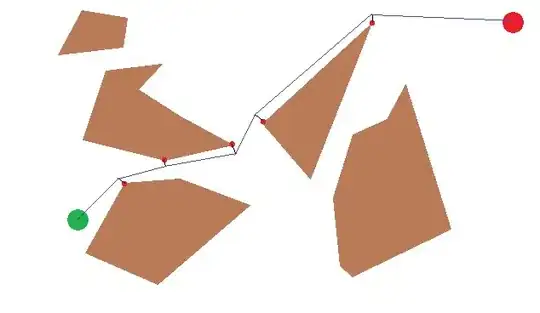

Now rgbMat looks like this:
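For reference, the numbers above satisfy 176640 = 480 × 368, so the 3840-byte gap is exactly 8 rows of 480 bytes. That would be consistent with the Y plane being allocated with padding, although that is only a guess from the arithmetic. If padding is the cause, the two planes cannot be treated as one contiguous `height*1.5 × width` block. Below is a minimal sketch of copying both planes row by row into a tightly packed buffer before handing it to `cvtColor`. `packNV12` is a hypothetical helper, not part of CoreVideo or OpenCV, and the stride parameters are assumed to come from `CVPixelBufferGetBytesPerRowOfPlane`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper (not a CoreVideo or OpenCV function): copies a biplanar
// NV12 image whose rows or planes may be padded into one tightly packed
// buffer of width * height * 3 / 2 bytes.
std::vector<uint8_t> packNV12(const uint8_t *ySrc, size_t yStride,
                              const uint8_t *uvSrc, size_t uvStride,
                              size_t width, size_t height) {
    // 4:2:0 subsampling needs even dimensions; strides must cover a full row.
    assert(width % 2 == 0 && height % 2 == 0);
    assert(yStride >= width && uvStride >= width);

    std::vector<uint8_t> packed(width * height * 3 / 2);
    uint8_t *dst = packed.data();

    // Luma plane: `height` rows, `width` useful bytes per row.
    for (size_t row = 0; row < height; ++row) {
        std::memcpy(dst, ySrc + row * yStride, width);
        dst += width;
    }

    // Interleaved CbCr plane: height / 2 rows, `width` useful bytes per row
    // (width / 2 pairs of Cb and Cr).
    for (size_t row = 0; row < height / 2; ++row) {
        std::memcpy(dst, uvSrc + row * uvStride, width);
        dst += width;
    }
    return packed;
}
```

With the buffer locked via `CVPixelBufferLockBaseAddress`, the result could then back the matrix as `Mat nv12Mat(height * 3 / 2, width, CV_8UC1, packed.data());`, assuming `yStride` and `uvStride` are the values reported by `CVPixelBufferGetBytesPerRowOfPlane(imageBuffer, 0)` and `(imageBuffer, 1)`.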