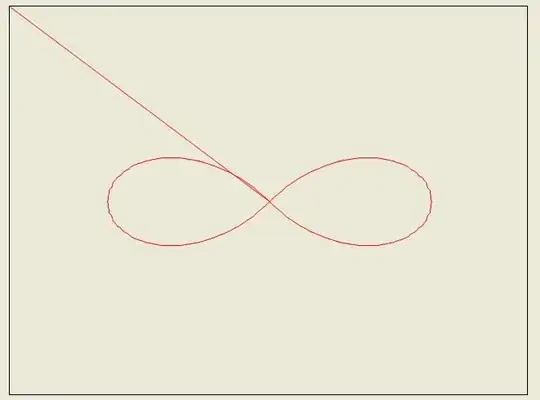

I cannot upload pictures, so I will try to explain my problem as best I can. I want to simulate the detection of a moving object by a unicycle-type robot. The robot is modelled with three states: its position (x, y) and its heading theta. The obstacle is represented as a circle of radius r1. I want to find the angles alpha_1 and alpha_2, measured in the robot's local coordinate frame, to the two tangent lines of the circle, as shown in the first image.
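One way to make "angle in the robot's local frame" precise is to rotate the vector from the robot to the circle centre into the robot frame and take its bearing. This is a sketch that assumes the centre is at $(x_c, y_c)$ in world coordinates (hypothetical names, not from the original code) and that $\theta$ is measured counter-clockwise from the world x-axis:

$$
\begin{aligned}
x_r &= \cos\theta\,(x_c - x) + \sin\theta\,(y_c - y) \\
y_r &= -\sin\theta\,(x_c - x) + \cos\theta\,(y_c - y) \\
\mathrm{aux}_t &= \operatorname{atan2}(y_r,\, x_r) \in (-\pi, \pi]
\end{aligned}
$$

Because atan2 returns a value in $(-\pi, \pi]$, aux_t obtained this way needs no extra wrapping. It equals $\operatorname{atan2}(y_c - y,\, x_c - x) - \theta$ up to a multiple of $2\pi$.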

What I am doing is first finding the angle from the robot's heading to the line joining the robot and the circle's centre (called aux_t in my code), and then the angle between that line and the tangent to the circle (called phi_c). Finally, I get the angles I want as alpha_1 = aux_t - phi_c and alpha_2 = aux_t + phi_c. The diagram I am thinking of is in the second image.
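Here is a minimal Python sketch of the construction described above. It assumes that phi_c is computed as asin(r1 / d), which is the angle at the robot in the right triangle formed by the robot, the circle centre and the tangent point. It also assumes that every angle is wrapped into [-π, π]. The names `tangent_angles`, `wrap_to_pi`, `xc` and `yc` are hypothetical and not taken from the original code.

```python
import math


def wrap_to_pi(angle: float) -> float:
    """Map an angle in radians into [-pi, pi] using atan2(sin, cos)."""
    return math.atan2(math.sin(angle), math.cos(angle))


def tangent_angles(x, y, theta, xc, yc, r1):
    """Return (aux_t, phi_c, alpha_1, alpha_2) in radians, in the robot frame.

    Hypothetical helper: (x, y, theta) is the robot pose, (xc, yc) the circle
    centre and r1 its radius. Angles are positive counter-clockwise from the heading.
    """
    dx, dy = xc - x, yc - y
    d = math.hypot(dx, dy)  # distance from robot to circle centre
    if d <= r1:
        raise ValueError("robot is on or inside the circle; tangents are undefined")
    # Bearing of the centre relative to the heading, wrapped so it cannot drift past +/-pi
    aux_t = wrap_to_pi(math.atan2(dy, dx) - theta)
    # Right triangle robot / centre / tangent point: sin(phi_c) = r1 / d
    phi_c = math.asin(r1 / d)
    alpha_1 = wrap_to_pi(aux_t - phi_c)
    alpha_2 = wrap_to_pi(aux_t + phi_c)
    return aux_t, phi_c, alpha_1, alpha_2
```

Consider a robot at the origin facing +x with a unit circle centred at (2, 0). For that case, `tangent_angles(0, 0, 0, 2, 0, 1)` gives alpha_1 = -π/6 and alpha_2 = +π/6. If non-negative angles are preferred, `angle % (2 * math.pi)` maps an angle into [0, 2π) in Python. Restricting to [0, π] alone would lose the information about which side of the heading a tangent lies on.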

The problem is that my code goes wrong when I try to find the alpha angles. It starts out calculating them correctly (though as negative values; I'm not sure whether this is causing my trouble). However, as the car and the circle get closer, phi_c becomes larger than the magnitude of aux_t and one of the alphas suddenly changes sign. For example, I am getting this:

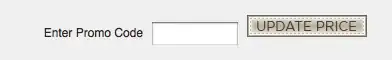

| aux_t (rad) | phi_c (rad) | alpha_1 (rad) | alpha_2 (rad) |
|---:|---:|---:|---:|
| -0.81 | +0.52 | -1.33 | -0.29 |
| -0.74 | +0.61 | -1.35 | -0.12 |
| -0.69 | +0.67 | -1.37 | -0.02 |
| -0.64 | +0.74 | -1.38 | +0.10 |
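The table values are consistent with alpha_1 = aux_t - phi_c and alpha_2 = aux_t + phi_c, up to two-decimal rounding. The quick check below uses values copied from the table; nothing in it comes from the original code. Because aux_t is negative in every row, it also shows that alpha_2 turns positive in exactly the row where phi_c first exceeds |aux_t|:

```python
# Rows copied from the table: (aux_t, phi_c, alpha_1, alpha_2), in radians.
ROWS = [
    (-0.81, 0.52, -1.33, -0.29),
    (-0.74, 0.61, -1.35, -0.12),
    (-0.69, 0.67, -1.37, -0.02),
    (-0.64, 0.74, -1.38, 0.10),
]

TOL = 0.015  # printed values are rounded to two decimals

for aux_t, phi_c, alpha_1, alpha_2 in ROWS:
    # The construction described in the question: subtract / add phi_c
    assert abs((aux_t - phi_c) - alpha_1) <= TOL
    assert abs((aux_t + phi_c) - alpha_2) <= TOL
    # With aux_t < 0, aux_t + phi_c > 0 exactly when phi_c > |aux_t|
    print(f"aux_t={aux_t:+.2f} phi_c={phi_c:+.2f} "
          f"phi_c>|aux_t|={phi_c > abs(aux_t)} alpha_2={alpha_2:+.2f}")
```

Assume angles are measured counter-clockwise from the heading. Then a positive alpha_2 would mean that this tangent has swung past the robot's heading axis. The sign change may therefore be expected geometry rather than a problem with the angle range.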

So basically, alpha_2 goes wrong from the last row of the table onwards. I know I am doing something wrong, but I'm not sure what, and I don't know how to limit the angles to the range 0 to pi. Is there a better way to find the alpha angles? Here is the section of my code: