I know how to generate a plane and map a texture onto it. Now I am trying to display an alpha-blended PNG on my form as a sprite.

I have come up with the following code from Googling and guessing:

```csharp
// Get the OpenGL object.
var gl = openGLControl.OpenGL;

// We need to load the texture from file.
var textureImage = Resources.Resource1.bg;

// A bit of extra initialisation here, we have to enable textures.
gl.Enable(OpenGL.GL_TEXTURE_2D);

// Get one texture id, and stick it into the textures array.
gl.GenTextures(1, textures);

// Bind the texture.
gl.BindTexture(OpenGL.GL_TEXTURE_2D, textures[0]);

gl.Enable(OpenGL.GL_BLEND);
gl.BlendFunc(OpenGL.GL_SRC_ALPHA, OpenGL.GL_DST_ALPHA);

var locked = textureImage.LockBits(
    new Rectangle(0, 0, textureImage.Width, textureImage.Height),
    System.Drawing.Imaging.ImageLockMode.ReadOnly,
    System.Drawing.Imaging.PixelFormat.Format32bppArgb
);

gl.TexImage2D(
    OpenGL.GL_TEXTURE_2D,
    0,
    4,
    textureImage.Width,
    textureImage.Height,
    0,
    OpenGL.GL_RGBA,
    OpenGL.GL_UNSIGNED_BYTE,
    locked.Scan0
);

gl.TexParameter(OpenGL.GL_TEXTURE_2D, OpenGL.GL_TEXTURE_WRAP_S, OpenGL.GL_CLAMP);
gl.TexParameter(OpenGL.GL_TEXTURE_2D, OpenGL.GL_TEXTURE_WRAP_T, OpenGL.GL_CLAMP);
gl.TexParameter(OpenGL.GL_TEXTURE_2D, OpenGL.GL_TEXTURE_MAG_FILTER, OpenGL.GL_LINEAR);
gl.TexParameter(OpenGL.GL_TEXTURE_2D, OpenGL.GL_TEXTURE_MIN_FILTER, OpenGL.GL_LINEAR);
```
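For reference, with OpenGL's default blend equation (`GL_FUNC_ADD`), the factors passed to `BlendFunc` combine each incoming texel color $C_s$ (alpha $A_s$) with the color already in the framebuffer $C_d$ (alpha $A_d$) as in the first line below. The second line is the conventional "over" blend that `GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA` produces, shown for comparison. The numbers that follow are illustrative and assume $A_d = 1$, which is what OpenGL uses when the framebuffer has no alpha channel.

$$
C = C_s A_s + C_d A_d \qquad (\texttt{GL\_SRC\_ALPHA},\ \texttt{GL\_DST\_ALPHA})
$$

$$
C = C_s A_s + C_d (1 - A_s) \qquad (\texttt{GL\_SRC\_ALPHA},\ \texttt{GL\_ONE\_MINUS\_SRC\_ALPHA})
$$

For example, with $C_s = 0.8$, $A_s = 0.5$, $C_d = 0.6$ and $A_d = 1$, the first form gives $0.8 \cdot 0.5 + 0.6 \cdot 1 = 1.0$, while the second gives $0.8 \cdot 0.5 + 0.6 \cdot 0.5 = 0.7$. A fully transparent texel ($A_s = 0$) leaves the background unchanged under both, but a fully opaque texel ($A_s = 1$) gives $C_s + C_d$ (clamped to 1) under the first form instead of simply $C_s$, so overlapping sprites add up rather than cover one another.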

Here is the original source image that I am using:

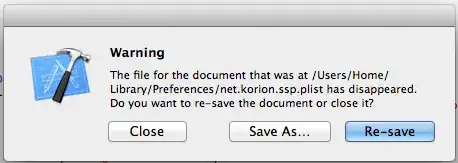

Here is the output when I render this sprite 10 × 10 (100) times on my form:

The output is all messed up, and it doesn't seem to respect the image's alpha channel. What do I need to change in my code so that the sprite renders correctly?