I am working on linear regression with two-dimensional data, but I cannot get the correct weights for the regression line. The batch gradient descent code below seems to have a problem: with large data values (x up to about 80000) the weights become NaN, and after scaling the data to the range 0 to 1 the weights are still wrong, because the regression line does not match the data.

    function [w, epoch_batch, error_batch] = batch_gradient_descent(x, y)
    % number of examples
    q = size(x,1);
    % learning rate
    alpha = 1e-10;
    w0 = rand(1);
    w1 = rand(1);
    curr_error = inf;
    eps = 1e-7;
    epochs = 1e100;
    epoch_batch = 1;
    error_batch = inf;
    for epoch = 1:epochs
        prev_error = curr_error;
        curr_error = sum((y - (w1.*x + w0)).^2);
        w0 = w0 + alpha/q * sum(y - (w1.*x + w0));
        w1 = w1 + alpha/q * sum((y - (w1.*x + w0)).*x);
        if ((abs(prev_error - curr_error) < eps))
            epoch_batch = epoch;
            error_batch = abs(prev_error - curr_error);
            break;
        end
    end
    w = [w0, w1];

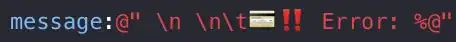

Could you tell me where I made an error? After hours of trying, the code still looks correct to me.

Data:

    x = [35680; 42514; 15162; 35298; 29800; 40255; 74532; 37464; 31030; 24843; 36172; 39552; 72545; 75352; 18031];
    y = [2217; 2761; 990; 2274; 1865; 2606; 4805; 2396; 1993; 1627; 2375; 2560; 4597; 4871; 1119];
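As a reference for what the gradient descent weights should approach, the least-squares line for the data above can be computed in closed form. This is only a minimal sketch and not part of the original code: `X` and `w_ref` are names introduced here for illustration, and the backslash operator is used to solve the least-squares problem directly.

```matlab
% Data from the listing above, as column vectors
x = [35680; 42514; 15162; 35298; 29800; 40255; 74532; 37464; ...
     31030; 24843; 36172; 39552; 72545; 75352; 18031];
y = [2217; 2761; 990; 2274; 1865; 2606; 4805; 2396; ...
     1993; 1627; 2375; 2560; 4597; 4871; 1119];

q = size(x, 1);          % number of examples
X = [ones(q, 1), x];     % design matrix: ones for w0, x for w1 (illustrative name)

% Least-squares solution of X * w = y:
% w_ref(1) is the intercept w0, w_ref(2) is the slope w1
w_ref = X \ y;

fprintf('reference w0 = %g, w1 = %g\n', w_ref(1), w_ref(2));
```

If the weights returned by `batch_gradient_descent` differ noticeably from `w_ref`, the iteration has probably stopped before converging.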

Here is the code to plot the data:

    figure(1)
    % plot data points
    plot(x, y, 'ro');
    hold on;
    xlabel('x value');
    ylabel('y value');
    grid on;
    % x vector from min to max data point
    x = min(x):max(x);
    % calculate y with weights from batch gradient descent
    y = (w(1) + w(2)*x);
    % plot the regression line
    plot(x,y,'r');

For the unscaled data set, I was able to find the weights by using a smaller learning rate, alpha = 1e-10.
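One way to see why such a small learning rate is needed: for a mean-squared-error cost, gradient descent with a simultaneous update of both weights is stable only while alpha stays below 2 divided by the largest eigenvalue of `X'*X/q`. The sketch below estimates that bound for the unscaled x values. The names `X`, `H`, and `alpha_max` are illustrative, and the bound is an estimate for the simultaneous-update variant, not an exact statement about the code above.

```matlab
% Unscaled x values from the data listing, as a column vector
x = [35680; 42514; 15162; 35298; 29800; 40255; 74532; 37464; ...
     31030; 24843; 36172; 39552; 72545; 75352; 18031];

q = size(x, 1);              % number of examples
X = [ones(q, 1), x];         % design matrix: ones for w0, x for w1

% Hessian of the cost (1/(2q)) * sum of squared residuals
H = (X' * X) / q;

% Step sizes above this value make the iteration diverge
alpha_max = 2 / max(eig(H));

fprintf('alpha should stay below about %g\n', alpha_max);
```

With x values in the tens of thousands, the largest eigenvalue is dominated by `mean(x.^2)`, so the bound comes out on the order of 1e-9. That is consistent with alpha = 1e-10 working, and with larger values producing NaN.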

However, after scaling the data to the range 0 to 1, I still have trouble getting matching weights.

    scaled_x =
        0.4735
        0.5642
        0.2012
        0.4684
        0.3955
        0.5342
        0.9891
        0.4972
        0.4118
        0.3297
        0.4800
        0.5249
        0.9627
        1.0000
        0.2393

    scaled_y_en =
        0.0294
        0.0366
        0.0131
        0.0302
        0.0248
        0.0346
        0.0638
        0.0318
        0.0264
        0.0216
        0.0315
        0.0340
        0.0610
        0.0646
        0.0149
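Note that the `scaled_y_en` values above top out at 0.0646, which appears to match y divided by max(x) rather than by max(y). A common alternative to dividing by the maximum is to standardize x (subtract the mean, divide by the standard deviation), run gradient descent on the standardized input, and then map the weights back to the original units. The following is a minimal sketch under those assumptions, not a verified fix for the original setup: the names `mu`, `sigma`, `z`, `v0`, `v1`, `g0`, `g1` and the choices `alpha = 0.1` and 2000 iterations are introduced here for illustration. Both gradients are computed before either weight changes, so the update is simultaneous.

```matlab
x = [35680; 42514; 15162; 35298; 29800; 40255; 74532; 37464; ...
     31030; 24843; 36172; 39552; 72545; 75352; 18031];
y = [2217; 2761; 990; 2274; 1865; 2606; 4805; 2396; ...
     1993; 1627; 2375; 2560; 4597; 4871; 1119];

q = size(x, 1);
mu = mean(x);                % assumed scaling: z-score standardization of x
sigma = std(x);
z = (x - mu) / sigma;

alpha = 0.1;                 % illustrative learning rate for standardized input
v0 = 0;                      % weights in standardized units
v1 = 0;
for epoch = 1:2000
    r  = y - (v1 .* z + v0); % residuals with the current weights
    g0 = sum(r) / q;         % both gradient terms use the same residuals,
    g1 = sum(r .* z) / q;    % so the update is simultaneous
    v0 = v0 + alpha * g0;
    v1 = v1 + alpha * g1;
end

% Map back to original units: y = v0 + v1 * (x - mu) / sigma
w1 = v1 / sigma;
w0 = v0 - v1 * mu / sigma;
w  = [w0, w1];

% Closed-form check: polyfit returns [slope, intercept] for degree 1
p = polyfit(x, y, 1);
fprintf('gradient descent: w0 = %g, w1 = %g\n', w(1), w(2));
fprintf('polyfit:          w0 = %g, w1 = %g\n', p(2), p(1));
```

Because `w` is expressed in the original units, it can be passed to the plotting code above without rescaling the data points.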