I need help structuring overlapping date ranges in my data warehouse. My objective is to model the data so that reports can be filtered at the date level.

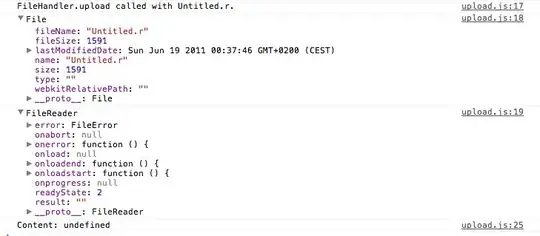

I have two dimensions, DimEmployee and DimDate, and a fact table called FactAttendance. The records in this fact are stored as follows:

To represent this graphically —

A report needs to be built on this data that lets the end user filter by selecting a date range. Let's assume the user selects the date range D1 to D20. On making this selection, the user should see the number of days on which at least one employee was on leave. In this particular example, I should see the sum of the light-blue segments at the bottom, i.e. 11 days.
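To make the expected calculation concrete, here is a minimal Python sketch of what the report would have to compute: the number of days in the selected window that are covered by at least one leave range. The function name `days_with_any_leave` and the sample ranges are made up for illustration (they are not my actual fact rows), and days are plain integers standing in for D1, D2, and so on.

```python
from typing import Iterable, Tuple


def days_with_any_leave(leaves: Iterable[Tuple[int, int]], start: int, end: int) -> int:
    """Count days in [start, end] covered by at least one leave range.

    Each leave is (first_day, last_day), both inclusive.
    """
    # Clip each range to the selected window and drop ranges outside it.
    clipped = sorted(
        (max(s, start), min(e, end))
        for s, e in leaves
        if s <= end and e >= start
    )

    total = 0
    covered_until = start - 1  # last day that has already been counted
    for s, e in clipped:
        if e <= covered_until:
            continue  # this range adds no new days
        first_new_day = max(s, covered_until + 1)
        total += e - first_new_day + 1
        covered_until = e
    return total


# Hypothetical leave ranges, NOT the data from the question.
sample_leaves = [(2, 5), (4, 8), (12, 14), (14, 15), (19, 25)]

# Days 2-8 (7) + days 12-15 (4) + days 19-20 (2) = 13
print(days_with_any_leave(sample_leaves, 1, 20))  # prints 13
```

Overlapping days are counted once, and a leave that runs past the selected window only contributes the days inside it.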

One approach I am considering is to store one row per employee per date for each leave. The problem with this approach is that it will greatly increase the number of records in the fact table, since every leave produces as many rows as it has days. Besides, the other columns in the fact will hold redundant data.
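As a rough illustration of this row-per-day approach, the sketch below expands each leave into daily rows and then counts distinct dates within the selected range. The employee keys, dates, and function names are hypothetical, and a real fact table would carry more columns than the two shown here.

```python
from datetime import date, timedelta


def expand_to_daily_rows(leaves):
    """Yield one (employee_key, leave_date) row per day of each leave range."""
    for employee_key, first_day, last_day in leaves:
        for offset in range((last_day - first_day).days + 1):
            yield employee_key, first_day + timedelta(days=offset)


def distinct_leave_days(rows, start, end):
    """Count distinct dates in [start, end] on which anyone was on leave."""
    return len({d for _, d in rows if start <= d <= end})


# Hypothetical leaves: (employee_key, first_day, last_day), both days inclusive.
sample_leaves = [
    (101, date(2024, 1, 2), date(2024, 1, 5)),
    (102, date(2024, 1, 4), date(2024, 1, 8)),
    (103, date(2024, 1, 12), date(2024, 1, 14)),
]

daily_rows = list(expand_to_daily_rows(sample_leaves))

# Row count is the total number of leave days: 4 + 5 + 3 = 12
print(len(daily_rows))  # prints 12

# Jan 2-8 (7 days) + Jan 12-14 (3 days) = 10 distinct days
print(distinct_leave_days(daily_rows, date(2024, 1, 1), date(2024, 1, 20)))  # prints 10
```

With this layout the report query reduces to a distinct count of dates, at the cost of one fact row for every day of every leave.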

How are such overlapping date/time problems usually handled in a warehouse? Is there a better way that does not involve inserting numerous rows?