I use Pentaho BI Server 5, but it should work the same way on Pentaho BI Server 6.

My Kettle job runs many sub-transformations. The transformation files are stored in a directory on the file system, e.g. /opt/etl.

So let's say I have one job (daily_job.kjb) with two sub-transformations.

To run a Kettle job on Pentaho BI CE I use these steps:

- set the transformation location properly in the job file
- upload the sub-transformations to the proper directory on the server (/opt/etl)
- create an xaction file which executes the Kettle job on the BI server (daily.xaction)
- upload the daily.xaction and daily_job.kjb files to the Pentaho BI server (same folder)
- schedule the daily.xaction file on the Pentaho BI server

Job settings in daily_job.kjb:
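As a rough sketch, the relevant part of daily_job.kjb might look like the fragment below when the file is opened as XML. The entry name and the file name sub_transformation_1.ktr are hypothetical, and most elements of a real job entry are left out. The only point is that the transformation is referenced by file name, with an absolute path under /opt/etl that also exists on the server.

```xml
<!-- Fragment of daily_job.kjb: one "Transformation" job entry.
     Entry name and .ktr file name are hypothetical placeholders;
     a real entry contains many more elements, omitted here. -->
<entry>
  <name>Sub-transformation 1</name>
  <type>TRANS</type>
  <!-- reference the transformation by file name, not by repository -->
  <specification_method>filename</specification_method>
  <!-- absolute path: must match the directory used on the BI server -->
  <filename>/opt/etl/sub_transformation_1.ktr</filename>
</entry>
```

The second sub-transformation would get an entry of the same shape with its own file name.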

The xaction code in daily.xaction (it simply executes daily_job.kjb, which is located in the same BI server folder as the xaction file):

    <?xml version="1.0" encoding="UTF-8"?>
    <action-sequence>
      <title>My scheduled job</title>
      <version>1</version>
      <logging-level>ERROR</logging-level>
      <documentation>
        <author>mzy</author>
        <description>Sequence for running daily job.</description>
        <help/>
        <result-type/>
        <icon/>
      </documentation>

      <inputs>
      </inputs>

      <outputs>
        <logResult type="string">
          <destinations>
            <response>content</response>
          </destinations>
        </logResult>
      </outputs>

      <resources>
        <job-file>
          <solution-file>
            <location>daily_job.kjb</location>
            <mime-type>text/xml</mime-type>
          </solution-file>
        </job-file>
      </resources>

      <actions>
        <action-definition>
          <component-name>KettleComponent</component-name>
          <action-type>Pentaho Data Integration Job</action-type>
          <action-inputs>
          </action-inputs>
          <action-resources>
            <job-file type="resource"/>
          </action-resources>
          <action-outputs>
            <kettle-execution-log type="string" mapping="logResult"/>
            <kettle-execution-status type="string" mapping="statusResult"/>
          </action-outputs>
          <component-definition>
            <kettle-logging-level><![CDATA[info]]></kettle-logging-level>
          </component-definition>
        </action-definition>
      </actions>
    </action-sequence>

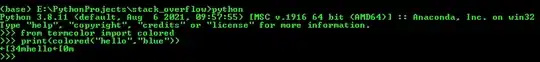

Scheduling the Kettle job (xaction file) on Pentaho BI CE: