I am getting an Access Denied error from the AWS S3 service in my Lambda function.

This is the code:

// dependencies

var async = require('async');

var AWS = require('aws-sdk');

var gm = require('gm').subClass({ imageMagick: true }); // Enable ImageMagick integration.

exports.handler = function(event, context) {

var srcBucket = event.Records[0].s3.bucket.name;

// Object key may have spaces or unicode non-ASCII characters.

var key = decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, " "));

/*

{

originalFilename: <string>,

versions: [

{

size: <number>,

crop: [x,y],

max: [x, y],

rotate: <number>

}

]

}*/

var fileInfo;

var dstBucket = "xmovo.transformedimages.develop";

try {

//TODO: Decompress and decode the returned value

fileInfo = JSON.parse(key);

//download s3File

// get reference to S3 client

var s3 = new AWS.S3();

// Download the image from S3 into a buffer.

s3.getObject({

Bucket: srcBucket,

Key: key

},

function (err, response) {

if (err) {

console.log("Error getting from s3: >>> " + err + "::: Bucket-Key >>>" + srcBucket + "-" + key + ":::Principal>>>" + event.Records[0].userIdentity.principalId, err.stack);

return;

}

// Infer the image type.

var img = gm(response.Body);

var imageType = null;

img.identify(function (err, data) {

if (err) {

console.log("Error image type: >>> " + err);

deleteFromS3(srcBucket, key);

return;

}

imageType = data.format;

//foreach of the versions requested

async.each(fileInfo.versions, function (currentVersion, callback) {

//apply transform

// Pass the each() callback as the waterfall's final callback, not as a task.

async.waterfall([async.apply(transform, response, currentVersion), uploadToS3], callback);

}, function (err) {

if (err) console.log("Error on execution of waterfall: >>> " + err);

else {

//when all done then delete the original image from srcBucket

deleteFromS3(srcBucket, key);

}

});

});

});

}

catch (ex){

context.fail("exception thrown: " + ex);

deleteFromS3(srcBucket, key);

return;

}

function transform(response, version, callback){

var imageProcess = gm(response.Body);

if (version.rotate!=0) imageProcess = imageProcess.rotate("black",version.rotate);

if(version.size!=null) {

if (version.crop != null) {

//crop the image from the coordinates

imageProcess=imageProcess.crop(version.size[0], version.size[1], version.crop[0], version.crop[1]);

}

else {

//find the bigger and resize proportioned the other dimension

var widthIsMax = version.size[0]>version.size[1];

var maxValue = Math.max(version.size[0],version.size[1]);

imageProcess=(widthIsMax)?imageProcess.resize(maxValue):imageProcess.resize(null, maxValue);

}

}

//finally convert the image to jpg 90%

imageProcess.toBuffer("jpg",{quality:90}, function(err, buffer){

if (err) return callback(err); // return so the callback is not invoked twice

callback(null, version, "image/jpeg", buffer);

});

}

function deleteFromS3(bucket, filename){

s3.deleteObject({

Bucket: bucket,

Key: filename

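}, function (err) {

// Without a callback (or an explicit .send()), the SDK never dispatches the request.

if (err) console.log("Error deleting from s3: >>> " + err);

});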

}

function uploadToS3(version, contentType, data, callback) {

// Stream the transformed image to a different S3 bucket.

var dstKey = fileInfo.originalFilename + "_" + version.size + ".jpg";

s3.putObject({

Bucket: dstBucket,

Key: dstKey,

Body: data,

ContentType: contentType

}, callback);

}

};

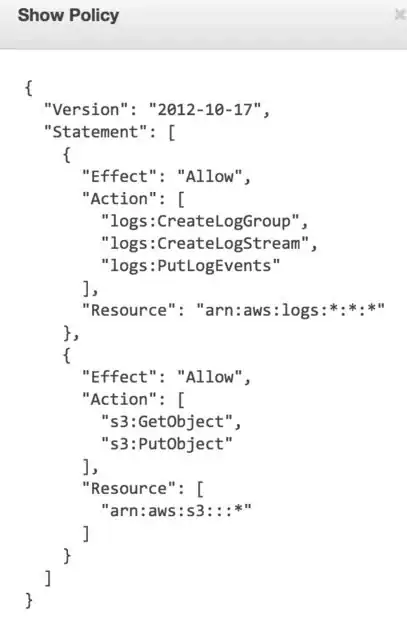

This is the error in CloudWatch:

AccessDenied: Access Denied

This is the stack trace:

at Request.extractError (/var/runtime/node_modules/aws-sdk/lib/services/s3.js:329:35)

at Request.callListeners (/var/runtime/node_modules/aws-sdk/lib/sequential_executor.js:105:20)

at Request.emit (/var/runtime/node_modules/aws-sdk/lib/sequential_executor.js:77:10)

at Request.emit (/var/runtime/node_modules/aws-sdk/lib/request.js:596:14)

at Request.transition (/var/runtime/node_modules/aws-sdk/lib/request.js:21:10)

at AcceptorStateMachine.runTo (/var/runtime/node_modules/aws-sdk/lib/state_machine.js:14:12)

at /var/runtime/node_modules/aws-sdk/lib/state_machine.js:26:10

at Request.<anonymous> (/var/runtime/node_modules/aws-sdk/lib/request.js:37:9)

at Request.<anonymous> (/var/runtime/node_modules/aws-sdk/lib/request.js:598:12)

at Request.callListeners (/var/runtime/node_modules/aws-sdk/lib/sequential_executor.js:115:18)

There is no other description or information in the log. The S3 bucket permissions allow everyone to put, list and delete.
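To make the permission setup concrete, here is a rough sketch that compares the S3 calls the handler makes with the permissions described above. It assumes that "put, list and delete" corresponds to the IAM actions `s3:PutObject`, `s3:ListBucket` and `s3:DeleteObject`. The names `REQUIRED_ACTIONS`, `GRANTED_ACTIONS` and `missingActions` are hypothetical and only serve this illustration. They are not part of the function above, and the real bucket configuration may be expressed differently (for example as ACL grants).

```javascript
// Hypothetical illustration, not part of the Lambda function.
// S3 client calls used by the handler, mapped to the IAM action each one requires.
var REQUIRED_ACTIONS = {
    getObject: "s3:GetObject",
    putObject: "s3:PutObject",
    deleteObject: "s3:DeleteObject"
};

// Assumption: "put, list and delete" translates to these IAM actions.
var GRANTED_ACTIONS = ["s3:PutObject", "s3:ListBucket", "s3:DeleteObject"];

// Returns a description of every client call whose action is not granted.
function missingActions(required, granted) {
    return Object.keys(required)
        .filter(function (call) {
            return granted.indexOf(required[call]) === -1;
        })
        .map(function (call) {
            return call + " needs " + required[call];
        });
}

// Logs one entry: getObject needs s3:GetObject
console.log(missingActions(REQUIRED_ACTIONS, GRANTED_ACTIONS));
```

Under that assumption, the only call in the handler without a matching grant is `getObject`, which is also the call that logs the error.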

What can I do to access the S3 bucket?

PS: In the Lambda event properties, the principal is correct and has administrative privileges.
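For reference, this is a minimal sketch of how the bucket, key and principal that appear in the error log are read from the event. The sample event values (`example-source-bucket`, `EXAMPLEPRINCIPAL`, the key) and the helper name `describeRecord` are made up for illustration. Only the field paths come from the handler above.

```javascript
// Hypothetical sample event: only the field paths match the real S3 notification.
var sampleEvent = {
    Records: [{
        userIdentity: { principalId: "EXAMPLEPRINCIPAL" },
        s3: {
            bucket: { name: "example-source-bucket" },
            object: { key: "my+photo%281%29.jpg" }
        }
    }]
};

// Extracts the values the handler logs when getObject fails.
function describeRecord(record) {
    return {
        bucket: record.s3.bucket.name,
        // Keys arrive URL-encoded, with spaces sent as "+".
        key: decodeURIComponent(record.s3.object.key.replace(/\+/g, " ")),
        // In the S3 event format, this identifies who caused the event.
        principal: record.userIdentity.principalId
    };
}

console.log(describeRecord(sampleEvent.Records[0]));
```

Note that `principalId` in the event describes the identity that triggered the notification. The permissions of the role the function executes under are configured separately, so they may be worth checking as well.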