I'm fairly new to R and I'm running into an issue when parallelizing the processing of (not so) big data.

To this end, I'm using the data.table package for data storage and handling, and the snowfall package as a wrapper to parallelize the work.

Here is a specific example: I have a large vector and I want to apply a function f (I use a vectorized version) to every element. I split the large vector into N balanced parts (smaller vectors) and process them like this:

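    library(snowfall)

    sfInit(parallel = TRUE, cpus = ncpus)

    # Split the large vector into ncpus smaller vectors
    balancedVector <- myVectorLoadBalanceFunction(myLargeVector, ncpus)

    # Process each sub-vector on a separate worker
    processedSubVectors <- sfLapply(balancedVector, function(subVector) {
      myVectorizedFunction(subVector)
    })

    sfStop()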
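For completeness, since myVectorLoadBalanceFunction is not shown here, this is a minimal sketch of what such a splitter could look like. The name `splitIntoChunks` and the contiguous-chunk strategy are assumptions made for illustration only, not my actual implementation:

```r
# Hypothetical stand-in for myVectorLoadBalanceFunction: split a vector into
# nParts contiguous chunks whose lengths differ by at most one element.
splitIntoChunks <- function(x, nParts) {
  nParts <- as.numeric(nParts)  # avoid integer overflow in the product below
  stopifnot(nParts >= 1, length(x) >= nParts)
  # Assign each position a chunk id in 1..nParts, in increasing order.
  chunkId <- ceiling(seq_along(x) * nParts / length(x))
  # split() dispatches on the class of x, so Date vectors stay Date vectors.
  unname(split(x, chunkId))
}

exampleDates <- as.Date("2000-01-01") + 0:9
chunks <- splitIntoChunks(exampleDates, 3)
lengths(chunks)  # chunk sizes: 3 3 4
```

The resulting list can be passed to sfLapply in the same way as balancedVector above.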

What I find odd is this: when I run this code from the command line or a script (i.e. myLargeVector lives in the global environment), the run time is good, and the MS Windows Task Manager shows each core (worker process) using an amount of memory proportional to the subVector size. But when I run the same code inside a function (i.e. I call the function from the command line and pass myLargeVector as an argument), the run time gets worse, and each core now seems to hold a full copy of myLargeVector...

Does this make sense?

Regards

EDITED to add a reproducible example

To keep it simple, here is a dummy example using a Date vector of ~300 MB (over 36 million elements) and the weekdays function:

    library(snowfall)

    aSomewhatLargeVector <- seq.Date(from = as.Date("1900-01-01"), to = as.Date("2000-01-01"), by = 1)
    aSomewhatLargeVector <- rep(aSomewhatLargeVector, 1000)

    # Sequential version to compare
    system.time(processedSubVectorsSequential <- weekdays(aSomewhatLargeVector))
    #   user  system elapsed
    # 108.05    1.06  109.53

    gc() # I restarted R

    # Parallel version within a function scope
    myCallingFunction <- function(aSomewhatLargeVector) {
      sfInit(parallel = TRUE, cpus = 2)
      balancedVector <- list(aSomewhatLargeVector[seq(1, length(aSomewhatLargeVector)/2)],
                             aSomewhatLargeVector[seq(length(aSomewhatLargeVector)/2+1, length(aSomewhatLargeVector))])
      processedSubVectorsParallelFunction <- sfLapply(balancedVector, function(subVector) {
        weekdays(subVector)
      })
      sfStop()
      processedSubVectorsParallelFunction <- unlist(processedSubVectorsParallelFunction)
      return(processedSubVectorsParallelFunction)
    }

    system.time(processedSubVectorsParallelFunction <- myCallingFunction(aSomewhatLargeVector))
    #  user  system elapsed
    # 11.63   10.61   94.27
    #  user  system elapsed
    # 12.12    9.09   99.07

    gc() # I restarted R

    # Parallel version within the global scope
    time0 <- proc.time()
    sfInit(parallel = TRUE, cpus = 2)
    balancedVector <- list(aSomewhatLargeVector[seq(1, length(aSomewhatLargeVector)/2)],
                           aSomewhatLargeVector[seq(length(aSomewhatLargeVector)/2+1, length(aSomewhatLargeVector))])
    processedSubVectorsParallel <- sfLapply(balancedVector, function(subVector) {
      weekdays(subVector)
    })
    sfStop()
    processedSubVectorsParallel <- unlist(processedSubVectorsParallel)
    time1 <- proc.time()
    time1-time0
    # user  system elapsed
    # 7.94    4.75   85.14
    # user  system elapsed
    # 9.92    3.93   89.69

My timings appear in the comments. The difference is not very large for this dummy example, but the ordering is clear: sequential time > parallel within a function > parallel within the global scope.

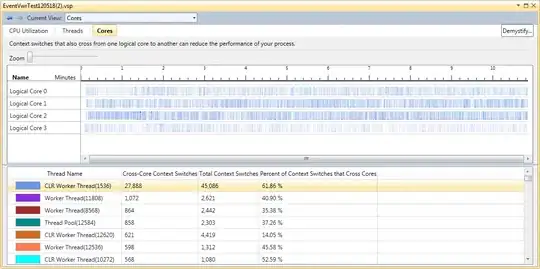

Furthermore, you can see the differences in allocated memory:

3.3 GB < 5.2 GB > 4.4 GB
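One check that might narrow this down, offered as a guess rather than a confirmed diagnosis: R serializes a closure together with its enclosing environment unless that environment is the global one, which is stored only as a reference. If that is what happens here, the anonymous function created inside myCallingFunction would carry the function's local variables with it. The sketch below compares serialized sizes without starting any cluster; `bigObject`, `globalWorker` and `makeWorkerInFunction` are hypothetical names used only for illustration:

```r
# Sketch: compare the serialized size of a closure whose enclosing environment
# is the global environment with one created inside a function call.
bigObject <- numeric(1e6)  # roughly 8 MB of doubles

# Worker whose enclosing environment is the global environment
# (set explicitly so the comparison does not depend on how this code is run).
globalWorker <- function(subVector) weekdays(subVector)
environment(globalWorker) <- globalenv()

# Hypothetical helper: returns a worker created inside a function frame.
makeWorkerInFunction <- function(largeArgument) {
  force(largeArgument)  # evaluate the argument so its value lives in this frame
  # The enclosing environment of this closure is the current frame, which
  # holds largeArgument and is serialized together with the closure.
  function(subVector) weekdays(subVector)
}
localWorker <- makeWorkerInFunction(bigObject)

# Number of bytes that would be sent when shipping each function
sizeGlobal <- length(serialize(globalWorker, NULL))
sizeLocal  <- length(serialize(localWorker, NULL))
c(global = sizeGlobal, local = sizeLocal)
```

If the local size turns out to be much larger, defining the worker function at top level, or resetting its environment with `environment(fn) <- globalenv()` before calling sfLapply, would be one thing to try.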

Hope this helps