I have a program that reads data over serial from an ADC on a PSoC.

Each number is sent in the format `<uint16>`, including the '<' and '>' symbols, so every frame is four bytes: 00111100 XXXXXXXX XXXXXXXX 00111110, where the X's make up the 16-bit unsigned integer.
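
To make the framing concrete, here is a minimal sketch of how a single four-byte frame would decode. It assumes the two payload bytes arrive low byte first (little-endian), which the description above does not state, and `FrameDecoder.TryDecodeFrame` is a hypothetical helper name used only for illustration.

```csharp
using System;

static class FrameDecoder
{
    const byte Start = 0x3C; // '<' = 00111100
    const byte End = 0x3E;   // '>' = 00111110

    // Hypothetical helper: decodes one 4-byte frame and rejects it
    // if the delimiters are not in the first and last positions.
    // Assumption: the payload is little-endian (low byte first).
    public static bool TryDecodeFrame(byte[] frame, out ushort value)
    {
        value = 0;
        if (frame == null || frame.Length != 4) return false;
        if (frame[0] != Start || frame[3] != End) return false;

        value = (ushort)(frame[1] | (frame[2] << 8));
        return true;
    }
}
```

Under that assumption, the bytes `0x3C 0x10 0x27 0x3E` decode to 10000 (0x2710), while the same bytes shifted by one position (`0x10 0x27 0x3E 0x3C`) are rejected because the delimiters are in the wrong place.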

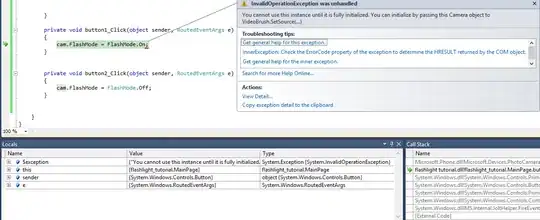

Occasionally the read won't work very well and the program uses the binary data for the '>' symbol as part of its number resulting in the glitch as shown in this screenshot of 2500 samples (ignore the drop between samples 800 to 1500, that was me playing with the ADC input):

You can clearly see that the glitch produces roughly the same value each time it happens.

The data is sent ten times a second, so my plan was to take ten samples, remove any glitches (values that are far away from the other samples), and then average the remaining values to smooth out the curve a bit. The output can be anywhere from 0 to 50000+, so I can't just discard values below a fixed threshold.

I'm uncertain how to remove the values that are a long way out of the range of the other values in the 10-sample group, because there may be instances where there are two samples that are affected by this glitch. Perhaps there's some other way of fixing this glitchy data instead of just working around it!
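
One way the "drop the outliers, then average" idea could be sketched is to measure each sample against the group's median rather than its mean, since the median is not pulled toward the glitched values as long as fewer than half of the samples are affected. This is only an illustration: `GlitchFilter.AverageWithoutOutliers` and the `tolerance` parameter are hypothetical, and a suitable tolerance would have to be chosen by looking at the real data.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

static class GlitchFilter
{
    // Hypothetical helper: averages the samples that lie within
    // `tolerance` counts of the group's median.
    public static double AverageWithoutOutliers(IReadOnlyList<int> samples, int tolerance)
    {
        if (samples == null || samples.Count == 0)
            throw new ArgumentException("At least one sample is required.", nameof(samples));
        if (tolerance < 0)
            throw new ArgumentOutOfRangeException(nameof(tolerance));

        int[] sorted = samples.OrderBy(s => s).ToArray();

        // Lower median: always one of the samples, so at least
        // one value survives the filter below.
        int median = sorted[(sorted.Length - 1) / 2];

        return sorted.Where(s => Math.Abs(s - median) <= tolerance).Average();
    }
}
```

For example, with the ten samples 30010, 30020, 15900, 30000, 30030, 15905, 30015, 30025, 30005, 30035 and a tolerance of 1000, the two values near 15900 are discarded and the remaining eight average to 30017.5.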

What is the best way of doing this? Here's my code so far (this is inside the DataReceivedEvent method):

    SerialPort sp = (SerialPort)sender; //set up serial port
    byte[] spBuffer = new byte[4];
    int indata = 0;

    sp.Read(spBuffer, 0, 4);
    indata = BitConverter.ToUInt16(spBuffer, 1);

    object[] o = { numSamples, nudDutyCycle.Value, freqMultiplied, nudDistance.Value, pulseWidth, indata };
    lock (dt) //lock for multithread safety
    {
        dt.Rows.Add(o); //add data to datatable
    }
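
The code above assumes that every call to `Read` returns exactly one complete frame. `SerialPort.Read` returns the number of bytes it actually read, which can be fewer than the count requested, so the four bytes in `spBuffer` are not guaranteed to line up with a frame. A possible alternative to filtering afterwards, sketched here under the same little-endian assumption that `BitConverter` makes on x86/x64, is to buffer the incoming bytes and only accept a value when '<' and '>' sit in the right positions. `FrameAccumulator` is a hypothetical helper, not an existing class.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: collects bytes across reads and extracts
// complete '<' lo hi '>' frames, resynchronizing when misaligned.
class FrameAccumulator
{
    const byte Start = 0x3C; // '<'
    const byte End = 0x3E;   // '>'
    private readonly List<byte> pending = new List<byte>();

    // Pass in the buffer and the byte count a read returned;
    // returns every complete value found so far.
    public List<ushort> Append(byte[] data, int count)
    {
        for (int i = 0; i < count; i++) pending.Add(data[i]);

        var values = new List<ushort>();
        int pos = 0;
        while (pending.Count - pos >= 4)
        {
            if (pending[pos] == Start && pending[pos + 3] == End)
            {
                // Assumption: low byte first (little-endian).
                values.Add((ushort)(pending[pos + 1] | (pending[pos + 2] << 8)));
                pos += 4;
            }
            else
            {
                pos++; // out of sync: skip one byte and try again
            }
        }
        pending.RemoveRange(0, pos); // keep any partial frame for the next call
        return values;
    }
}
```

In the handler this would be used roughly as `int n = sp.Read(buf, 0, buf.Length);` followed by a loop over `accumulator.Append(buf, n)`, with one accumulator instance kept for the lifetime of the port. Because the payload bytes can themselves equal 0x3C or 0x3E, the delimiter check can occasionally lock onto the wrong position, so this reduces misaligned reads but is not a guarantee.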