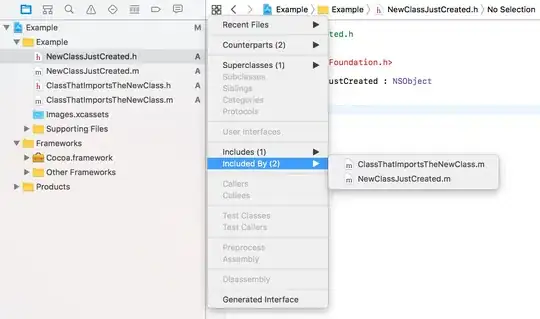

We've been asked to do 3D reconstruction (a master's module for my PhD), and I'm pulling my hair out. I'm not sure whether I'm missing steps or doing them wrong. I've tried finding code online and swapping their functions in for mine, just to see whether I can get correct results that way, but I can't.

I'll go through the steps I'm doing so far, and I hope one of you can tell me I'm missing something obvious:

Images I'm using: https://i.stack.imgur.com/44vdI.jpg

Load the left and right calibration images and click on corresponding points to get the camera matrices P1 and P2.
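If the clicked points are image positions of calibration-object points whose 3D coordinates are known, P1 and P2 would typically come from the direct linear transform (DLT). Below is a minimal NumPy sketch of that, assuming an (N, 3) array of known world points and the matching (N, 2) clicked pixels. The function name `camera_matrix_dlt` is my own. For real, noisy clicks, normalising both point sets first (Hartley-style) usually improves the estimate noticeably.

```python
import numpy as np

def camera_matrix_dlt(X, x):
    """Estimate a 3x4 camera matrix P from N >= 6 world/image correspondences.

    X: (N, 3) known 3D calibration points (world coordinates).
    x: (N, 2) clicked pixel coordinates of the same points.
    """
    X = np.asarray(X, dtype=float)
    x = np.asarray(x, dtype=float)
    n = X.shape[0]
    if n < 6:
        raise ValueError("need at least 6 correspondences")
    Xh = np.hstack([X, np.ones((n, 1))])          # homogeneous 3D points
    rows = []
    for Xi, (u, v) in zip(Xh, x):
        # x ~ P X gives two linear equations in the 12 entries of P per point.
        rows.append(np.concatenate([np.zeros(4), -Xi, v * Xi]))
        rows.append(np.concatenate([Xi, np.zeros(4), -u * Xi]))
    A = np.array(rows)
    # Least-squares solution of A p = 0: right singular vector of the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    P = Vt[-1].reshape(3, 4)
    return P / np.linalg.norm(P)                  # P is only defined up to scale
```

The points must not all lie on one plane, otherwise this linear system is degenerate.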

Use RQ decomposition to get K1 and K2 (and R1, R2, t1, t2, but I don't seem to use those anywhere. Originally I tried using `R = R1*R2'` and `t = t2-t1` to build a new P2 after setting P1 to the canonical (I|0), but that didn't work either).
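A NumPy sketch of the RQ step follows. NumPy has no built-in RQ, so this builds one from `np.linalg.qr`; the function names are hypothetical. It also covers the relative pose: with P1 = K1[R1 | t1] and P2 = K2[R2 | t2], camera 2 relative to camera 1 is R = R2·R1ᵀ and t = t2 - R·t1, which differs from `R1*R2'` and `t2-t1`. The second camera is then K2[R | t] when the first is K1[I | 0].

```python
import numpy as np

def rq(M):
    """RQ decomposition M = K @ R with K upper triangular (positive diagonal), R orthogonal."""
    E = np.flipud(np.eye(M.shape[0]))     # exchange (row-reversal) matrix
    Q, U = np.linalg.qr((E @ M).T)        # QR of the flipped, transposed matrix
    K = E @ U.T @ E                       # upper triangular
    R = E @ Q.T                           # orthogonal
    D = np.diag(np.sign(np.diag(K)))      # flip signs so diag(K) > 0; (K D)(D R) = K R
    return K @ D, D @ R

def decompose_camera(P):
    """Split P ~ K [R | t] into intrinsics K (K[2, 2] = 1), rotation R and translation t."""
    P = np.asarray(P, dtype=float)
    if np.linalg.det(P[:, :3]) < 0:       # P is defined up to scale, including sign
        P = -P
    K, R = rq(P[:, :3])
    t = np.linalg.solve(K, P[:, 3])       # solve before rescaling K, so the scale cancels
    return K / K[2, 2], R, t

def relative_pose(R1, t1, R2, t2):
    """Pose of camera 2 in camera 1's frame, so that P1 = K1[I|0] and P2 = K2[R|t]."""
    R = R2 @ R1.T
    t = t2 - R @ t1
    return R, t
```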

Set P1 to the canonical camera (I | 0).

Calculate the fundamental matrix F and the corresponding points im1, im2 using RANSAC.
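The core of this step is usually the normalised eight-point algorithm: RANSAC repeatedly fits it to random 8-match samples and keeps the F with the most inliers. This NumPy sketch shows the fit plus the Sampson distance commonly used as the inlier test. The RANSAC loop itself is omitted, and the function names are my own. It assumes the convention x2ᵀ F x1 = 0 with x1 from the left image.

```python
import numpy as np

def normalise(pts):
    """Hartley normalisation: move the centroid to the origin, mean distance sqrt(2)."""
    pts = np.asarray(pts, dtype=float)
    c = pts.mean(axis=0)
    s = np.sqrt(2) / np.linalg.norm(pts - c, axis=1).mean()
    T = np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]])
    return np.hstack([pts, np.ones((len(pts), 1))]) @ T.T, T

def eight_point(x1, x2):
    """F with x2_h^T F x1_h = 0, from N >= 8 pixel matches x1 (image 1), x2 (image 2)."""
    p1, T1 = normalise(x1)
    p2, T2 = normalise(x2)
    if len(p1) < 8:
        raise ValueError("need at least 8 matches")
    # One row of A f = 0 per match, with f = F.ravel() in row-major order.
    A = np.column_stack([
        p2[:, 0] * p1[:, 0], p2[:, 0] * p1[:, 1], p2[:, 0],
        p2[:, 1] * p1[:, 0], p2[:, 1] * p1[:, 1], p2[:, 1],
        p1[:, 0], p1[:, 1], np.ones(len(p1)),
    ])
    F = np.linalg.svd(A)[2][-1].reshape(3, 3)
    U, S, Vt = np.linalg.svd(F)           # enforce rank 2
    F = U @ np.diag([S[0], S[1], 0.0]) @ Vt
    F = T2.T @ F @ T1                     # undo the normalisation
    return F / np.linalg.norm(F)

def sampson_distance(F, x1, x2):
    """First-order geometric error per match (pixels^2); threshold it inside RANSAC."""
    h1 = np.hstack([np.asarray(x1, float), np.ones((len(x1), 1))])
    h2 = np.hstack([np.asarray(x2, float), np.ones((len(x2), 1))])
    Fx1 = h1 @ F.T                        # row i is F @ x1_i (epipolar line in image 2)
    Ftx2 = h2 @ F                         # row i is F.T @ x2_i (epipolar line in image 1)
    num = np.sum(h2 * Fx1, axis=1) ** 2
    den = Fx1[:, 0] ** 2 + Fx1[:, 1] ** 2 + Ftx2[:, 0] ** 2 + Ftx2[:, 1] ** 2
    return num / den
```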

Get the colour of the pixels at those points.
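A common slip here is the axis order. Point coordinates are (x, y), but an image array is indexed (row, column) = (y, x). In MATLAB that means `img(round(y), round(x), :)` with 1-based indices. Below is a small 0-based NumPy sketch; `colours_at` is a hypothetical helper name.

```python
import numpy as np

def colours_at(image, pts):
    """RGB colour at each (x, y) point; the image is indexed [row, col] = [y, x]."""
    pts = np.asarray(pts, dtype=float)
    h, w = image.shape[:2]
    cols = np.clip(np.rint(pts[:, 0]).astype(int), 0, w - 1)   # x -> column index
    rows = np.clip(np.rint(pts[:, 1]).astype(int), 0, h - 1)   # y -> row index
    return image[rows, cols]
```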

Get the essential matrix E as K2' * F * K1.
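`K2' * F * K1` is correct when F satisfies x2ᵀ F x1 = 0 with x1 in the left image. If F follows the transposed convention (x1ᵀ F x2 = 0), the roles of K1 and K2 swap, so it is worth checking which one your F uses. Also, an E built from a noisy F generally lacks the (σ, σ, 0) singular values a true essential matrix has. For that reason it is common to project it onto that form before decomposing it. A NumPy sketch (function name hypothetical):

```python
import numpy as np

def essential_from_fundamental(F, K1, K2):
    """E = K2^T F K1, projected onto the nearest matrix with singular values (1, 1, 0)."""
    E = K2.T @ F @ K1
    U, _, Vt = np.linalg.svd(E)
    # E is only defined up to scale, so (1, 1, 0) is as good as (s, s, 0).
    return U @ np.diag([1.0, 1.0, 0.0]) @ Vt
```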

Get the four candidate projection matrices from E, then select the right one.
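This is a sketch of the usual SVD construction of the four (R, t) candidates. It keeps the one that puts the most triangulated points in front of both cameras (the cheirality test). It assumes the matches are in normalised coordinates, i.e. K⁻¹ applied to homogeneous pixel points, and that E satisfies n2ᵀ E n1 = 0. The helper names are hypothetical.

```python
import numpy as np

def triangulate_one(P1, P2, x1, x2):
    """Linear (DLT) triangulation of a single match; returns a Euclidean 3D point."""
    A = np.array([x1[0] * P1[2] - P1[0], x1[1] * P1[2] - P1[1],
                  x2[0] * P2[2] - P2[0], x2[1] * P2[2] - P2[1]])
    X = np.linalg.svd(A)[2][-1]
    return X[:3] / X[3]

def choose_pose(E, n1, n2):
    """Pick the (R, t) from E that puts the most points in front of both cameras.

    n1, n2: (N, 2) matches in normalised coordinates (K^-1 applied to pixels).
    """
    U, _, Vt = np.linalg.svd(E)
    # Make U and V proper rotations; this only flips the sign of E, which is harmless.
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
    u3 = U[:, 2]
    candidates = [(U @ W @ Vt, u3), (U @ W @ Vt, -u3),
                  (U @ W.T @ Vt, u3), (U @ W.T @ Vt, -u3)]
    P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
    best, best_count = None, -1
    for R, t in candidates:
        P2 = np.hstack([R, t[:, None]])
        count = 0
        for a, b in zip(n1, n2):
            X = triangulate_one(P1, P2, a, b)
            if X[2] > 0 and (R @ X + t)[2] > 0:   # positive depth in both views
                count += 1
        if count > best_count:
            best, best_count = (R, t), count
    return best
```

The recovered t has unit length, because the scale of the reconstruction cannot be recovered from E alone. With pixel coordinates the second camera is then K2[R | t].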

Triangulate the matches using P1, P2, im1 and im2 to get the 3D points.
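One thing worth checking is units. If im1 and im2 are pixel coordinates, the cameras used for triangulation need the intrinsics too: P1 = K1[I | 0] and P2 = K2[R | t]. With P1 = [I | 0] and P2 = [R | t] taken straight from E, the points would need normalising with K⁻¹ first. Below is a NumPy sketch of linear (DLT) triangulation under the pixel-coordinate assumption; the function name is hypothetical.

```python
import numpy as np

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of N matches.

    P1, P2: 3x4 cameras in the same units as x1, x2 (pixels here, so they
    must include K: P1 = K1 [I | 0], P2 = K2 [R | t]).
    x1, x2: (N, 2) matched points. Returns (N, 3) Euclidean points.
    """
    X = np.empty((len(x1), 3))
    for i, ((u1, v1), (u2, v2)) in enumerate(zip(x1, x2)):
        # Each view contributes two rows from x cross (P X) = 0.
        A = np.array([u1 * P1[2] - P1[0], v1 * P1[2] - P1[1],
                      u2 * P2[2] - P2[0], v2 * P2[2] - P2[1]])
        Xh = np.linalg.svd(A)[2][-1]
        X[i] = Xh[:3] / Xh[3]             # divide by w before using/plotting the point
    return X
```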

Plot the 3D points with a scatter plot, giving each point the RGB value of its pixel.
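Before plotting, it can help to dehomogenise the points, drop anything behind the first camera, and scale 8-bit colours to [0, 1]. Below is a NumPy sketch. `points_for_scatter` and its `max_depth` cut-off are illustrative choices of my own, not part of any library.

```python
import numpy as np

def points_for_scatter(X, colours, max_depth=None):
    """Prepare triangulated points and their pixel colours for a 3D scatter plot.

    X: (N, 3) or homogeneous (N, 4) points in camera-1 coordinates.
    colours: (N, 3) uint8 RGB values.
    max_depth: optional illustrative cut-off for far-away outliers.
    """
    X = np.asarray(X, dtype=float)
    if X.shape[1] == 4:                   # homogeneous: divide by w
        X = X[:, :3] / X[:, 3:4]
    keep = X[:, 2] > 0                    # drop points behind camera 1
    if max_depth is not None:
        keep &= X[:, 2] < max_depth
    rgb = np.asarray(colours, dtype=float) / 255.0   # colours scaled to [0, 1]
    return X[keep], rgb[keep]
```

With matplotlib, the result could go to `ax.scatter(P[:, 0], P[:, 1], P[:, 2], c=C)` on a 3D axes; in MATLAB the equivalent is `scatter3(P(:,1), P(:,2), P(:,3), 10, C, 'filled')`. Image y points down in the usual camera convention, so the cloud may look upside down until the Y axis is flipped.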

My unsatisfactory result:

At the moment, since I'm not getting ANYWHERE, I'd like to go for the simplest option and work my way up. FYI, I'm using MATLAB. If anyone has any tips at all, I'd really love to hear them.