I'm using OpenCV 3.1.0 to fit a Gaussian mixture model to two-class data using EM. The samples are labeled, so I compute the per-class means and covariances and pass them as initial estimates to EM::trainE. The predicted labels fit the data well, but they are swapped: samples from class 1 are almost always predicted to be in class 0, and vice versa. Here is how the model is trained:

    // Run EM
    Mat predicted_labels(samples.rows, 1, CV_64F);
    Mat means0(EM_CLASS_COUNT, SAMPLE_DIMENSIONS, CV_64F);
    const int sizes[]{ EM_CLASS_COUNT, SAMPLE_DIMENSIONS, SAMPLE_DIMENSIONS };
    Mat covar0(3, sizes, CV_64F);
    for (int i = 0; i < EM_CLASS_COUNT; ++i) {
        calcCovarMatrix(class_samples[i],
                        Mat(SAMPLE_DIMENSIONS, SAMPLE_DIMENSIONS, samples.type(), covar0.row(i).data),
                        means0.row(i), COVAR_NORMAL | COVAR_ROWS);
    }
    Ptr<EM> model = EM::create();
    model->setClustersNumber(EM_CLASS_COUNT);
    model->trainE(samples, means0, covar0, noArray(), noArray(), predicted_labels);

    // Print results
    for (int i = 0; i < csv_data.size(); ++i) {
        printf("(%f, %f, %f): %d -> %d\n",
               samples.at<double>(i, 0), samples.at<double>(i, 1), samples.at<double>(i, 2),
               sample_labels.at<int>(i), predicted_labels.at<int>(i));
    }
    Mat error = (sample_labels != predicted_labels) / 255;
    printf("Error rate: %f\n", norm(error, NORM_L1) / error.rows);
    Mat means = model->getMeans();
    printf("Sample means: 0:(%f, %f, %f), 1:(%f, %f, %f)\n",
           means0.at<double>(0, 0), means0.at<double>(0, 1), means0.at<double>(0, 2),
           means0.at<double>(1, 0), means0.at<double>(1, 1), means0.at<double>(1, 2));
    printf("Calculated means: 0:(%f, %f, %f), 1:(%f, %f, %f)\n",
           means.at<double>(0, 0), means.at<double>(0, 1), means.at<double>(0, 2),
           means.at<double>(1, 0), means.at<double>(1, 1), means.at<double>(1, 2));

The console output shows that the calculated mean for each class is closest to the sample mean of the opposite class:

    Sample means: 0:(184.912913, 192.435435, 185.291291), 1:(149.543210, 150.604938, 129.833333)
    Calculated means: 0:(147.953284, 153.951035, 139.721160), 1:(209.889542, 214.448519, 206.625586)
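To make the comparison concrete, here is a minimal standalone sketch (not part of my program) that matches each calculated mean to the nearest sample mean by Euclidean distance. The helper names `squaredDistance`, `nearestClass`, and `matchComponentsToClasses` are hypothetical, and the numbers are copied from the console output. Nearest-mean matching is only one possible heuristic, and I don't know whether it is the standard way to do this.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;

// Squared Euclidean distance between two 3-D points.
double squaredDistance(const Vec3& a, const Vec3& b) {
    double sum = 0.0;
    for (std::size_t k = 0; k < a.size(); ++k) {
        const double d = a[k] - b[k];
        sum += d * d;
    }
    return sum;
}

// Index of the class mean that lies closest to the given component mean.
std::size_t nearestClass(const Vec3& componentMean,
                         const std::vector<Vec3>& classMeans) {
    assert(!classMeans.empty());
    std::size_t best = 0;
    for (std::size_t c = 1; c < classMeans.size(); ++c) {
        if (squaredDistance(componentMean, classMeans[c]) <
            squaredDistance(componentMean, classMeans[best])) {
            best = c;
        }
    }
    return best;
}

// For every mixture component, the index of the class whose sample mean is
// nearest. Assumption: nearest-mean matching is a heuristic, and two
// components may map to the same class if the fit is poor.
std::vector<std::size_t> matchComponentsToClasses(
        const std::vector<Vec3>& componentMeans,
        const std::vector<Vec3>& classMeans) {
    std::vector<std::size_t> mapping;
    mapping.reserve(componentMeans.size());
    for (const Vec3& m : componentMeans) {
        mapping.push_back(nearestClass(m, classMeans));
    }
    return mapping;
}

// Values copied from the console output ("Sample means" / "Calculated means").
const std::vector<Vec3> kSampleMeans = {
    Vec3{184.912913, 192.435435, 185.291291},
    Vec3{149.543210, 150.604938, 129.833333}};
const std::vector<Vec3> kCalculatedMeans = {
    Vec3{147.953284, 153.951035, 139.721160},
    Vec3{209.889542, 214.448519, 206.625586}};
```

Calling `matchComponentsToClasses(kCalculatedMeans, kSampleMeans)` on these numbers maps component 0 to class 1 and component 1 to class 0, which is the swap I am seeing.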

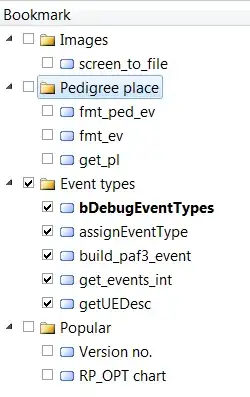

Here is a visualization of the sample data and the trained model, showing swapped classification. Red is class 0, blue is class 1, and covariance retrieval is bugged so the contours are circles rather than ellipses:

Is there a way to ensure that each Gaussian stays associated with the class whose samples it was initialized from? Failing that, is there a standard method to identify which class label belongs to each Gaussian?