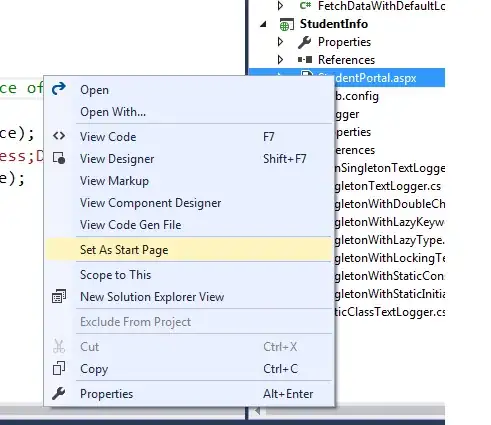

I am trying to approximate a function (the right-hand side of a differential equation) of the form ddx = F(x, dx, u), where x, dx and u are scalars and u is constant, with an RBF neural network. The function F is available only as a black box: I can give it initial values of x and dx and a value of u, and get back x and dx over any time span I want. During training (using sigma-modification), plotting the real dx against the approximated dx gives the following response.

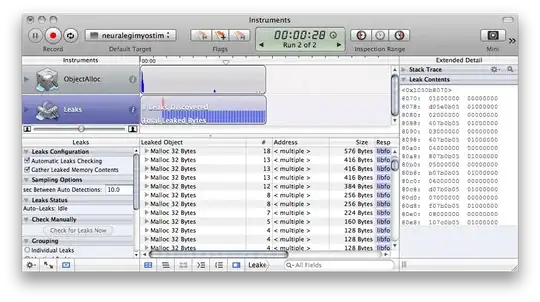

Then I save the parameters of the network (the centers and standard deviations of the Gaussians, and the final weights) and run a simulation with the same initial x, dx and u as before, this time keeping the weights fixed. But I get the following plot.

Is this expected? Am I missing something?

The training code is as follows:

    % load the results I got from the real function
    load sim_data t p pd dp   % p is x, dp is dx and pd is u
    real_states = [p,dp];

    % lower and upper limits of the variables
    p_dl = 0;
    p_ul = 2;
    v_dl = -1;
    v_ul = 4;
    pd_dl = 0;   % pd is constant each time, but the function should work for different pds
    pd_ul = 2;

    % number of Gaussians per dimension
    nc = 15;
    x = p_dl:(p_ul-p_dl)/(nc-1):p_ul;
    dx = v_dl:(v_ul-v_dl)/(nc-1):v_ul;
    pdx = pd_dl:(pd_ul-pd_dl)/(nc-1):pd_ul;

    % centers of the Gaussians
    Cx = combvec(x,dx,pdx);

    % stds of the Gaussians (stored as inverse widths)
    B = ones(1,3)./[2.5*(p_ul-p_dl)/(nc-1),2.5*(v_ul-v_dl)/(nc-1),2.5*(pd_ul-pd_dl)/(nc-1)];

    nw = size(Cx,2);
    wdx = zeros(nw,1);
    state = real_states(1,[1,4]);   % there are also y,dy,dz and z in real_states (ignored here)
    states = zeros(length(t),2);
    timestep = 0.005;

    for step=1:length(t)
        states(step,:) = state;

        % compute the values of the Gaussians
        Sx = exp(-1/2 * sum(((([real_states(step,1);real_states(step,4);pd(1)]*ones(1,nw))'-Cx').*(ones(nw,1)*B)).^2,2));

        ddx = -530*state(2) + wdx'*Sx;
        edx = state(2) - real_states(step,4);
        dwdx = -1200*edx * Sx - 4 * wdx;
        wdx = wdx + dwdx*timestep;
        state = [state(1)+state(2)*timestep,state(2)+ddx*timestep];
    end

    save weights wdx Cx B

    figure
    plot(t,[dp(:,1),states(:,2)])
    legend('x_d_o_t','x_d_o_t_h_a_t')
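
For reference, the training loop above appears to implement the following discrete-time update. This is only a transcription of the code: the symbols $z_k$, $S_i$, $e_k$ and $n_w$ are notation introduced here, hats denote the simulated `state`, unhatted quantities are the measured `real_states`, and $\Delta t$ is `timestep`.

$$
\begin{aligned}
z_k &= \begin{bmatrix} x_k & \dot{x}_k & u \end{bmatrix}^{\top} \\
S_i(z_k) &= \exp\!\Big(-\tfrac{1}{2}\sum_{j=1}^{3} \big(B_j\,(z_{k,j} - C_{j,i})\big)^2\Big), \quad i = 1,\dots,n_w \\
\hat{\ddot{x}}_k &= -530\,\hat{\dot{x}}_k + w_k^{\top} S(z_k) \\
e_k &= \hat{\dot{x}}_k - \dot{x}_k \\
w_{k+1} &= w_k + \Delta t\,\big(-1200\,e_k\,S(z_k) - 4\,w_k\big) \\
\hat{x}_{k+1} &= \hat{x}_k + \Delta t\,\hat{\dot{x}}_k, \qquad \hat{\dot{x}}_{k+1} = \hat{\dot{x}}_k + \Delta t\,\hat{\ddot{x}}_k
\end{aligned}
$$

The $-4\,w_k$ term is the sigma-modification leakage. One thing that may be worth checking: substituting the third line into the last gives $\hat{\dot{x}}_{k+1} = (1 - 530\,\Delta t)\,\hat{\dot{x}}_k + \Delta t\, w_k^{\top} S(z_k)$, and with $\Delta t = 0.005$ the factor is $1 - 2.65 = -1.65$, whose magnitude is greater than 1. Whether the weight adaptation masks this during training is an assumption, not something verified here.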

The code used to verify the approximation is the following:

    load sim_data t p pd dp
    real_states = [p,dp];
    load weights wdx Cx B

    nw = size(Cx,2);
    state = real_states(1,[1,4]);
    states = zeros(length(t),2);
    timestep = 0.005;

    for step=1:length(t)
        states(step,:) = state;

        Sx = exp(-1/2 * sum(((([real_states(step,1);real_states(step,4);pd(1)]*ones(1,nw))'-Cx').*(ones(nw,1)*B)).^2,2));

        ddx = -530*state(2) + wdx'*Sx;
        state = [state(1)+state(2)*timestep,state(2)+ddx*timestep];
    end

    figure
    plot(t,[dp(:,1),states(:,2)])
    legend('x_d_o_t','x_d_o_t_h_a_t')
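
Both scripts share the long `Sx` expression and the `combvec` grid, so a smaller standalone version may make them easier to check. The sketch below is an illustration under assumptions, not part of the original code: the 3-point grid, the handle name `rbf`, the test input `z` and the variable `S_post` are hypothetical. It builds the centers with `ndgrid` (which should give the same column ordering as `combvec`, first input varying fastest, without needing the toolbox that provides `combvec`) and compares a `bsxfun`-based activation against the expression used above.

```matlab
% Small illustrative grid (hypothetical sizes, not the 15-point grid above)
x   = linspace(0, 2, 3);
dx  = linspace(-1, 4, 3);
pdx = linspace(0, 2, 3);

% Centers as a 3 x nw matrix; the first input varies fastest
[X, DX, PD] = ndgrid(x, dx, pdx);
Cx = [X(:)'; DX(:)'; PD(:)'];
nw = size(Cx, 2);

% Inverse widths, same rule as above: 1 / (2.5 * grid spacing)
B = 1 ./ (2.5 * [x(2)-x(1), dx(2)-dx(1), pdx(2)-pdx(1)]);

% Gaussian activations for one input z (3 x 1); returns an nw x 1 vector
rbf = @(z, Cx, B) exp(-0.5 * sum(bsxfun(@times, bsxfun(@minus, z(:), Cx), B(:)).^2, 1)).';

z = [1; 1.5; 1];   % chosen to coincide with the middle center of this grid
S = rbf(z, Cx, B);

% Same quantity computed with the expression used in the scripts above
S_post = exp(-1/2 * sum((((z*ones(1,nw))'-Cx').*(ones(nw,1)*B)).^2, 2));

% Largest difference between the two formulations
max_diff = max(abs(S - S_post));
```

If `max_diff` is at rounding level, `rbf` could replace the inline expression in both loops, which makes it easier to see that the two scripts evaluate the basis functions on the same inputs.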