I'm using sharded counters (https://cloud.google.com/appengine/articles/sharding_counters) in my GAE application for performance reasons, but I'm having trouble understanding why reading them is so slow and how I can speed it up.

Background

I have an API that fetches 20 objects at a time and, for each object, reads the total from a sharded counter to include in the response.

Metrics

With Appstats turned on and a cleared cache, I see that reading the totals for 20 counters makes 120 datastore_v3.Get RPCs, which take 2500 ms in total.

Thoughts

That seems like a lot of RPCs, and a lot of time, for reading just 20 counters. I assumed this would be faster, and maybe that's where I'm wrong. Should it be faster than this?

Further Inspection

I dug into the stats a bit more, looking at these two lines in the get_count method:

```python
all_keys = GeneralCounterShardConfig.all_keys(name)
for counter in ndb.get_multi(all_keys):
    ...  # loop body omitted here
```
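To make the cost model concrete, here is a toy, in-memory sketch of what those two lines do for a single counter. It does not use NDB: `FakeStore`, `shard_keys`, and `toy_get_count` are hypothetical stand-ins. It assumes that all_keys reads one config entity per counter (which would match the 20 RPCs left over when get_multi is commented out) and that one batched lookup costs one round trip. Shards that were never written come back as None, as they do from ndb.get_multi.

```python
class FakeStore:
    """Hypothetical in-memory stand-in for the datastore (not the NDB API)."""

    def __init__(self, entities):
        self.entities = entities  # key -> stored value
        self.rpcs = 0             # simulated round trips

    def get(self, key):
        self.rpcs += 1
        return self.entities.get(key)

    def get_multi(self, keys):
        # Assumption: the whole batch costs one round trip; missing keys -> None.
        self.rpcs += 1
        return [self.entities.get(k) for k in keys]


def shard_keys(store, name):
    # Hypothetical stand-in for GeneralCounterShardConfig.all_keys(name):
    # one lookup for the counter's config (its shard count), then one key per shard.
    num_shards = store.get(("config", name))
    return [("shard", name, i) for i in range(num_shards)]


def toy_get_count(store, name):
    total = 0
    for count in store.get_multi(shard_keys(store, name)):
        if count is not None:  # skip shards that don't exist yet
            total += count
    return total


store = FakeStore({
    ("config", "likes"): 4,
    ("shard", "likes", 0): 3,
    ("shard", "likes", 2): 5,
})
print(toy_get_count(store, "likes"), store.rpcs)  # 8 2
```

Under these assumptions a counter costs two round trips, which is the gap between this model and the 6 Get RPCs per counter that Appstats reports.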

If I comment out the get_multi line, I see 20 datastore_v3.Get RPCs totaling 185 ms.

As expected, this leaves get_multi responsible for the remaining 100 datastore_v3.Get RPCs, which take upwards of 2500 ms. I verified this, but this is where I'm confused: why does calling get_multi with 20 keys cause 100 RPCs?
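The numbers above can be split per counter with simple arithmetic. This assumes get_count, and therefore get_multi, runs once per counter, so 20 get_multi calls share the 100 RPCs. The result (6 Get RPCs per counter) matches the difference measured in Update #2.

```python
# RPC counts reported by Appstats (cleared cache).
counters = 20
total_get_rpcs = 120          # full get_count for all 20 counters
without_get_multi_rpcs = 20   # same run with the get_multi line commented out

# Whatever disappears when get_multi is removed is attributed to it.
get_multi_rpcs = total_get_rpcs - without_get_multi_rpcs

# Assumption: one get_count (and one get_multi) call per counter.
per_counter_get_multi = get_multi_rpcs // counters
per_counter_other = without_get_multi_rpcs // counters
per_counter_total = per_counter_get_multi + per_counter_other

print(get_multi_rpcs, per_counter_get_multi, per_counter_total)  # 100 5 6
```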

Update #1

I checked Traces in the GAE console and found some additional information. It also shows a breakdown of the RPCs, and its insights recommend: "Batch the gets to reduce the number of remote procedure calls." I'm not sure how to do that other than with get_multi, which I thought already did the job. Any advice?
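One reading of the Trace insight is to batch across counters rather than only within each one: build the shard keys for all 20 counters first, issue a single lookup, then regroup the results by counter. The sketch below models that with a hypothetical in-memory `FakeStore` that only counts batched calls; it is not NDB. In NDB terms the single lookup would be one ndb.get_multi call over every counter's shard keys (NDB also provides ndb.get_multi_async for starting lookups before waiting on them). Whether this actually lowers the datastore_v3.Get count in this app is untested, and the per-counter config reads inside all_keys would stay separate unless they were batched too.

```python
class FakeStore:
    """Hypothetical in-memory stand-in for the datastore (not the NDB API)."""

    def __init__(self, entities):
        self.entities = entities
        self.batches = 0  # number of batched lookups issued

    def get_multi(self, keys):
        self.batches += 1
        return [self.entities.get(k) for k in keys]


def get_counts_per_counter(store, shard_keys_by_name):
    # Current pattern: one batched lookup per counter.
    return {
        name: sum(v for v in store.get_multi(keys) if v is not None)
        for name, keys in shard_keys_by_name.items()
    }


def get_counts_batched(store, shard_keys_by_name):
    # Flatten every counter's shard keys into one list, remembering each key's owner.
    owners, keys = [], []
    for name, name_keys in shard_keys_by_name.items():
        owners.extend([name] * len(name_keys))
        keys.extend(name_keys)
    totals = {name: 0 for name in shard_keys_by_name}
    # One lookup for everything, then regroup the values by counter name.
    for name, value in zip(owners, store.get_multi(keys)):
        if value is not None:
            totals[name] += value
    return totals


# 20 hypothetical counters with 20 shards each; only every other shard exists.
names = [f"counter-{n}" for n in range(20)]
shard_keys_by_name = {name: [(name, i) for i in range(20)] for name in names}
entities = {(name, i): 1 for name in names for i in range(0, 20, 2)}

store_a, store_b = FakeStore(entities), FakeStore(entities)
per = get_counts_per_counter(store_a, shard_keys_by_name)
batched = get_counts_batched(store_b, shard_keys_by_name)
print(per == batched, store_a.batches, store_b.batches)  # True 20 1
```

Both functions return the same totals; the difference is only how many lookups are issued.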

Update #2

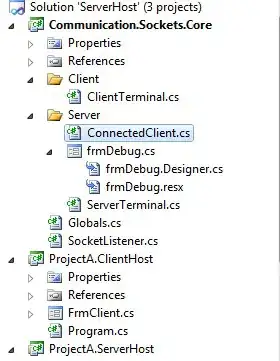

Here are some screenshots of the stats I'm looking at. The first is my baseline: the function without any counter operations. The second is after a single call to get_count for one counter. The difference between them is 6 datastore_v3.Get RPCs.

After Calling get_count On One Counter

Update #3

At Patrick's request, I'm adding a screenshot from the console's Trace tool.