I am using Delphi XE. My laptop has two graphics cards (one Intel, the other NVIDIA).

I want my program to use the more powerful graphics card, which in my case is the NVIDIA one.

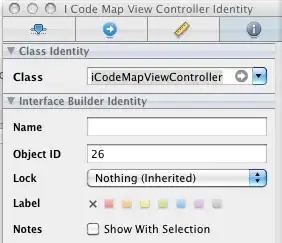

Some other programs get this automatically, as you can see below:

It looks like the graphics driver (or Windows) decides that this game should use the better graphics card.

So how can I choose programmatically which graphics card my program uses?