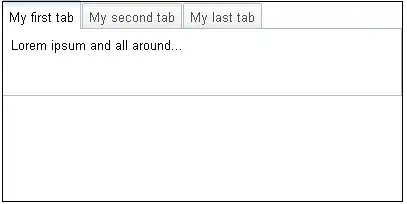

The snapshot above shows the health issues reported in Cloudera Manager (CM). The set of datanodes in the list keeps changing. Here are some errors from the datanode logs:

    3:59:31.859 PM ERROR org.apache.hadoop.hdfs.server.datanode.DataNode
    datanode05.hadoop.com:50010:DataXceiver error processing WRITE_BLOCK operation src: /10.248.200.113:45252 dest: /10.248.200.105:50010
    java.io.IOException: Premature EOF from inputStream
        at org.apache.hadoop.io.IOUtils.readFully(IOUtils.java:194)
        at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doReadFully(PacketReceiver.java:213)
        at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doRead(PacketReceiver.java:134)
        at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.receiveNextPacket(PacketReceiver.java:109)
        at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receivePacket(BlockReceiver.java:414)
        at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receiveBlock(BlockReceiver.java:635)
        at org.apache.hadoop.hdfs.server.datanode.DataXceiver.writeBlock(DataXceiver.java:564)
        at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.opWriteBlock(Receiver.java:103)
        at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.processOp(Receiver.java:67)
        at org.apache.hadoop.hdfs.server.datanode.DataXceiver.run(DataXceiver.java:221)
        at java.lang.Thread.run(Thread.java:662)

    5:46:03.606 PM INFO org.apache.hadoop.hdfs.server.datanode.DataNode
    Exception for BP-846315089-10.248.200.4-1369774276029:blk_-780307518048042460_200374997
    java.net.SocketTimeoutException: 60000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/10.248.200.105:50010 remote=/10.248.200.122:43572]
        at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:165)
        at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:156)
        at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:129)
        at java.io.BufferedInputStream.fill(BufferedInputStream.java:218)
        at java.io.BufferedInputStream.read1(BufferedInputStream.java:258)
        at java.io.BufferedInputStream.read(BufferedInputStream.java:317)
        at java.io.DataInputStream.read(DataInputStream.java:132)
        at org.apache.hadoop.io.IOUtils.readFully(IOUtils.java:192)
        at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doReadFully(PacketReceiver.java:213)
        at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doRead(PacketReceiver.java:134)
        at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.receiveNextPacket(PacketReceiver.java:109)
        at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receivePacket(BlockReceiver.java:414)
        at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receiveBlock(BlockReceiver.java:635)
        at org.apache.hadoop.hdfs.server.datanode.DataXceiver.writeBlock(DataXceiver.java:564)
        at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.opWriteBlock(Receiver.java:103)
        at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.processOp(Receiver.java:67)
        at org.apache.hadoop.hdfs.server.datanode.DataXceiver.run(DataXceiver.java:221)
        at java.lang.Thread.run(Thread.java:662)

I am unable to figure out the root cause. I can manually connect from one datanode to another without problems, so I don't believe it is a network issue. Also, the missing-block and under-replicated-block counts keep fluctuating up and down.
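For anyone who wants to repeat the manual connection check across several nodes, here is a minimal sketch. The function name `check_datanode_port` and the 5-second timeout are made up for illustration; port 50010 is the data transfer port that appears in the logs above. Keep in mind that a successful TCP connect only shows the port is reachable; it says nothing about whether a long block transfer on that connection would stall.

```python
import socket
import time


def check_datanode_port(host, port=50010, timeout=5.0):
    """Try a TCP connect to host:port and return (reachable, seconds_taken).

    Hypothetical helper for illustration; 50010 is the datanode data
    transfer port seen in the log lines of this post.
    """
    start = time.monotonic()
    try:
        # create_connection raises OSError (including timeouts) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True, time.monotonic() - start
    except OSError:
        return False, time.monotonic() - start


# Example use, run from one datanode against another:
#   ok, elapsed = check_datanode_port("10.248.200.105")
#   print(f"reachable={ok} connect_time={elapsed:.3f}s")
```

Running it from each datanode against the peers named in the error messages would at least confirm whether connect times are consistently low.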

Cloudera Manager: Cloudera Standard 4.8.1

CDH 4.7

Any help in resolving this issue is appreciated.

Update: Jan 01, 2016

For the datanodes listed as bad, when I look at the datanode logs, I see this message a lot:

    11:58:30.066 AM INFO org.apache.hadoop.hdfs.server.datanode.DataNode
    Receiving BP-846315089-10.248.200.4-1369774276029:blk_-706861374092956879_36606459 src: /10.248.200.123:56795 dest: /10.248.200.112:50010

Why is this datanode receiving so many blocks from other datanodes at around the same time? It seems that, because of this activity, the datanode cannot respond to the namenode's requests in time and therefore times out. All bad datanodes show the same pattern.
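To put numbers on that pattern, a small script can count the "Receiving" messages per sending host in a datanode log. This is only a sketch: the regular expression is written against the message text shown above, and the function name `count_receives_by_source` and the log path in the usage comment are hypothetical.

```python
import re
from collections import Counter

# Matches the message text shown above, e.g.
# "Receiving BP-...:blk_..._... src: /10.248.200.123:56795 dest: /10.248.200.112:50010"
RECEIVING = re.compile(
    r"Receiving (?P<block>\S+) "
    r"src: /(?P<src>[\d.]+):\d+ dest: /(?P<dest>[\d.]+):\d+"
)


def count_receives_by_source(lines):
    """Count 'Receiving' log messages per source IP address."""
    counts = Counter()
    for line in lines:
        match = RECEIVING.search(line)
        if match:
            counts[match.group("src")] += 1
    return counts


# Example use (the path is an assumption, adjust to the actual log file):
#   with open("/path/to/datanode.log") as log:
#       for src, n in count_receives_by_source(log).most_common(10):
#           print(src, n)
```

If a handful of sources dominate the counts on every bad datanode, that would support the idea that a burst of incoming block writes is what keeps those nodes busy.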