I have one illogical problem that I just can't figure out.

I am doing a complex query. After I did a little change it began executing over 2 minutes instead of one second. Can someone explain to me how is this even possible? What could be the background of this?

First query

DECLARE @CRUISE_ID int = 10001890 --:CRUISE_ID

SELECT

/* ... */

FROM Cruise_Itinerary with(nolock)

INNER JOIN Cruise with(nolock) ON Cruise_Itinerary.CRUISE_ID = Cruise.CRUISE_ID

AND (Cruise.CRUISE_ID = @CRUISE_ID)

/* ... */

Second query

DECLARE @CRUISE_ID int = 10001890 --:CRUISE_ID

SELECT

/* ... */

FROM Cruise_Itinerary with(nolock)

INNER JOIN Cruise with(nolock) ON Cruise_Itinerary.CRUISE_ID = Cruise.CRUISE_ID

AND (@CRUISE_ID is null OR Cruise.CRUISE_ID = @CRUISE_ID)

/* ... */

The first query executes in one second but the second one takes over 2 minutes to execute. I just don't get it. What is a difference between

AND (10001890 is null OR Cruise.CRUISE_ID = 10001890)

and

AND (@CRUISE_ID is null OR Cruise.CRUISE_ID = @CRUISE_ID)?

Variable @CRUISE_ID has no other occurrences is the entire query.

Edit: I figured it out with help of my colleagues and you guys.

Here is a good explanation what is going on: http://sqlinthewild.co.za/index.php/2009/03/19/catch-all-queries/

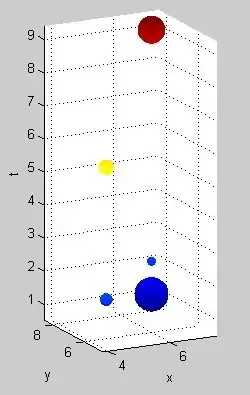

The optimal plan differs completely depending on what parameters are passed. The optimizer can't tell that and it plays safe. It creates plans that will always work. That’s (one of the reasons) why in the first example it was an index scan, not an index seek.

We can see it from the execution plan of the second query that the index scan happened at the end of plan. I checked. It takes over 2 minutes to execute if I remove this the whole condition.