Stay with the tried and trusted macros, even if we all knows that macros are to be avoided in general. The inline functions simply don't work. Alternatively – especially if you are using GCC – forget __builtin_expect altogether and use profile-guided optimization (PGO) with actual profiling data instead.

The __builtin_expect is quite special in that it doesn't actually “do” anything but merely hints the compiler towards what branch will most likely be taken. If you use the built-in in a context that is not a branching condition, the compiler would have to propagate this information along with the value. Intuitively, I would have expected this to happen. Interestingly, the documentation of GCC and Clang is not very explicit about this. However, my experiments show that Clang is obviously not propagating this information. As for GCC, I still have to find a program where it actually pays attention to the built-in so I cannot tell for sure. (Or, in other words, it doesn't matter anyway.)

I have tested the following function.

std::size_t

do_computation(std::vector<int>& numbers,

const int base_threshold,

const int margin,

std::mt19937& rndeng,

std::size_t *const hitsptr)

{

assert(base_threshold >= margin && base_threshold <= INT_MAX - margin);

assert(margin > 0);

benchmark::clobber_memory(numbers.data());

const auto jitter = make_jitter(margin - 1, rndeng);

const auto threshold = base_threshold + jitter;

auto count = std::size_t {};

for (auto& x : numbers)

{

if (LIKELY(x > threshold))

{

++count;

}

else

{

x += (1 - (x & 2));

}

}

benchmark::clobber_memory(numbers.data());

// My benchmarking framework swallows the return value so this trick with

// the pointer was needed to get out the result. It should have no effect

// on the measurement.

if (hitsptr != nullptr)

*hitsptr += count;

return count;

}

make_jitter simply returns a random integer in the range [−m, m] where m is its first argument.

int

make_jitter(const int margin, std::mt19937& rndeng)

{

auto rnddist = std::uniform_int_distribution<int> {-margin, margin};

return rnddist(rndeng);

}

benchmark::clobber_memory is a no-op that denies the compiler to optimize the modifications of the vector's data away. It is implemented like this.

inline void

clobber_memory(void *const p) noexcept

{

asm volatile ("" : : "rm"(p) : "memory");

}

The declaration of do_computation was annotated with __attribute__ ((hot)). It turned out that this influences how much optimizations the compiler applies a lot.

The code for do_computation was crafted such that either branch had comparable cost, giving slightly more cost to the case where the expectation was not met. It was also made sure that the compiler would not generate a vectorized loop for which branching would be immaterial.

For the benchmark, a vector numbers of 100 000 000 random integers from the range [0, INT_MAX] and a random base_threshold form the interval [0, INT_MAX − margin] (with margin set to 100) was generated with a non-deterministically seeded pseudo random number generator. do_computation(numbers, base_threshold, margin, …) (compiled in a separate translation unit) was called four times and the execution time for each run measured. The result of the first run was discarded to eliminate cold-cache effects. The average and standard deviation of the remaining runs was plotted against the hit-rate (the relative frequency with which the LIKELY annotation was correct). The “jitter” was added to make the outcome of the four runs not the same (otherwise, I'd be afraid of too smart compilers) while still keeping the hit-rates essentially fixed. 100 data points were collected in this way.

I have compiled three different versions of the program with both GCC 5.3.0 and Clang 3.7.0 passing them the -DNDEBUG, -O3 and -std=c++14 flags. The versions differ only in the way LIKELY is defined.

// 1st version

#define LIKELY(X) static_cast<bool>(X)

// 2nd version

#define LIKELY(X) __builtin_expect(static_cast<bool>(X), true)

// 3rd version

inline bool

LIKELY(const bool x) noexcept

{

return __builtin_expect(x, true);

}

Although conceptually three different versions, I have compared 1st versus 2nd and 1st versus 3rd. The data for 1st was therefore essentially collected twice. 2nd and 3rd are referred to as “hinted”

in the plots.

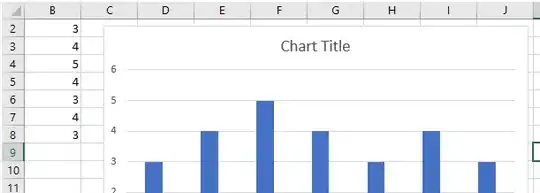

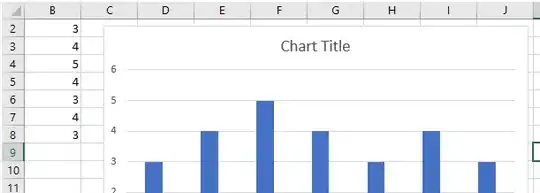

The horizontal axis of the following plots shows the hit-rate for the LIKELY annotation and the vertical axis shows the averaged CPU time per iteration of the loop.

Here is the plot for 1st versus 2nd.

As you can see, GCC effectively ignores the hint, producing equally performing code regardless whether the hint was given or not. Clang, on the other hand, clearly pays attention to the hint. If the hit-rate drops low (ie, the hint was wrong), the code is penalized but for high hit-rates (ie, the hint was good), the code outperforms the one generated by GCC.

In case you are wondering about the hill-shaped nature of the curve: that's the hardware branch predictor at work! It has nothing to do with the compiler. Also note how this effect completely dwarfs the effects of the __builtin_expect, which might be a reason for not worrying too much about it.

In contrast, here is the plot for 1st versus 3rd.

Both compilers produce code that essentially performs equal. For GCC, this doesn't say much but as far as Clang is concerned, the __builtin_expect doesn't seem to be taken into account when wrapped in a function which makes it loose against GCC for all hit-rates.

So, in conclusion, don't use functions as wrappers. If the macro is written correctly, it is not dangerous. (Apart from polluting the name-space.) __builtin_expect already behaves (at least as far as evaluation of its arguments is concerned) like a function. Wrapping a function call in a macro has no surprising effects on the evaluation of its argument.

I realize that this wasn't your question so I'll keep it short but in general, prefer collecting actual profiling data over guessing likely branches by hand. The data will be more accurate and GCC will pay more attention to it.