The problem description is here:

Asked by user5694458 (edited by Enamul Hassan). Viewed 163 times.

That doesn't really work either. In any case, I suspect this question would work better on mathoverflow or cs.stackexchange.com – harold Dec 18 '15 at 10:09

Since your problem is convex, bounded from below, and contains no additional constraints, any local optimum is global and can be found at a stationary point, namely where the gradient of your function is zero. Look into "sufficient conditions of local optimality": since you've already shown that the gradient converges to zero, the result should follow. – dfrib Dec 18 '15 at 11:16
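
A minimal sketch of the argument above in the case where the minimum *is* attained. The function f(x) = (x - 3)^2 and the fixed-step gradient descent are hypothetical choices for illustration, not taken from the question: the iterates approach the stationary point x = 3, where the gradient vanishes and f reaches its infimum 0.

```python
def f(x: float) -> float:
    # Hypothetical convex function whose minimum is attained at x = 3.
    return (x - 3.0) ** 2


def grad_f(x: float) -> float:
    # Derivative of f; it is zero only at the minimizer x = 3.
    return 2.0 * (x - 3.0)


def gradient_descent(x0: float, step: float, iters: int) -> float:
    """Plain fixed-step gradient descent: x <- x - step * f'(x)."""
    x = x0
    for _ in range(iters):
        x -= step * grad_f(x)
    return x


x_star = gradient_descent(x0=10.0, step=0.1, iters=200)
print(f"x = {x_star:.6f}, f(x) = {f(x_star):.2e}, f'(x) = {grad_f(x_star):.2e}")
```

Here each step shrinks the distance to 3 by a factor of 0.8, so a vanishing gradient and convergence of f to its infimum happen together at a finite point.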

@dfri As I said in the question, if we assume the optimum of the convex function is attained, i.e. the minimizer is a finite point rather than lying at infinity, then F approaches its infimum as |g| approaches 0. But consider f(x) = exp(-x), for example, and you will see the crux of this question. – user5694458 Dec 19 '15 at 11:57
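
A small numerical sketch of the exp(-x) example, assuming plain fixed-step gradient descent starting at x = 0 (the step size, start point and checkpoints are illustrative choices, not from the question). The iterates drift toward infinity, the gradient tends to zero and f(x_k) tends to the infimum 0, yet f'(x) = -exp(-x) is never zero at any finite x, so there is no stationary point for the "local optimum is found where the gradient is zero" argument to land on.

```python
import math


def f(x: float) -> float:
    # Convex and bounded below by 0, but exp(-x) > 0 for every finite x,
    # so the infimum 0 is never attained.
    return math.exp(-x)


def grad_f(x: float) -> float:
    # f'(x) = -exp(-x) is never exactly zero: f has no stationary point.
    return -math.exp(-x)


def gradient_descent_trace(x0, step, iters, checkpoints):
    """Fixed-step gradient descent; record (k, x_k, f(x_k), |f'(x_k)|) at the given k."""
    x = x0
    trace = []
    for k in range(1, iters + 1):
        x -= step * grad_f(x)
        if k in checkpoints:
            trace.append((k, x, f(x), abs(grad_f(x))))
    return trace


trace = gradient_descent_trace(x0=0.0, step=1.0, iters=100_000,
                               checkpoints={10, 100, 1_000, 10_000, 100_000})
for k, x, fx, g in trace:
    print(f"k={k:>6}  x_k={x:7.3f}  f(x_k)={fx:.3e}  |f'(x_k)|={g:.3e}")
```

For this particular function |f'(x)| = f(x), so the gradient and the optimality gap shrink together while x_k grows without bound.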

@harold Thanks, I have posted this question on cs.stackexchange.com, but unfortunately I have not received an answer yet. What do you mean by "doesn't really work"? Do you mean that, in general, f(x_k) need not approach the infimum of f(x) even when the gradient g(x_k) approaches zero? – user5694458 Dec 19 '15 at 12:01

@user5694458 I meant the format: posting it as a picture. I guess it kind of works, but it's not how it's supposed to be done. By the way, I can't find the question there. – harold Dec 19 '15 at 12:23

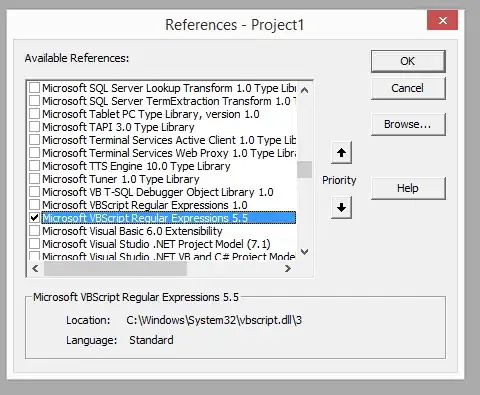

@harold Sorry, I actually posted it on scicomp.stackexchange.com (http://scicomp.stackexchange.com/questions/21607/conditions-of-convergence-of-convex-optimization). scicomp.stackexchange.com supports LaTeX, but Stack Overflow doesn't seem to, so I posted the question as a picture to preserve the formulas. – user5694458 Dec 19 '15 at 12:32