tl;dr: why does key lookup in sparse_hash_map become about 50x slower for specific data?

I am testing the speed of key lookups in sparse_hash_map from Google's sparsehash library, using a very simple Cython wrapper I've written. The hash table maps uint32_t keys to uint16_t values. For random keys, values, and queries I get more than 1M lookups/sec. However, for the specific data I need, performance barely exceeds 20k lookups/sec.

The wrapper is here. The table which runs slowly is here. The benchmarking code is:

benchmark.pyx:

    from sparsehash cimport SparseHashMap
    from libc.stdint cimport uint32_t
    from libcpp.vector cimport vector

    import time
    import numpy as np

    def fill_randomly(m, size):
        keys = np.random.random_integers(0, 0xFFFFFFFF, size)
        # 0 is a special domain-specific value
        values = np.random.random_integers(1, 0xFFFF, size)
        for j in range(size):
            m[keys[j]] = values[j]

    def benchmark_get():
        cdef int dummy
        cdef uint32_t i, j, table_key
        cdef SparseHashMap m
        cdef vector[uint32_t] q_keys
        cdef int NUM_QUERIES = 1000000
        cdef uint32_t MAX_REQUEST = 7448 * 2**19 - 1  # this is domain-specific

        time_start = time.time()

        ### OPTION 1 ###
        m = SparseHashMap('17.shash')

        ### OPTION 2 ###
        # m = SparseHashMap(16130443)
        # fill_randomly(m, 16130443)

        q_keys = np.random.random_integers(0, MAX_REQUEST, NUM_QUERIES)

        print("Initialization: %.3f" % (time.time() - time_start))

        dummy = 0
        time_start = time.time()

        for i in range(NUM_QUERIES):
            table_key = q_keys[i]
            dummy += m.get(table_key)
            dummy %= 0xFFFFFF  # to prevent overflow error

        time_elapsed = time.time() - time_start

        if dummy == 42:
            # So that the unused variable is not optimized away
            print("Wow, lucky!")

        print("Table size: %d" % len(m))
        print("Total time: %.3f" % time_elapsed)
        print("Seconds per query: %.8f" % (time_elapsed / NUM_QUERIES))
        print("Queries per second: %.1f" % (NUM_QUERIES / time_elapsed))

    def main():
        benchmark_get()

benchmark.pyxbld (because pyximport should compile in C++ mode):

    def make_ext(modname, pyxfilename):
        from distutils.extension import Extension
        return Extension(
            name=modname,
            sources=[pyxfilename],
            language='c++'
        )

run.py:

    import pyximport
    pyximport.install()

    import benchmark
    benchmark.main()

The results for 17.shash are:

    Initialization: 2.612
    Table size: 16130443
    Total time: 48.568
    Seconds per query: 0.00004857
    Queries per second: 20589.8

and for random data:

    Initialization: 25.853
    Table size: 16100260
    Total time: 0.891
    Seconds per query: 0.00000089
    Queries per second: 1122356.3

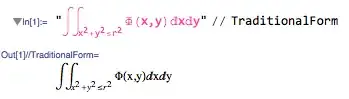

The key distribution in 17.shash is this (plt.hist(np.fromiter(m.keys(), dtype=np.uint32, count=len(m)), bins=50)):

From the documentation on sparsehash and gcc it seems that trivial hashing is used here (that is, x hashes to x).
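To make the collision concern concrete, here is a toy pure-Python model of how an identity hash can interact with patterned keys. It assumes an open-addressed table with a power-of-two bucket count and triangular-number (quadratic) probing, which is my understanding of how sparsehash probes. It is a simplified sketch, not the library's code. The names `average_insert_probes`, `NUM_BUCKETS`, and `strided_keys` are made up for this illustration, and the strided keys are hypothetical, not the actual contents of 17.shash.

```python
import random


def average_insert_probes(keys, num_buckets, hash_fn=lambda k: k):
    """Insert keys into a toy open-addressed table; return mean probes per insert.

    Assumes a power-of-two bucket count and triangular-number (quadratic)
    probing. This is a simplified model, not sparsehash's actual code.
    """
    mask = num_buckets - 1
    occupied = [False] * num_buckets
    total_probes = 0
    for key in keys:
        pos = hash_fn(key) & mask      # identity hash by default: x hashes to x
        step = 0
        while occupied[pos]:
            step += 1
            pos = (pos + step) & mask  # offsets 1, 3, 6, 10, ... from the home bucket
        occupied[pos] = True
        total_probes += step + 1
    return total_probes / len(keys)


NUM_BUCKETS = 4096  # hypothetical toy size, far smaller than the real table
NUM_KEYS = 2000

rng = random.Random(0)
random_keys = rng.sample(range(2**32), NUM_KEYS)
# Hypothetical patterned keys: multiples of 256 all share their low 8 bits.
strided_keys = [i * 256 for i in range(NUM_KEYS)]

random_cost = average_insert_probes(random_keys, NUM_BUCKETS)
strided_cost = average_insert_probes(strided_keys, NUM_BUCKETS)
print("random keys:  %.2f probes per insert" % random_cost)
print("strided keys: %.2f probes per insert" % strided_cost)
```

In this model the strided keys land in only a handful of home buckets, so each insert walks a long probe chain, while uniformly random keys rarely need more than a couple of probes. Whether the real data has a comparable pattern in its low bits is something the histogram above cannot show, since it only has 50 bins.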

Is there anything obvious that could be causing this behavior besides hash collisions? From what I have found, it is non-trivial to integrate a custom hash function (i.e. overload std::hash<uint32_t>) in a Cython wrapper.
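As a rough illustration of what a non-trivial hash would change, the sketch below applies the 32-bit finalizer from MurmurHash3 (commonly called fmix32) to the same kind of hypothetical strided keys and counts how many distinct home buckets they occupy. It is written in Python only to show the effect on bucket selection. An equivalent C++ functor would still have to be passed to sparse_hash_map from the wrapper, which this sketch does not attempt. `NUM_BUCKETS` and `strided_keys` are assumptions for the example, not values from my data.

```python
def fmix32(h):
    """MurmurHash3's 32-bit finalizer: spreads every input bit across the output."""
    h &= 0xFFFFFFFF
    h ^= h >> 16
    h = (h * 0x85EBCA6B) & 0xFFFFFFFF
    h ^= h >> 13
    h = (h * 0xC2B2AE35) & 0xFFFFFFFF
    h ^= h >> 16
    return h


NUM_BUCKETS = 4096  # hypothetical power-of-two bucket count
mask = NUM_BUCKETS - 1
# Hypothetical patterned keys: multiples of 256 share their low 8 bits.
strided_keys = [i * 256 for i in range(2000)]

# Home bucket = low bits of the hash, as in a power-of-two table.
identity_buckets = {k & mask for k in strided_keys}
mixed_buckets = {fmix32(k) & mask for k in strided_keys}
print("distinct home buckets, identity hash:", len(identity_buckets))
print("distinct home buckets, fmix32:", len(mixed_buckets))
```

With the identity hash only the low bits of each key pick the bucket, so keys that differ only in their high bits pile up. A mixer like this lets the high bits influence the bucket index as well.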