I am trying to use mclapply to parallelize cross-validation for a model-fitting procedure on a very large design matrix X (~10GB) and response vector y. Let's say X is of dimension n-by-p: n=1000, p=1,000,000. Since X is so large, it is stored on disk as a file-backed big.matrix object and accessed using methods from the R package bigmemory.

The workflow for a 4-fold cross validation is as follows.

- Set up an index vector `cv.ind` of length `n`, which stores a sequence of numbers from 1 to 4, indicating which observation in `X` belongs to which fold for CV.

- Set up 4 cores. In the i-th core, copy the corresponding training and test sub-matrices for the i-th fold CV.

- Fit a model for each fold in each core.

- Collect results, return.
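As a minimal sketch of the first step, a balanced fold-index vector can be built in base R as follows. The seed value and the use of `rep_len()` plus `sample()` are illustrative assumptions, not necessarily how `cv.ind` is built in the actual code.

```r
set.seed(1)      # illustrative seed only (assumption)
n <- 1000        # number of observations (rows of X)
nfolds <- 4      # number of CV folds

# Repeat 1..nfolds until length n, then shuffle so folds are assigned at random.
cv.ind <- sample(rep_len(seq_len(nfolds), n))

# Each fold label should appear n / nfolds = 250 times.
fold.counts <- table(cv.ind)
print(fold.counts)
```

Here `which(cv.ind != i)` then gives the training rows and `which(cv.ind == i)` the test rows for fold `i`.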

The cross-validation function looks like the following.

    cv.ncvreg <- function(X, y, ncore, nfolds=5, seed, cv.dir = getwd(),
                          cv.ind) {
      ## some more setup ...
      ## ...
      ## ...

      ## pass the descriptor info to each core ##
      xdesc <- describe(X)

      ## use mclapply instead of parLapply
      fold.results <- parallel::mclapply(X = 1:nfolds, FUN = cvf, XX=xdesc, y=y,
                                         cv.dir = cv.dir, cv.ind=cv.ind,
                                         cv.args=cv.args,
                                         mc.set.seed = seed, mc.silent = FALSE,
                                         mc.cores = ncore, mc.preschedule = FALSE)

      ## return results
    }
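To illustrate how `mclapply` forwards extra named arguments to `FUN` for each fold, here is a toy sketch using only the base `parallel` package. The function `toy.cvf` and the small `cv.ind` vector are hypothetical stand-ins for `cvf` and the real fold labels, and the core count of 2 is an arbitrary choice.

```r
library(parallel)

# Hypothetical stand-in for cvf: returns the number of test rows in fold i.
toy.cvf <- function(i, cv.ind) sum(cv.ind == i)

# Toy fold labels: 1,2,3,4,1,2,3,4,1,2
cv.ind <- rep_len(1:4, 10)

# Forking is not available on Windows, where mc.cores must be 1.
ncore <- if (.Platform$OS.type == "windows") 1L else 2L

# Each element of X is passed as the first argument of FUN;
# cv.ind is forwarded unchanged to every call.
fold.sizes <- mclapply(X = 1:4, FUN = toy.cvf, cv.ind = cv.ind,
                       mc.cores = ncore, mc.preschedule = FALSE)
print(unlist(fold.sizes))
```

With `mc.preschedule = FALSE`, one child process is forked per element of `X`, with at most `mc.cores` running at a time.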

The R function cvf is run in each core. It copies the training and test matrices for the i-th fold as two big.matrix objects, fits a model, computes some statistics, and returns the results.

    cvf <- function(i, XX, y, cv.dir, cv.ind, cv.args) {
      ## argument 'XX' is the descriptor for big.matrix
      # reference to the big.matrix by descriptor info
      XX <- attach.big.matrix(XX)

      cat("CV fold #", i, "\t--Copy training-- Start time: ", format(Sys.time()), "\n\n")
      ## physically copy sub big.matrix for training
      idx.train <- which(cv.ind != i) ## get row idx for i-th fold training
      deepcopy(XX, rows = idx.train, type = 'double',
               backingfile = paste0('x.cv.train_', i, '.bin'),
               descriptorfile = paste0('x.cv.train_', i, '.desc'),
               backingpath = cv.dir)
      ## file.path() adds the separator that getwd() does not end with
      cv.args$X <- attach.big.matrix(file.path(cv.dir, paste0('x.cv.train_', i, '.desc')))
      cat("CV fold #", i, "\t--Copy training-- End time: ", format(Sys.time()), "\n\n")

      cat("CV fold #", i, "\t--Copy test-- Start time: ", format(Sys.time()), "\n\n")
      ## physically copy remaining part of big.matrix for testing
      idx.test <- which(cv.ind == i) ## get row idx for i-th fold testing
      deepcopy(XX, rows = idx.test, type = 'double',
               backingfile = paste0('x.cv.test_', i, '.bin'),
               descriptorfile = paste0('x.cv.test_', i, '.desc'),
               backingpath = cv.dir)
      X2 <- attach.big.matrix(file.path(cv.dir, paste0('x.cv.test_', i, '.desc')))
      cat("CV fold #", i, "\t--Copy test-- End time: ", format(Sys.time()), "\n\n")

      # cv.args$X <- XX[cv.ind!=i, , drop=FALSE]
      cv.args$y <- y[cv.ind!=i]
      cv.args$warn <- FALSE

      cat("CV fold #", i, "\t--Fit ncvreg-- Start time: ", format(Sys.time()), "\n\n")
      ## call 'ncvreg' function, fit penalized regression model
      fit.i <- ncvreg(X=cv.args$X, y=cv.args$y, family=cv.args$family,
                      penalty = cv.args$penalty, lambda = cv.args$lambda, convex = cv.args$convex)
      # fit.i <- do.call("ncvreg", cv.args)
      cat("CV fold #", i, "\t--Fit ncvreg-- End time: ", format(Sys.time()), "\n\n")

      ## evaluate the fit on the held-out fold
      y2 <- y[cv.ind==i]
      yhat <- matrix(predict(fit.i, X2, type="response"), length(y2))
      loss <- loss.ncvreg(y2, yhat, fit.i$family)
      pe <- if (fit.i$family=="binomial") {(yhat < 0.5) == y2} else NULL
      list(loss=loss, pe=pe, nl=length(fit.i$lambda), yhat=yhat)
    }
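For a sense of scale, the sizes of the copies made by cvf can be worked out with a few lines of arithmetic. This is a rough sketch that assumes 8-byte doubles (matching `type = 'double'` above), exactly balanced folds, and 4 folds as in the workflow; it ignores any file-system or descriptor overhead.

```r
n <- 1000                  # observations
p <- 1e6                   # features
nfolds <- 4
bytes.per.double <- 8      # assumption: dense double storage

# Full design matrix, in GB (1 GB = 1e9 bytes here).
full.gb <- n * p * bytes.per.double / 1e9

# Per-fold copies: 750 training rows and 250 test rows.
n.test   <- n / nfolds
train.gb <- (n - n.test) * p * bytes.per.double / 1e9
test.gb  <- n.test * p * bytes.per.double / 1e9

# All folds together, when the 4 workers run at the same time.
total.copies.gb <- nfolds * (train.gb + test.gb)

print(c(full = full.gb, train = train.gb, test = test.gb, all.copies = total.copies.gb))
```

Under these assumptions the full matrix is about 8 GB, each training copy about 6 GB, each test copy about 2 GB, and the four folds together add roughly 32 GB of file-backed data on top of the original.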

Up to this point, the code works very well when the design matrix X is not too large, say n=1000, p=100,000 with size ~1GB. However, if p=1,000,000, so that the size of X becomes ~10GB, the model fitting step in each core seems to run forever (the following portion):

    #...
    cat("CV fold #", i, "\t--Fit ncvreg-- Start time: ", format(Sys.time()), "\n\n")
    ## call 'ncvreg' function, fit penalized regression model
    fit.i <- ncvreg(X=cv.args$X, y=cv.args$y, family=cv.args$family,
                    penalty = cv.args$penalty, lambda = cv.args$lambda, convex = cv.args$convex)
    # fit.i <- do.call("ncvreg", cv.args)
    cat("CV fold #", i, "\t--Fit ncvreg-- End time: ", format(Sys.time()), "\n\n")
    #...

Notes:

- If I run 'ncvreg()' on the raw matrix (10GB) once, it takes ~2.5 minutes.

- If I run the cross validation sequentially using

forloop but notmclapply, the code works well, the model fitting for each fold 'ncvreg()' works fine too (~2 minutes), though the whole process takes ~25 minutes. - I tried 'parLapply' at first with the same issue. I switched to 'mclapply' due to reason here.

- The data copy step (i.e.,

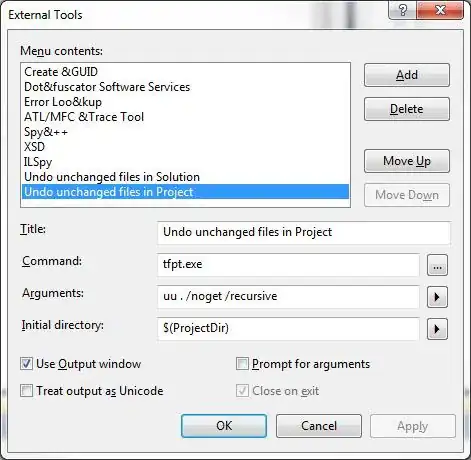

deepcopyportion) in each core works well, and takes ~2 mins to get the training and test data sets copied and file-backed on disk. - I tried to monitor the CPU usage, below is one screenshot. As we can see, in the left figure, each of 4 rsessions takes ~25% CPU usage, while there is a process, kernel_task uses up ~100% CPU. As time elapses, kernel_task can take even 150% CPU. In addition, the CPU history panel (right bottom) shows that most of the CPU usage is from system, not user, since the red area dominates green area.
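The user-versus-system split seen in the activity monitor can also be measured from inside R. The following is a minimal sketch using base R's `system.time()`; the helper `time.split` is a hypothetical name, and the toy loop merely stands in for the `ncvreg()` call inside each worker.

```r
# Hypothetical helper: time an expression and report user, system,
# and elapsed seconds for the current process.
time.split <- function(expr) {
  tm <- system.time(expr)   # expr is evaluated lazily, inside the timer
  c(user    = tm[["user.self"]],
    system  = tm[["sys.self"]],
    elapsed = tm[["elapsed"]])
}

# Toy workload standing in for the model fit.
res <- time.split(for (k in 1:50) sum(sqrt(seq_len(1e5))))
print(res)
```

Wrapping the fit inside cvf in this way would show, per fold, whether the time is spent in user code or in system calls.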

My questions:

- Why does the model fitting process take forever when run in parallel? What could be the possible reasons?

- Am I on the right track to parallelize the CV procedure for big.matrix? Are there any alternative approaches?

I would appreciate any insights that help fix this issue. Thanks in advance!